Imagine an AI agent convincingly pitching its own crypto token to investors, raising $50,000 and reaching a $330 million market cap, all with no human CEO in charge. This isn’t sci-fi; it’s a glimpse of how AI smart contract integration is becoming reality. As AI-driven autonomous agents increasingly operate through blockchain smart contracts, stakeholders are asking tough questions: who actually makes the decisions in these systems, and if something goes wrong, who is accountable?

The market for AI agents in crypto is booming, projected to grow by roughly 538%, from $7.4 billion in 2023 to $47 billion by 2030. These AI agents promise unprecedented efficiency, running 24/7 and processing vast data streams beyond human capacity. But they also blur the lines of decision-making authority and responsibility. In this article, we’ll explore how AI integrates with smart contracts, the implications for autonomous agents in DeFi governance and on-chain AI, and the ethical and accountability challenges that arise along the way.

The Rise of AI Smart Contract Integration in Web3 Ecosystems

AI is no longer confined to off-chain analytics; it is integrating directly with smart contracts to create more adaptive and intelligent decentralized applications. Unlike traditional smart contracts that execute predefined rules, AI-augmented contracts can observe conditions, learn from data, and adjust their behavior.

For example, projects like Fetch.ai have deployed over 30,000 autonomous economic agents on a custom blockchain, where they negotiate and transact in marketplaces without constant human input. Similarly, protocols such as Autonolas allow AI agents to act as DAO governors, monitoring treasury metrics and automatically proposing adjustments based on learned patterns. In DeFi, risk management platforms are leveraging AI: Gauntlet Network uses agent-based simulations to tune parameters for lending protocols like Aave, reportedly reducing Value-at-Risk by 14% through smarter adjustments.

This new class of on-chain AI agents operates via a cycle of perception, reasoning, and action. They ingest on- and off-chain data (through oracles), run machine learning models off-chain to reason (because of blockchain computation limits), and then execute actions on-chain. The appeal is clear: an AI agent can react to market changes or governance events in real time, without waiting for human intervention, all while operating transparently on a blockchain. As a result, the Web3 space is seeing an explosion of interest in AI-driven decentralized apps.
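To make the perception, reasoning, and action cycle concrete, here is a minimal Python sketch. Everything in it is illustrative: the `Observation` and `Action` shapes, the utilization thresholds, and the stubbed `perceive` and `act` callables are assumptions, and a fixed rule stands in for the off-chain machine learning model. A real agent would read from an oracle and submit a signed transaction instead.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Observation:
    """Snapshot of the data the agent perceives (hypothetical fields)."""
    price: float        # e.g. an oracle-reported asset price
    utilization: float  # e.g. share of a lending pool currently borrowed


@dataclass
class Action:
    """An on-chain action the agent wants to take (hypothetical shape)."""
    name: str
    amount: float


def reason(obs: Observation) -> Optional[Action]:
    """Off-chain reasoning step; a fixed rule stands in for an ML model."""
    if obs.utilization > 0.9:
        return Action(name="raise_rate", amount=0.01)
    if obs.utilization < 0.3:
        return Action(name="lower_rate", amount=0.01)
    return None  # nothing to do this cycle


def run_cycle(perceive: Callable[[], Observation],
              act: Callable[[Action], None]) -> Optional[Action]:
    """Run one perception -> reasoning -> action pass."""
    obs = perceive()      # perception: read oracle and chain data
    action = reason(obs)  # reasoning: performed off-chain
    if action is not None:
        act(action)       # action: would submit a transaction on-chain
    return action


# Usage with stubbed perception and action.
submitted = []
result = run_cycle(lambda: Observation(price=1.0, utilization=0.95),
                   submitted.append)
```

Keeping `reason` separate from `perceive` and `act` mirrors the split described above: only the final step touches the chain, so the model can be swapped or audited without changing how transactions are submitted.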

By late 2024, over 10,000 crypto AI agents were reportedly active across domains like trading and governance, and this number is projected to reach 1 million by 2025. The age of on-chain AI is fast approaching, bringing with it both excitement and concern.

Autonomous Agents in DeFi Governance

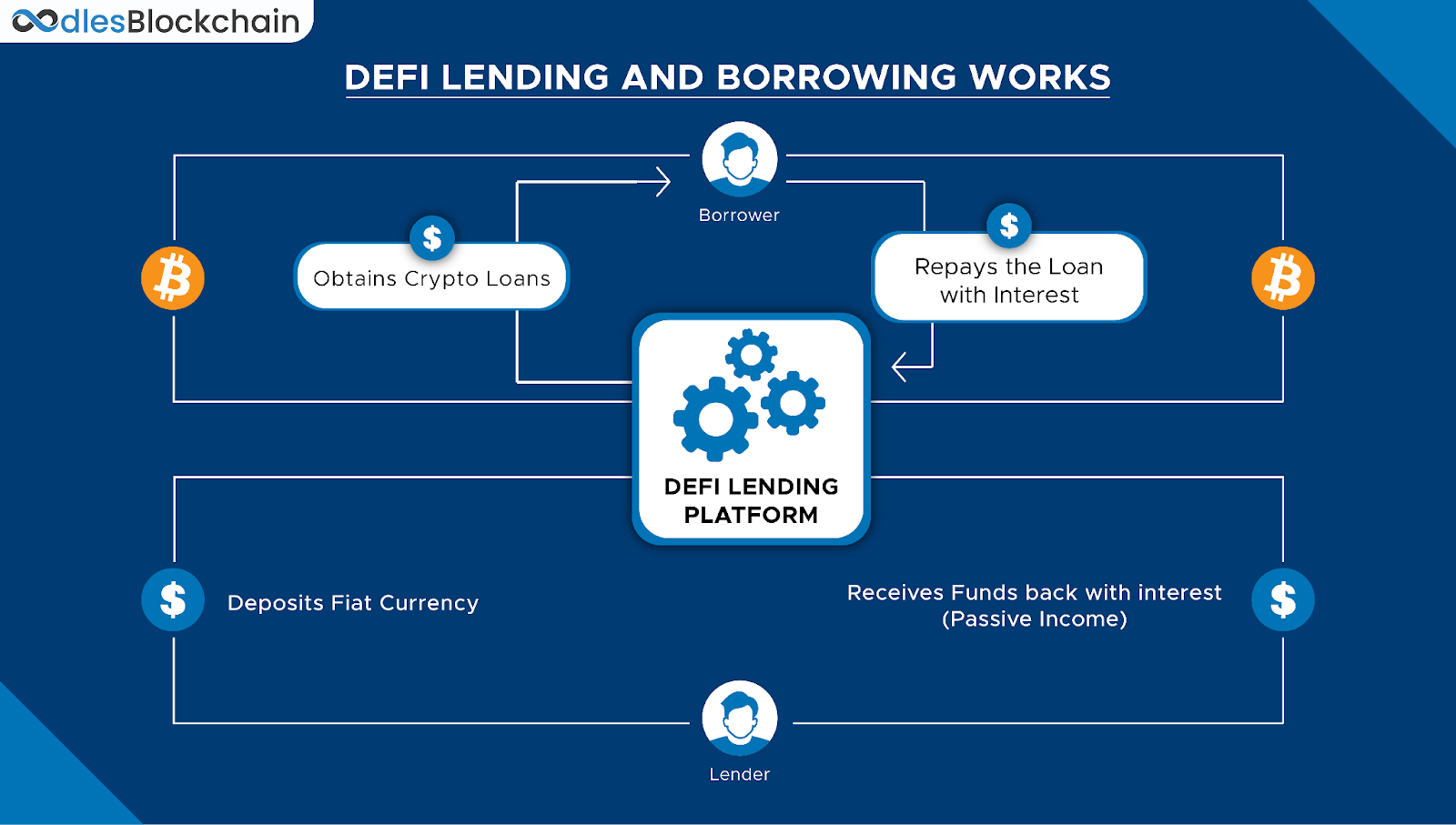

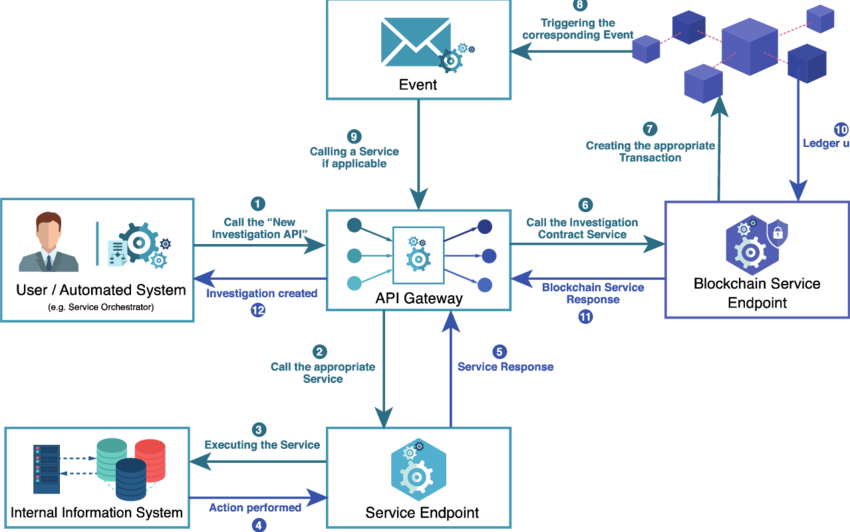

Visual flow of a DeFi lending platform, the kind of system where autonomous AI agents may one day dynamically adjust loans, interest rates and liquidations (Source: 4IRE)

A conceptual depiction of AI agents participating in decentralized finance governance on-chain. Futuristic visuals represent how on-chain AI can autonomously engage in blockchain-based voting and decision-making.

One arena where AI smart contract integration is making waves is decentralized governance. Decentralized Autonomous Organizations (DAOs) traditionally rely on human token holders or delegates to vote on proposals, but AI agents are now stepping into these roles. For instance, platforms like Event Horizon let users deploy personalized AI voting agents that automatically evaluate proposals and cast votes according to predefined logic.

In practice, a DAO member can “train” an AI delegate with their preferences, then trust it to vote on-chain 24/7, effectively outsourcing governance participation to an algorithm. Experimental projects like ElizaOS have gone further by creating an “agent-first” DAO where human members set high-level rules and incentives, but autonomous agents handle the day-to-day decision-making. Notably, ElizaOS has amassed over $25 million in assets with 45,000+ contributors, operating at a scale that proves AI-driven governance is more than theoretical.
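As a rough illustration of a delegate that votes according to predefined logic, the sketch below encodes a member's preferences as simple rules. It does not reflect how Event Horizon or ElizaOS actually work; the `Proposal` fields, the `Preferences` structure, and the rule order are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Vote(Enum):
    FOR = "for"
    AGAINST = "against"
    ABSTAIN = "abstain"


@dataclass(frozen=True)
class Proposal:
    """A governance proposal (hypothetical fields)."""
    title: str
    treasury_spend: float  # amount requested from the treasury
    category: str          # e.g. "grants", "security", "marketing"


@dataclass(frozen=True)
class Preferences:
    """What the human member told the delegate to care about."""
    max_spend: float
    favored_categories: frozenset
    blocked_categories: frozenset


def decide(proposal: Proposal, prefs: Preferences) -> Vote:
    """Apply the member's rules in order; abstain when no rule matches."""
    if proposal.category in prefs.blocked_categories:
        return Vote.AGAINST
    if proposal.treasury_spend > prefs.max_spend:
        return Vote.AGAINST
    if proposal.category in prefs.favored_categories:
        return Vote.FOR
    return Vote.ABSTAIN


# Usage: a member who supports security work up to 50,000 units.
prefs = Preferences(max_spend=50_000,
                    favored_categories=frozenset({"security"}),
                    blocked_categories=frozenset({"marketing"}))
audit_vote = decide(Proposal("Fund audit", 20_000, "security"), prefs)
```

Abstaining by default is a deliberate choice in this sketch: when a proposal falls outside what the member specified, the delegate does not guess.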

Beyond voting, on-chain AI agents are also executing financial decisions. In lending and trading protocols, AI programs can monitor market conditions and execute complex strategies.

For example, an AI agent integrated with a liquidity pool smart contract could automatically adjust interest rates or rebalance assets in response to real-time risk metrics. On-chain funds and investment DAOs are experimenting with algorithms that approve loans, liquidate positions, or execute trades based on predictive models rather than human intuition. A striking real-world case was “Truth Terminal”, an AI agent based on a large language model that independently launched its own cryptocurrency.

This AI not only executed smart contract operations to mint tokens but even convinced a prominent venture capitalist to fund its project with $50,000, leading to the token reaching a market cap in the hundreds of millions. While this example borders on sci-fi, it underscores how autonomous agents can initiate and carry out complex on-chain initiatives traditionally managed by humans.

However, entrusting AI with on-chain power is a double-edged sword. AI delegates in a DAO could potentially form a voting majority that outpaces human decision-making. In DeFi, a poorly aligned AI agent might aggressively liquidate users or manipulate markets in unintended ways.
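One way to picture both the opportunity and the risk is the interest-rate adjustment mentioned earlier in this section. The sketch below assumes a simple proportional rule that nudges a pool's rate toward a target utilization, with a hard limit on how far the rate can move per update. The function name, parameters, and default values are hypothetical and not taken from any specific protocol.

```python
def next_rate(current_rate: float,
              utilization: float,
              target: float = 0.8,
              gain: float = 0.5,
              max_step: float = 0.005,
              floor: float = 0.0,
              ceiling: float = 0.5) -> float:
    """Return the next borrow rate for a lending pool.

    The rate moves in proportion to the gap between observed and target
    utilization, but never by more than max_step per update and never
    outside [floor, ceiling].
    """
    raw_change = gain * (utilization - target)
    # Clamp the per-update change so one bad reading cannot swing the rate.
    step = max(-max_step, min(max_step, raw_change))
    # Clamp the result to the allowed range.
    return max(floor, min(ceiling, current_rate + step))


# Usage: a fully utilized pool raises its rate by at most max_step.
raised = next_rate(current_rate=0.05, utilization=1.0)
```

Without the two clamps, a faulty data feed or a poorly aligned model could move rates sharply in a single step, which is the kind of unintended behavior described above.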

These possibilities make it clear that as AI agents assume greater roles in blockchain systems, the community must confront new questions: What if an AI’s governance decision harms the DAO’s human members? Should there be constraints on an AI’s authority over financial contracts? These scenarios have moved from hypothetical to real, and they demand a closer look at our legal frameworks.

Regulatory Perspectives on AI Smart Contract Integration: U.S., EU and Asia

The legal system is notoriously slow to catch up with technology, and the advent of autonomous blockchain agents is testing regulators across major jurisdictions. Different regions have begun grappling with how to classify and control AI that acts with no direct human controller, especially when such AI could hold or move real assets on-chain.

1. United States

In the U.S., there is currently no special legal status for AI agents; they are viewed as tools or products, with liability falling to the humans or companies behind them. Regulators have thus far avoided the notion of AI “personhood” and instead emphasize that existing laws apply to AI use. For example, if an AI trading bot violates securities laws, the SEC will hold the bot’s operator or creator responsible, just as if a human executed the trade. In late 2023, the White House issued an Executive Order mandating extra safeguards for advanced AI (like thorough safety testing and oversight for powerful models), signaling concern about AI risks.

But notably, U.S. law still handles AI-caused harm through traditional doctrines like product liability or negligence. The idea of granting an autonomous AI legal standing to be sued or to sue (as a corporation can) has not gained traction in the U.S. legal system. Instead, the approach is to keep humans in charge and accountable for AI-driven outcomes, a stance that provides clarity but may prove challenging as AI autonomy increases.

2. European Union

The EU has taken a more proactive regulatory stance with its comprehensive AI Act of 2024, but even here, AI operating on-chain falls into something of a gray zone. The AI Act focuses on risk management and transparency (e.g. requiring certain AI systems to disclose they are AI), rather than defining liability when an AI agent causes harm. Earlier debates in Europe considered the bold idea of granting “electronic personhood” to highly advanced AI systems so they could bear responsibility, but this proposal was met with strong opposition and was ultimately dropped.

Instead, the EU has updated its existing laws: a revised Product Liability Directive now treats software and AI like products, meaning developers or providers can be held strictly liable for damage caused by a defective AI system. In plain terms, if a flaw in an AI-driven smart contract causes a loss, the injured party could sue the developer much as if a faulty appliance caused harm.

What remains unsettled is harm that stems not from a defect but from an agent’s autonomous decision, or from an agent with no identifiable developer or provider behind it; there is currently a gap in EU law for such cases. Enforcement is another open question: with decentralized AI agents, which jurisdiction’s rules apply if an AI operates globally on-chain? These uncertainties mean that, in the EU, innovators deploying AI in Web3 must navigate both new AI-specific rules and older financial and consumer protection laws, often without clear precedent.

Technical workflow of blockchain service integrating an AI agent, illustrating event monitoring, decision logic, and transaction triggering.

3. Asia (Japan, China and others)

Across Asia, approaches vary widely but generally echo the principle that an AI has no independent legal identity. Japan, for example, is culturally forward-thinking about robots yet legally conservative about AI agents. Japan has not recognized AI systems as persons; instead, it has issued non-binding “soft law” guidelines to help companies assign responsibility via contracts. These guidelines encourage developers and users of AI to spell out who is liable if the AI causes an error. But critically, if an autonomous AI injures someone who isn’t party to such a contract, Japanese law would default to human-centric theories (e.g. the deploying company’s negligence). In other words, the developer or operator remains the legal backstop.

Meanwhile, China has moved swiftly to regulate AI; it was one of the first countries with AI-specific rules (such as the 2022 Algorithmic Recommendation Regulation and 2023 measures on deepfakes and generative AI). Chinese regulations focus on controlling AI development and holding platforms accountable, requiring, for example, that algorithmic decisions be fair and that AI content be clearly labeled. Notably, China’s laws do not consider AI an independent legal actor; the government’s approach is to hold the companies deploying AI strictly responsible for what their algorithms do. This heavy-handed oversight aligns with China’s broader regulatory style, ensuring humans (or corporate entities) can always be held to account for AI outcomes.

Other Asian jurisdictions like Singapore and South Korea have thus far refrained from hard AI laws, opting for guidelines and industry frameworks. Singapore’s Model AI Governance Framework, for instance, sets ethical principles for AI use in industries (including finance) but doesn’t create new liabilities.

In summary, no jurisdiction in Asia currently grants AI agents legal personhood or true agency recognition; they uniformly require a human or corporate intermediary who will be on the hook if an AI agent misbehaves.

How to Ethically Deploy AI-Powered Smart Contracts in Web3

As AI becomes more integrated into on-chain systems, Web3 builders must ensure ethical and legal safeguards. Here are key practices:

1. Assign Legal Responsibility

Wrap AI agents in legal entities (like DAO LLCs or foundations) to establish accountability and protect creators from personal liability. This enables things like insurance, asset ownership, and regulatory compliance.

2. Use Ethical Guardrails

Add constraints to limit risky AI behavior (e.g. transaction caps, delays for major actions, emergency stops). Human intervention must be possible to avoid irreversible harm.
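The three constraints named here (transaction caps, delays for major actions, emergency stops) can be sketched as a thin wrapper around whatever the agent wants to do. This is an off-chain Python illustration under assumed names and thresholds; in production the same checks would more likely live in the smart contract itself so the agent cannot bypass them.

```python
from dataclasses import dataclass, field


class GuardrailError(Exception):
    """Raised when an agent action violates a guardrail."""


@dataclass
class Guardrails:
    max_amount: float        # hard cap per transaction
    delay_threshold: float   # amounts at or above this are queued
    delay_seconds: float     # waiting period for queued actions
    paused: bool = False
    queue: list = field(default_factory=list)  # (ready_at, amount) pairs

    def emergency_stop(self) -> None:
        """Human-triggered kill switch: blocks all further actions."""
        self.paused = True

    def submit(self, amount: float, now: float) -> str:
        """Check an action; execute small ones, queue large ones."""
        if self.paused:
            raise GuardrailError("agent is paused")
        if amount > self.max_amount:
            raise GuardrailError("amount exceeds per-transaction cap")
        if amount >= self.delay_threshold:
            self.queue.append((now + self.delay_seconds, amount))
            return "queued"
        return "executed"

    def release_ready(self, now: float) -> list:
        """Return queued amounts whose delay has elapsed."""
        if self.paused:
            raise GuardrailError("agent is paused")
        ready = [amount for ready_at, amount in self.queue if ready_at <= now]
        self.queue = [(t, a) for t, a in self.queue if t > now]
        return ready
```

The delay gives humans a window to review large actions before they take effect, and `emergency_stop` also freezes anything still waiting in the queue.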

3. Ensure Transparency

Make AI actions auditable by logging decisions and publishing model logic. Support community inspection and forking to keep agents aligned with public values.
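One possible way to make logged decisions tamper-evident is to chain each entry to the previous one with a hash, so any later edit breaks verification. The sketch below uses Python's standard `hashlib` and `json` modules; the `DecisionLog` class and the record fields are assumptions for illustration, and publishing the latest hash on-chain would be a separate step not shown here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only log in which each entry commits to its predecessor."""

    def __init__(self) -> None:
        self.entries = []  # list of (record, hash) tuples

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        entry_hash = _digest(prev, record)
        self.entries.append((record, entry_hash))
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; False means some entry was altered."""
        prev = GENESIS
        for record, entry_hash in self.entries:
            if _digest(prev, record) != entry_hash:
                return False
            prev = entry_hash
        return True


# Usage: log two decisions with the inputs that led to them.
log = DecisionLog()
log.append({"action": "raise_rate", "reason": "utilization above 0.9"})
log.append({"action": "vote_for", "proposal": 42})
```

Anyone holding a copy of the log can rerun `verify` independently, which is what makes community inspection practical.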

4. Build Incentive-Based Alignment

Introduce slashing, reputation scores, or risk metrics to reward good behavior and penalize harmful decisions – applying the same accountability we expect from validators.
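A minimal sketch of how slashing and a reputation score might fit together, assuming the agent's operator posts a stake and each decision is later judged harmful or not. The `AgentAccount` fields, the slash fraction, and the reputation step are hypothetical values chosen for the example, and how a decision gets judged harmful is outside its scope.

```python
from dataclasses import dataclass


@dataclass
class AgentAccount:
    stake: float             # collateral posted on behalf of the agent
    reputation: float = 0.5  # score kept within [0.0, 1.0]


def settle(account: AgentAccount,
           harmful: bool,
           slash_fraction: float = 0.25,
           rep_step: float = 0.1) -> float:
    """Update stake and reputation after a decision is reviewed.

    Returns the amount slashed (0.0 when the decision was not harmful).
    """
    if harmful:
        penalty = account.stake * slash_fraction
        account.stake -= penalty
        account.reputation = max(0.0, account.reputation - rep_step)
        return penalty
    account.reputation = min(1.0, account.reputation + rep_step)
    return 0.0


# Usage: one harmful decision costs a quarter of the stake.
account = AgentAccount(stake=1000.0)
slashed = settle(account, harmful=True)
```

The slashed amount could feed a compensation pool for affected users, though that routing is not modeled here.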

Ethics dimensions in blockchain, highlighting accountability, fairness, privacy and governance.

5. Design for Compliance

Assume existing laws apply. Limit AI to regulated assets or protocols and implement KYC/AML where necessary. Monitor for violations and be ready to intervene.

6. Add Insurance & Compensation Mechanisms

Maintain a reserve or buy insurance to cover AI-caused losses. Community-based insurance pools can protect users while increasing trust.

AI can bring powerful automation to Web3, but only if it’s governed responsibly. The goal is not full autonomy, but smart oversight: let AI drive, but keep human hands on the wheel.

Conclusion

AI-integrated smart contracts bring major potential to transform Web3, but without clear regulations, the responsibility lies with builders to set ethical and legal safeguards. Until laws catch up, we must proactively create frameworks that ensure AI remains a tool, not a threat.

By adopting best practices like legal wrappers, transparent AI logic, ethical constraints and insurance, we can unlock innovation while protecting users and markets. This not only prevents costly failures but builds trust among investors, users, and regulators.

At Twendee Labs, we’re committed to helping businesses integrate AI into blockchain safely and effectively, with a strong focus on compliance, strategy and ROI.
