Internal tools are the unsung heroes of enterprise agility. From internal dashboards and AI agents to low-code automation workflows, these systems drive productivity behind the scenes. Yet, many of them operate outside the purview of traditional security frameworks. And that’s where the danger lies.

In 2025, the real risk isn’t just exposed APIs or missing patches; it’s the silent sprawl of internal systems built faster than they can be secured. These tools often have privileged access, limited observability, and insufficient governance. This blog examines the most critical mistakes that quietly turn internal tool ecosystems into high-risk environments.

Internal Tool Security in 2025: A Perfect Storm of Velocity, Visibility, and AI

In 2025, internal systems aren’t just support tools; they’re the engine room of digital operations. But as organizations embrace rapid innovation, internal toolchains have grown sprawling, autonomous, and increasingly opaque. What was once a handful of internal dashboards is now a loosely governed ecosystem of no-code interfaces, internal APIs, workflow engines, and embedded AI agents, often launched without a formal security review.

Three forces converge to create this perfect storm:

1. Velocity Over Verification

Business units now build faster than IT can govern. Low-code platforms and citizen development initiatives have enabled marketing, HR, and operations teams to spin up internal tools without engineering or security involvement. According to Gartner, by 2025, 70% of new business applications will be created by users outside the IT department.

What was once a controlled SDLC is now a distributed development model where tool deployment precedes policy. These systems often bypass traditional review processes: no threat modeling, no access boundary definition, no logging strategy. Even when well-intentioned, they introduce components that interact with production data or internal workflows without ever passing through formal change control.

In essence, speed becomes a threat multiplier, especially when internal tools directly touch data, automate actions, or act on behalf of the organization.

Key risks from this shift:

- Internal tools ship with default access roles, often granting edit or admin rights for convenience.

- Security architecture becomes reactive, addressing issues only after tools are in production or exploited.
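The missing steps described above (no threat modeling, no access boundary, no logging strategy, permissive default roles) can be turned into a simple gate that runs before an internal tool goes live. The sketch below assumes a hypothetical `ToolManifest` that each team fills in at launch; the field names and the set of privileged roles are illustrative, not taken from any specific platform.

```python
from dataclasses import dataclass

# Hypothetical roles considered too powerful to hand out by default.
PRIVILEGED_DEFAULTS = {"edit", "editor", "admin", "owner"}


@dataclass
class ToolManifest:
    """Hypothetical launch description of an internal tool."""
    name: str
    default_role: str
    threat_model: bool = False      # has a threat model been written?
    access_boundary: bool = False   # are data and action boundaries defined?
    logging: bool = False           # is there a logging strategy?


def launch_findings(m: ToolManifest) -> list[str]:
    """Return blocking findings; an empty list means the tool may ship."""
    findings = []
    if m.default_role.lower() in PRIVILEGED_DEFAULTS:
        findings.append(f"{m.name}: default role '{m.default_role}' grants write/admin access")
    if not m.threat_model:
        findings.append(f"{m.name}: no threat model")
    if not m.access_boundary:
        findings.append(f"{m.name}: no access boundary defined")
    if not m.logging:
        findings.append(f"{m.name}: no logging strategy")
    return findings
```

For example, `launch_findings(ToolManifest(name="refund-dashboard", default_role="admin", logging=True))` returns three findings (privileged default role, no threat model, no access boundary), so the launch would be held until they are addressed.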

2. Vanishing Visibility

Unlike production systems, internal tools often fall outside of centralized monitoring. They’re less likely to be registered in CMDBs, audited for compliance, or integrated into SIEMs. This creates what security teams refer to as “invisible infrastructure”: software that holds sensitive logic but flies under the radar. IBM’s 2024 breach response report noted that breaches involving internal applications took 38% longer to detect and resolve, largely due to lack of visibility and logging.

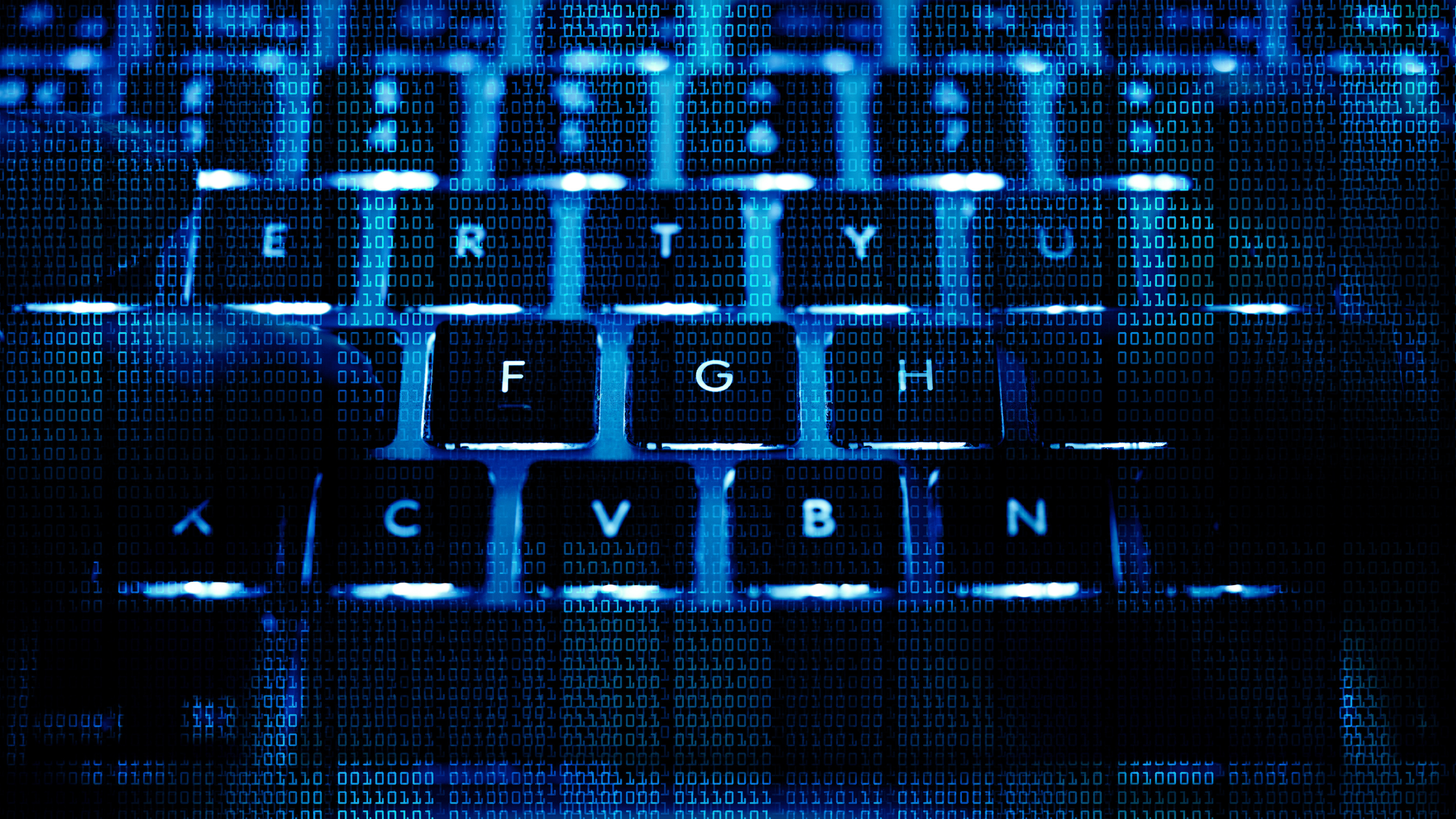

Global infostealer activity by family and affected hosts (Source: Flashpoint, 2024)

Internal dashboards, low-code tools, and internal AI agents frequently store or reuse credentials without MFA or centralized oversight, turning them into silent entry points. That same lack of auditability and logging is a large part of why breaches involving internal systems take longer to detect and resolve.

A key example of this hidden risk comes from credential theft. According to Flashpoint’s Infostealer Quickview 2024 Midyear, over 53 million credentials have been stolen through malware such as RedLine, StealC, and Vidar, often harvested from browser-saved logins and under-secured internal systems.

3. Autonomous AI Agents

AI-native internal tools are no longer experimental; they’re operational. From in-house copilots that assist with reporting to workflow agents that initiate API calls or approve requests, these systems now act as semi-autonomous actors inside the enterprise stack.

What makes them uniquely risky is not just their power; it’s the lack of predictable behavior. Large Language Models (LLMs) can rephrase, summarize, or generate actions based on ambiguous prompts, drawing on training data that security teams have no visibility into. The more tightly integrated they become with business logic, the greater the potential blast radius of a flawed decision or a hallucinated output.

This isn’t hypothetical. In real internal use cases, AI agents have:

- Responded to malformed queries by returning confidential database rows not explicitly requested.

- Composed system-level code changes that passed automated tests but introduced policy violations.

- Interpreted vague instructions as authorization to perform irreversible actions like disabling accounts or altering approval workflows.

Worse still, many of these agents operate without centralized logging or execution tracing. When something goes wrong, there’s no clear way to audit what the model “intended” versus what it actually did. The result is a governance vacuum where human oversight is either absent or added too late in the loop.

Mistake #1: No Access Control on No-Code and Internal Dashboards

The democratization of app building has empowered teams to build internal tools quickly, but often without security in mind. Tools like Airtable, Retool, and Notion are widely used to manage workflows, datasets, and decision logic.

The risk: Without enforced access control, these platforms can expose critical data and business logic to users who shouldn’t have access.

Case in point: In 2023, an Airtable base used by a U.S. healthcare nonprofit was left publicly accessible due to default sharing settings. The dataset included names, appointment data, and sensitive health information. The breach stemmed not from hacking, but from unchecked permissions.

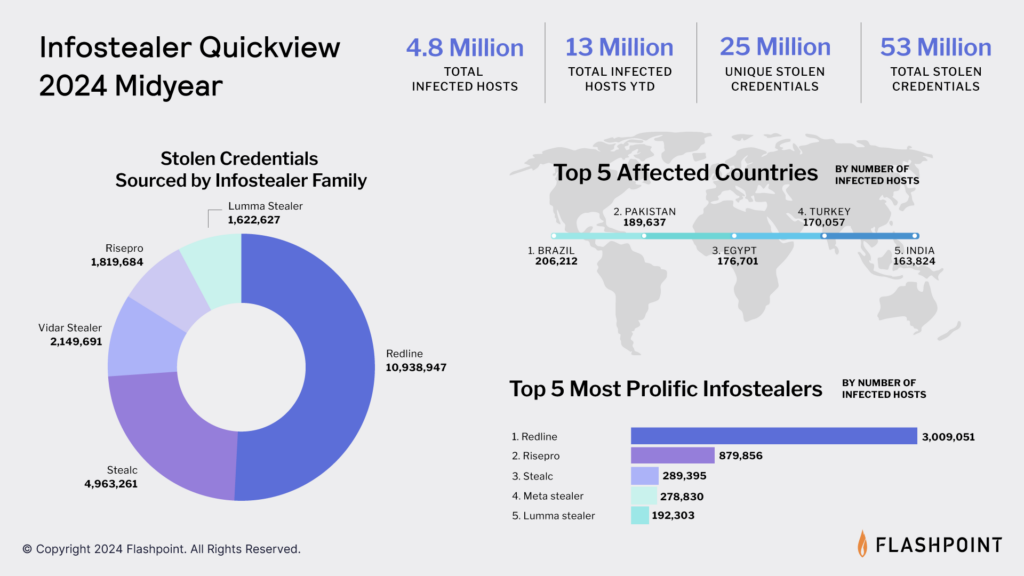

Diagram comparing effective access control vs. broken access control (Source: Cheap SSL Certificates)

This is a classic example of broken access control, the top-ranked risk (A01) in the OWASP Top 10 2021 and one that often goes unnoticed in internal apps. Without centralized policy enforcement, internal tools can grant far more access than intended, exposing systems to insider misuse or credential-based attacks.

Gartner predicts that by 2026, 75% of enterprises will use no-code platforms, but over 60% will lack centralized access governance.

Mitigation:

Every internal tool, no matter how small, should adhere to Role-Based Access Control (RBAC), integrate with company SSO, and restrict edit rights by default.
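A minimal sketch of that policy, assuming the SSO provider passes the user's group memberships to the tool: permissions come only from an explicit group-to-role map, and anything unrecognized is denied. The group names and the three-level permission model are hypothetical placeholders.

```python
from enum import Enum


class Permission(Enum):
    VIEW = "view"
    EDIT = "edit"
    ADMIN = "admin"


# Hypothetical mapping from SSO group names to the permissions each group grants.
ROLE_PERMISSIONS: dict[str, set[Permission]] = {
    "ops-viewers": {Permission.VIEW},
    "ops-editors": {Permission.VIEW, Permission.EDIT},
    "ops-admins": {Permission.VIEW, Permission.EDIT, Permission.ADMIN},
}


def is_allowed(sso_groups: set[str], needed: Permission) -> bool:
    """Deny by default: allow only if a group asserted by SSO maps to `needed`."""
    granted: set[Permission] = set()
    for group in sso_groups:
        # Unknown groups contribute nothing; there is no fallback "default" role.
        granted |= ROLE_PERMISSIONS.get(group, set())
    return needed in granted
```

Because there is no fallback role, a user whose groups are not in `ROLE_PERMISSIONS` gets no access at all, and edit rights have to be granted deliberately through a group rather than inherited from a default.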

Mistake #2: Letting AI Agents Act Without Observability

As companies adopt internal AI agents to write code, respond to queries, or automate workflows, they enter uncharted territory. These agents can manipulate files, change configs, or initiate actions—often without clear boundaries.

The risk: AI agents may hallucinate dangerous outputs, leak sensitive data via responses, or act beyond their intended scope if not strictly governed.

Case: A fintech firm using GitHub Copilot for internal DevOps discovered that the AI was suggesting code snippets containing hardcoded credentials, likely derived from previously exposed training data. These suggestions were deployed into staging, where the secrets remained exposed until a manual audit caught them.

Microsoft’s 2024 report found that 12% of AI-generated internal changes led to misconfigurations or permission issues.

Mitigation:

Apply the principle of least privilege to AI agents. Require logging for every actionable output. Ensure human review before agents execute any sensitive action.
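These three controls can live in one choke point that every agent-proposed action must pass through. The sketch below illustrates the idea under stated assumptions and is not any agent framework's API: `AgentPolicy`, `execute`, the `approve` callback (standing in for a human review step), and the action names are all hypothetical.

```python
import json
import logging
from dataclasses import dataclass, field
from typing import Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("agent.audit")


@dataclass
class AgentPolicy:
    """Hypothetical per-agent policy: what the agent may do, and what needs a human."""
    allowed_actions: set[str]
    needs_approval: set[str] = field(default_factory=set)


class ActionDenied(Exception):
    """Raised when policy or a human reviewer blocks an agent action."""


def execute(agent_id: str, action: str, params: dict, policy: AgentPolicy,
            approve: Callable[[str, dict], bool],
            handlers: dict[str, Callable[[dict], object]]) -> object:
    """Run an agent-proposed action only if policy allows it; log every decision."""
    record = {"agent": agent_id, "action": action, "params": params}
    # Least privilege: anything not explicitly allowed is refused.
    if action not in policy.allowed_actions:
        audit_log.warning(json.dumps({**record, "decision": "denied"}))
        raise ActionDenied(f"{agent_id} is not permitted to {action}")
    # Sensitive actions require an explicit human yes before running.
    if action in policy.needs_approval and not approve(action, params):
        audit_log.warning(json.dumps({**record, "decision": "rejected_by_human"}))
        raise ActionDenied(f"human reviewer rejected {action}")
    audit_log.info(json.dumps({**record, "decision": "executed"}))
    return handlers[action](params)
```

With `AgentPolicy(allowed_actions={"read_report", "disable_account"}, needs_approval={"disable_account"})`, an agent can read reports freely, can disable an account only after a reviewer approves, and is refused anything else; every attempt, allowed or not, leaves a JSON audit record.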


Mistake #3: Ignoring the Scope and Scale of Shadow IT

As departments move quickly, they often deploy their own SaaS tools, integrate third-party plugins, or create automations without IT’s knowledge. This decentralized build culture introduces critical blind spots.

The risk: These tools operate without patching schedules, security monitoring, or access reviews—and they often store sensitive data.

Case: Employees at the Canada Revenue Agency once used an unvetted SaaS tool to process claims faster. That tool was later found to contain malware, entering the network via an unmonitored integration.

Cisco’s 2023 Shadow IT study revealed that over 80% of enterprise cloud app usage is unsanctioned.

Mitigation:

Use SaaS discovery tools or CASB solutions to surface unknown tools. Establish a policy that encourages transparency and provides vetted, secure internal tooling alternatives.
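CASBs and SaaS discovery products do this at scale, but the core idea is simple: compare the destinations people actually reach against a list of vetted services. This sketch assumes a hypothetical proxy log exported as `user host` lines and a hand-maintained `SANCTIONED` allowlist; its domain handling is deliberately naive.

```python
from collections import Counter

# Hypothetical allowlist of vetted SaaS domains.
SANCTIONED = {"slack.com", "google.com", "salesforce.com"}


def registrable_domain(host: str) -> str:
    """Naively keep the last two labels (does not handle suffixes like co.uk)."""
    parts = host.lower().rstrip(".").split(".")
    return ".".join(parts[-2:])


def unsanctioned_usage(log_lines: list[str]) -> Counter:
    """Count distinct users per unsanctioned domain from 'user host' log lines."""
    users_by_domain: dict[str, set[str]] = {}
    for line in log_lines:
        fields = line.split()
        if len(fields) != 2:
            continue  # skip malformed lines
        user, host = fields
        domain = registrable_domain(host)
        if domain not in SANCTIONED:
            users_by_domain.setdefault(domain, set()).add(user)
    return Counter({d: len(users) for d, users in users_by_domain.items()})
```

Ranking by distinct users rather than raw request counts helps surface tools that a whole team has quietly adopted, which are the best candidates for a vetted replacement.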

Mistake #4: Missing Audit Trails on Internal Systems of Record

Shadow IT isn’t just rogue behavior; it’s now the default in fast-moving teams. When procurement cycles are slow and internal tooling feels restrictive, employees turn to SaaS platforms, browser extensions, AI plugins, and low-code tools to get work done. Most of it flies under IT’s radar, leaving no audit trail that security teams can review.

What It Looks Like:

- A marketing team links Google Sheets to an unvetted automation tool to handle lead enrichment.

- A customer success manager builds a Retool app to manage refund approvals without logging or review.

- A finance team exports customer data into a third-party budgeting dashboard hosted overseas.

These tools don’t go through security onboarding. They often store or access real data, but lack patching schedules, authentication controls, or internal visibility.

Why It Matters:

- Attackers don’t distinguish between sanctioned and shadow tools; they exploit whatever has weak security.

Cisco’s 2023 Security Report found that 80% of enterprise cloud usage occurs through apps not approved by IT, exposing sensitive workflows to third-party risk.

Real-World Case:

At the Canada Revenue Agency, employees adopted an external SaaS tool to streamline claims processing. The software, unapproved and unmonitored, was later found to contain malware, compromising internal systems through an unmanaged integration point.

What to Do:

- Deploy SaaS discovery tools or CASBs to detect unsanctioned apps.

- Regularly reconcile internal workflows with tool usage logs to surface hidden dependencies.

- Most importantly, shift from punishment to enablement: provide secure, vetted alternatives that match team needs before shadow tools proliferate.
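The refund-approval app described above is exactly the kind of system of record that needs an audit trail. One lightweight pattern is a hash-chained, append-only log, where each entry includes the hash of the one before it, so edits to history become detectable. This is a sketch: the `AuditTrail` class and its event fields are hypothetical, and a real deployment would persist entries to write-once storage or a SIEM rather than keep them in memory.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the event."""
    payload = prev_hash + json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log.

    Editing or removing any entry that has a successor breaks verification;
    truncating the newest entries does not, so anchor the latest hash elsewhere.
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, target: str) -> dict:
        event = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "target": target,
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"event": event, "prev": prev, "hash": _digest(prev, event)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain from the start and check every link."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True
```

Chaining makes tampering with earlier entries detectable, but not deletion of the newest ones; periodically copying the latest hash to a separate system closes that gap.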

In 2025, the real risk isn’t that people are bypassing process; it’s that security hasn’t kept pace with how teams work. Shadow IT isn’t just unapproved apps. It’s every login outside the visibility of SSO and every team-level automation that no one reviews.

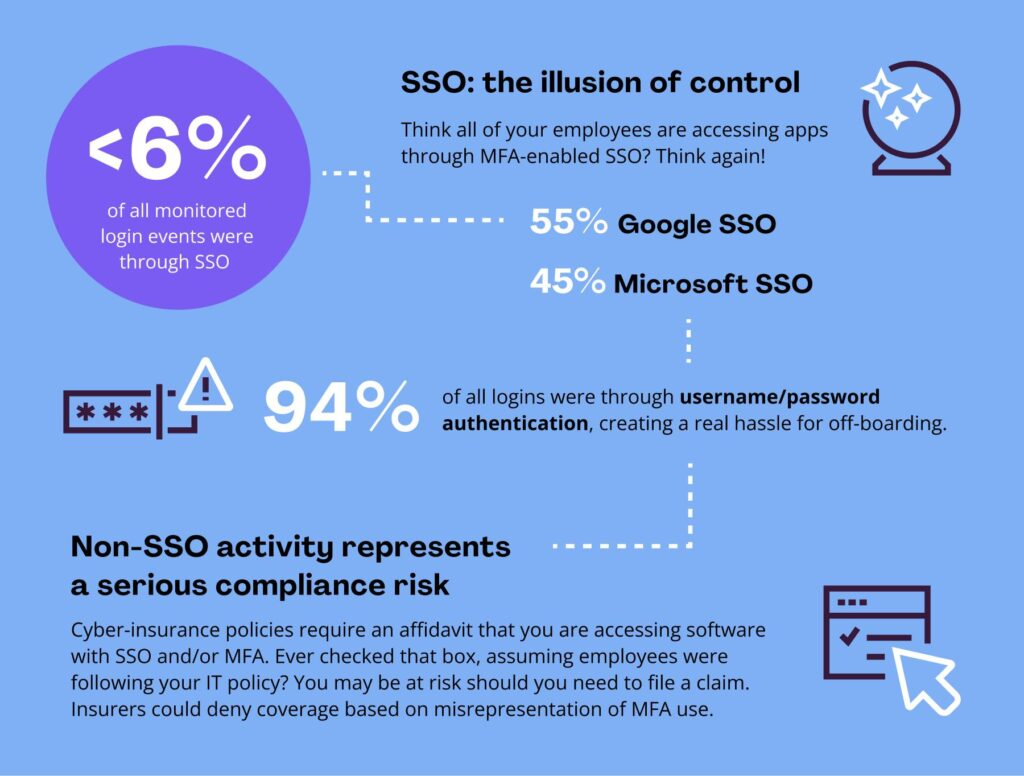

94% of logins still bypass SSO, creating blind spots and compliance risk (Source: Auvik Networks)

According to Push Security, 94% of logins still rely on basic username/password authentication and fewer than 6% go through managed SSO, creating massive gaps in offboarding, visibility, and insurance compliance. This underscores how easily internal tools, especially unsanctioned ones, become invisible threats.
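Teams can measure this gap in their own environment before buying tooling. The sketch below assumes login events have already been exported from an identity provider or app logs as dictionaries with hypothetical `app` and `method` fields, where `method` is `"sso"` for managed sign-ins.

```python
from collections import defaultdict


def sso_coverage(events: list[dict]) -> dict[str, float]:
    """Return, per app, the fraction of login events that went through managed SSO."""
    totals: dict[str, int] = defaultdict(int)
    via_sso: dict[str, int] = defaultdict(int)
    for e in events:
        totals[e["app"]] += 1
        if e["method"] == "sso":
            via_sso[e["app"]] += 1
    return {app: via_sso[app] / n for app, n in totals.items()}


def apps_bypassing_sso(events: list[dict], threshold: float = 1.0) -> list[str]:
    """List apps whose SSO coverage is below `threshold` (default: any non-SSO login)."""
    return sorted(app for app, share in sso_coverage(events).items() if share < threshold)
```

Any app returned by `apps_bypassing_sso` likely has at least one login path that offboarding through the identity provider will not close.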

Mistake #5: Treating Internal Dev Tools as Outside the Threat Model

Security strategies often center on production systems and customer data, and rightly so. But the tools developers use to build, test, and monitor those systems are frequently excluded from the same rigor. CI/CD dashboards, observability platforms, internal staging environments, and developer admin panels are treated as “internal conveniences,” not critical assets.

That assumption creates blind spots. These internal tools often have elevated permissions, service account tokens, hardcoded secrets, or unprotected APIs, all highly attractive to attackers seeking lateral movement opportunities.

Security isn’t just about what you protect from the outside; it’s about what you unintentionally expose from the inside.

Real-World Breakdown:

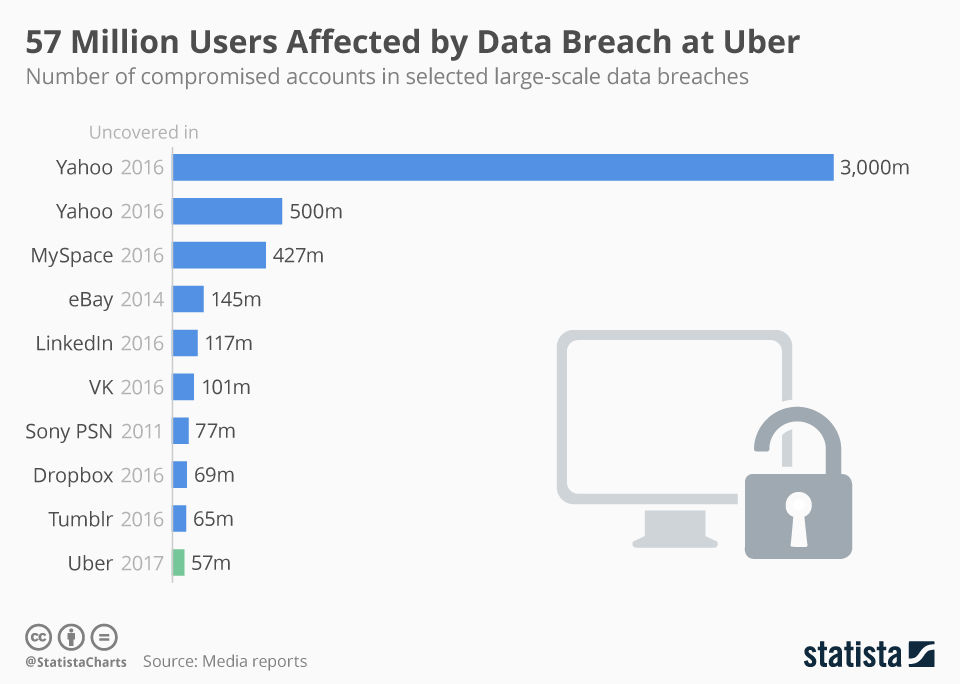

In Uber’s 2022 breach, attackers gained initial access via social engineering and then exploited an internal monitoring dashboard that lacked MFA and proper access restrictions. From there, they moved laterally into sensitive cloud environments. The compromise wasn’t the result of a zero-day — it was enabled by unreviewed access paths in overlooked tools.

Uber’s 57M-user data breach alongside other major global breaches (Source: Statista, 2017)

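Uber’s earlier breach, which occurred in 2016 and was disclosed in 2017, exposed the personal data of 57 million riders and drivers, including names, email addresses, and phone numbers. It too started inside the engineering toolchain rather than on an external attack surface: attackers found cloud credentials in a private code repository used by Uber engineers and used them to reach stored user data.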

Uber’s case underscores the strategic risk of underestimating internal developer tools: their reach often exceeds their perceived sensitivity.

Why This Happens:

- Internal tools are often deployed fast, owned by infra teams, and left outside formal AppSec coverage.

- Role scoping is minimal: developers default to admin, and service accounts remain unrestricted.

- Tools aren’t regularly tested for abuse paths because they’re “not customer-facing.”

What to Do Instead:

- Enforce MFA and role-based access across all internal developer tools, even in staging.

- Isolate CI/CD, observability, and configuration systems in separate network segments.

- Extend DevSecOps coverage to include internal platform reviews, not just app scans.

- Treat internal tools as you would APIs: version-controlled, authenticated, auditable.
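Hardcoded secrets, listed above among the common weaknesses of internal developer tools, are one of the easiest issues to catch automatically in CI or a pre-commit hook. Dedicated scanners such as gitleaks or TruffleHog ship large, tuned rule sets; the sketch below only illustrates the idea with two example patterns: the `AKIA` prefix format of long-term AWS access key IDs and a generic heuristic for quoted secrets assigned to password- or token-like names. The rule names and `main` entry point are hypothetical.

```python
import re
from pathlib import Path

# Illustrative patterns only; real scanners use many tuned rules plus entropy checks.
PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Heuristic: a password/secret/token-like name assigned a quoted literal of 8+ chars.
    "hardcoded_secret": re.compile(
        r"""(?i)\b\w*(password|passwd|secret|api_key|token)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for each line that matches a pattern."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


def main(paths: list[str]) -> int:
    """Scan files; return 1 if anything matched (suitable as a CI exit code), else 0."""
    found = False
    for p in paths:
        text = Path(p).read_text(encoding="utf-8", errors="ignore")
        for lineno, name in scan_text(text):
            print(f"{p}:{lineno}: possible {name}")
            found = True
    return 1 if found else 0
```

A hook script could call `sys.exit(main(sys.argv[1:]))` so that any match fails the commit. Expect false positives from the heuristic rule; tune it or allowlist known values rather than disabling the check.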

Developer experience should never come at the cost of enterprise security posture. In a lateral movement scenario, an unsecured staging dashboard is as dangerous as an exposed API.

Conclusion: Don’t Let Internal Innovation Become a Liability

Internal tools have become core to how modern companies operate, from no-code dashboards and AI agents to developer pipelines. But in too many organizations, they remain unseen by security, unmanaged by policy, and underestimated in risk.

The mistakes outlined here aren’t just technical oversights; they’re structural blind spots. Tools with privileged access are being deployed without access control, audit trails, or visibility. And attackers are taking notice.

If a tool can act, it must be governed. If it stores or routes data, it must be secured. In 2025, internal tool security is no longer optional infrastructure hygiene; it’s business continuity.

Explore more secure automation strategies at Twendee Labs or connect directly on LinkedIn and X.