In the age of widespread artificial intelligence adoption, enterprise leaders often face a critical question: should they rely on human-in-the-loop AI or opt for automated AI systems? This choice fundamentally determines how much human control is built into AI-driven processes, and getting the balance right is vital for effective AI governance and AI risk management. On one hand, fully automated AI promises speed and efficiency; on the other, human-in-the-loop AI offers oversight and accountability to mitigate risks.

This blog post explores the differences between these approaches, their pros and cons, and why a balanced strategy can help businesses harness AI’s potential while avoiding pitfalls.

Understanding Human-in-the-Loop vs. Fully Automated AI

A human-in-the-loop (HITL) AI system is one where human oversight or intervention is an essential part of the process. Rather than leaving all decisions to machines, HITL ensures people remain involved – supervising outputs, providing input or corrections, and making final decisions when necessary. The “loop” can take many forms, from humans labeling training data and tuning models to experts reviewing AI outputs in real time and intervening in ambiguous or high-stakes cases. In short, human-in-the-loop AI combines the strengths of humans and machines to create smarter, more reliable outcomes.

In contrast, a fully automated AI system operates with no human intervention once it’s deployed. These systems are designed to run independently, detecting situations, making decisions, and taking actions on their own. Because they never wait for a person, fully automated systems can process information and execute tasks far faster than any process that involves human review, which makes them highly efficient and scalable across large datasets or user bases. However, by removing human oversight entirely, fully automated systems can be rigid and “context-blind”: they may not adapt well to novel or complex scenarios and can make high-impact mistakes when faced with situations they weren’t trained for.
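To make the distinction concrete, here is a minimal sketch of one common human-in-the-loop pattern, confidence-threshold routing: the model acts alone when it is confident and hands the case to a person when it is not. The `Prediction` type, the `route` function, and the 0.9 threshold are hypothetical illustrations rather than any specific product’s API, and real systems may also route on other signals such as transaction value or policy rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    label: str         # what the model decided, e.g. "fraud"
    confidence: float  # model's score for that label, in [0, 1]


def route(pred: Prediction, threshold: Optional[float]) -> str:
    """Decide who makes the final call on a single prediction.

    threshold=None models a fully automated system: every prediction
    is acted on directly. Otherwise this is human-in-the-loop routing:
    predictions below the threshold go to a human review queue.
    """
    if threshold is None:
        return "auto"
    return "auto" if pred.confidence >= threshold else "human_review"


preds = [Prediction("fraud", 0.97), Prediction("fraud", 0.62), Prediction("ok", 0.88)]
print([route(p, None) for p in preds])  # ['auto', 'auto', 'auto']
print([route(p, 0.9) for p in preds])   # ['auto', 'human_review', 'human_review']
```

The threshold is the dial between the two approaches: `None` is full automation, and raising the value sends more cases to people.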

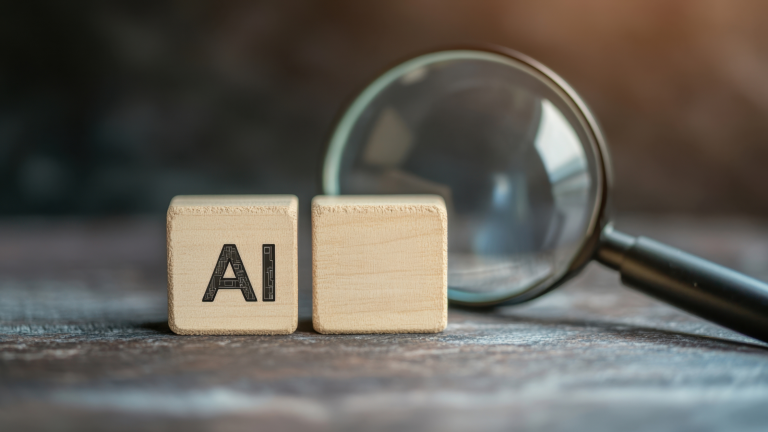

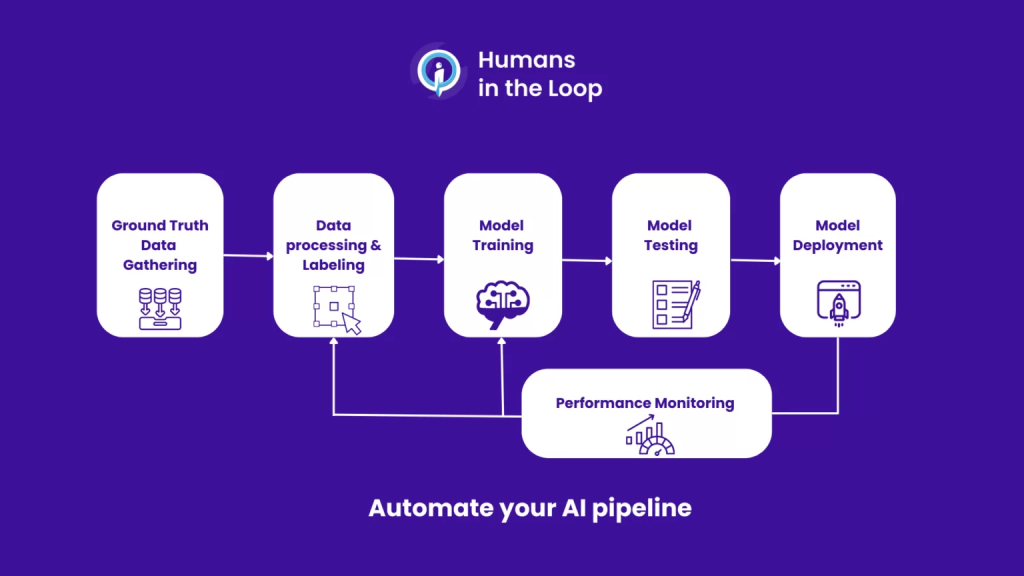

Human-in-the-loop AI pipeline illustrating how human oversight is embedded across data collection, labeling, model training, testing, deployment, and continuous performance monitoring to ensure reliability and control (Source: Scale AI)

Key Differences Between Human-in-the-Loop and Fully Automated AI

To illustrate the boundary between human-controlled and fully automated AI in business, here are some key points of comparison:

- Speed and Efficiency: Fully automated AI systems can operate and make decisions much faster than human-in-the-loop systems, which must pause for human review or input. Automation enables rapid, 24/7 processing of data and tasks, whereas involving humans can introduce delays and slower throughput. However, speed isn’t everything – a slightly slower process may be acceptable if decisions require careful deliberation.

- Scalability: Automated AI solutions scale readily across large volumes of data or transactions because they aren’t limited by human capacity. Human-in-the-loop approaches, by contrast, are constrained by human resources; adding more volume often means hiring or assigning more people, which can become a bottleneck. For instance, a fully automated customer service chatbot might handle thousands of queries simultaneously, whereas a system that routes every reply through human review would need a large team of reviewers to match that scale.

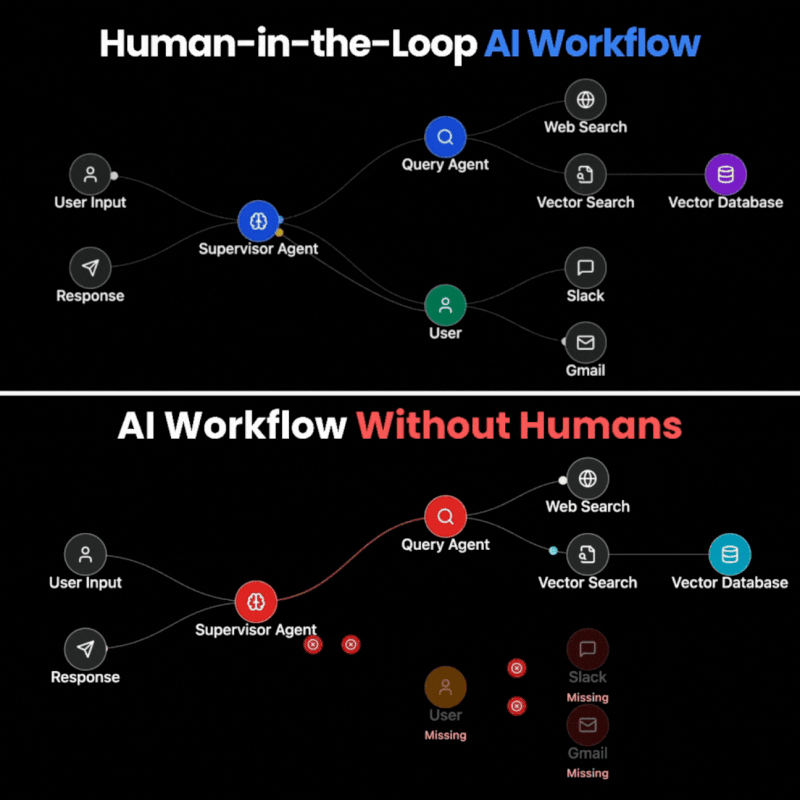

Side-by-side comparison of an AI workflow with human-in-the-loop oversight versus a fully automated AI workflow, highlighting how human supervision ensures decision validation, contextual judgment, and operational continuity across search, communication, and response layers (Source: LangGraph)

- Operational Cost: Fully automated systems may involve high up-front investment in development and infrastructure, but they have lower ongoing labor costs since minimal staff are required to run them. Human-in-the-loop AI incurs continuous personnel costs, as experts or reviewers must be paid to oversee the AI’s decisions. Businesses must weigh these cost differences when deciding on AI implementation.

- Risk Management and Error Handling: Human-in-the-loop AI provides stronger risk mitigation in critical areas because humans can catch and correct errors or bad decisions before they cause harm. If an AI system is about to make a questionable call (for example, flagging a benign transaction as fraud), a human reviewer can intervene. Fully automated AI lacks this safety net; if it encounters an edge case or makes a wrong judgment, it might fail silently or cause an incident without immediate detection. This means fully automated systems carry a higher risk of unmitigated errors in complex or high-stakes operations.

- Accountability and Transparency: With human involvement, there is a clear line of accountability – a person has validated or made each key decision. This is crucial for compliance and governance. Human reviewers can also provide explanations for why a decision was made, improving transparency. In a fully automated system, decisions may be made by a “black box” algorithm with little explanation, making it harder to trace responsibility if something goes wrong.

- Bias and Ethical Considerations: AI systems can inadvertently learn biases from data and produce unfair outcomes. A human-in-the-loop approach adds a check for ethical issues – people can notice if an AI’s outputs seem biased or misaligned with company values and intervene or adjust the model. Without human oversight, an automated AI might reinforce biases unchecked, leading to discrimination or compliance violations. Human oversight is therefore an important tool for maintaining AI ethics and public trust.
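The scalability and cost points above come down to simple arithmetic. The back-of-the-envelope sketch below estimates how many reviewers a human-in-the-loop queue needs to keep pace; the function name, the 7-hour productive review day, and the example volumes are illustrative assumptions, not figures from this post.

```python
import math


def reviewers_needed(daily_items: int, review_fraction: float,
                     minutes_per_review: float,
                     minutes_per_reviewer_day: float = 420.0) -> int:
    """Estimate headcount for a human review queue.

    daily_items: total AI decisions per day
    review_fraction: share of decisions routed to humans (0 = fully automated)
    minutes_per_review: average human time spent per reviewed item
    minutes_per_reviewer_day: productive review minutes per person per day
        (420 = 7 hours, an assumed figure)
    """
    review_minutes = daily_items * review_fraction * minutes_per_review
    # Round up: a partial reviewer still means hiring a whole person.
    return math.ceil(review_minutes / minutes_per_reviewer_day)


print(reviewers_needed(10_000, 1.0, 2))  # 48 (review everything)
print(reviewers_needed(10_000, 0.1, 2))  # 5  (review only 10%)
print(reviewers_needed(10_000, 0.0, 2))  # 0  (fully automated)
```

Under these made-up numbers, reviewing all 10,000 daily decisions takes roughly ten times the staff of reviewing a targeted 10%, which is why many teams reserve human review for the cases that carry the most risk.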

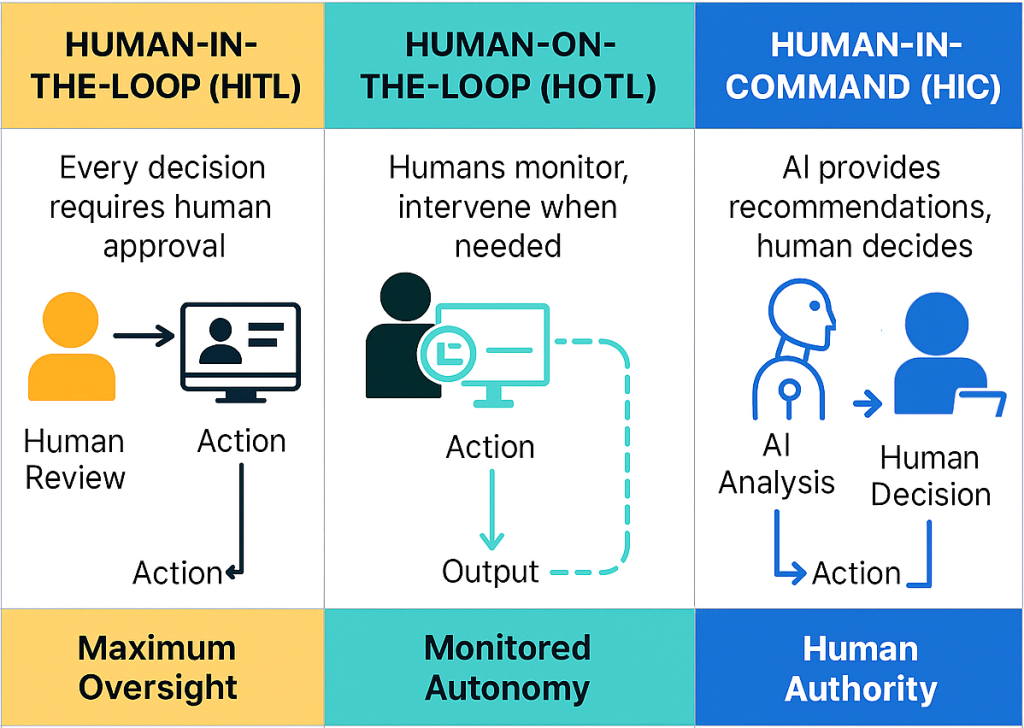

Comparison of human oversight models in AI systems, illustrating the differences between Human-in-the-Loop (HITL), Human-on-the-Loop (HOTL), and Human-in-Command (HIC) in terms of decision authority, autonomy, and operational control (Source: IBM Research)

AI Governance and Risk Management in Enterprise AI

The choice between human-in-the-loop and full automation is not just a technical matter – it’s fundamentally about governance and risk management. Companies must ensure their AI systems are effective and accountable. In fact, regulations are beginning to mandate human oversight for certain AI applications.

For example, the European Union’s AI Act (adopted in 2024) requires that high-risk AI systems be designed to allow effective human oversight, echoing a principle already found in the GDPR, which gives individuals the right not to be subject to decisions based solely on automated processing when those decisions produce legal or similarly significant effects.

Beyond compliance, there are strong business reasons to maintain human oversight:

- Preventing Bias and Liability: AI models can produce biased or unfair outcomes that tarnish an organization’s reputation and lead to legal trouble. A biased AI decision that goes uncorrected might violate anti-discrimination laws, resulting in lawsuits or regulatory fines. Human oversight adds a checkpoint to catch such issues early.

- Maintaining Trust and Transparency: For AI to be embraced by employees, customers, and partners, its decisions need to be explainable and trustworthy. Human-in-the-loop processes help provide context and explanations for AI outputs, making it easier to justify decisions to stakeholders or auditors. In contrast, a fully automated “black box” system that cannot explain its reasoning can erode confidence among users and decision-makers.

- Safety in High-Stakes Scenarios: In fields like healthcare, finance, or transportation, a single AI mistake can have serious consequences. Having a human in the loop acts as a fail-safe. It’s no surprise that in high-stakes domains, organizations keep humans involved to double-check AI-driven actions for safety and accuracy. This oversight can prevent costly errors such as misdiagnoses, faulty financial trades, or unsafe machine behaviors.
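One way to give the bias checkpoint teeth is to run a simple fairness screen over recent AI decisions and escalate anything suspicious to a person. The sketch below borrows the “four-fifths” heuristic from US employment-selection guidance (flag any group whose approval rate is below 80% of the highest group’s rate). The function names and sample data are hypothetical, and a real bias review would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def groups_to_escalate(decisions, min_ratio=0.8):
    """Groups whose approval rate is below min_ratio times the highest rate.

    min_ratio=0.8 mirrors the four-fifths heuristic. Anything returned
    here should go to a human for investigation, not be "fixed" automatically.
    """
    rates = selection_rates(decisions)
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []
    return sorted(g for g, r in rates.items() if r / best < min_ratio)


sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(selection_rates(sample))     # {'A': 0.8, 'B': 0.5}
print(groups_to_escalate(sample))  # ['B']
```

The check only raises a flag; deciding whether the gap reflects genuine bias is left to the human reviewer, which is the point of keeping a person in the loop.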

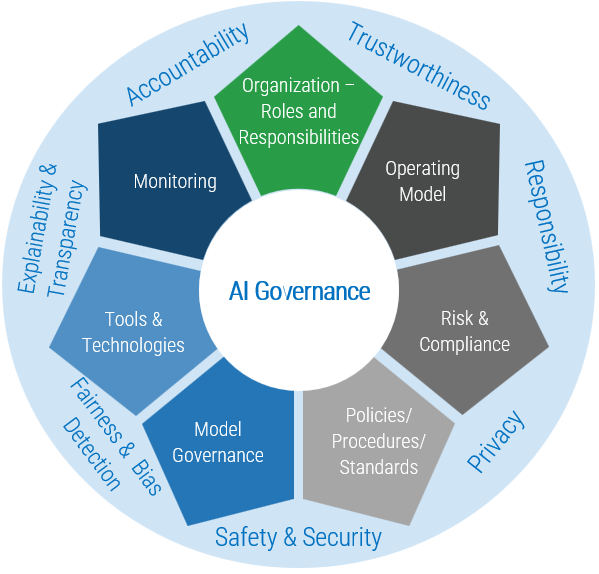

Core components of an enterprise AI governance framework, showing how accountability, trustworthiness, risk management, privacy, safety, transparency and bias detection are structured across organizational roles, operating models, policies, monitoring, and model governance (Source: Adapted from Deloitte)

From a governance perspective, companies are increasingly formalizing how they control AI use. Many executives see AI’s huge potential but also acknowledge gaps in oversight. One survey found 42% of corporate board directors see AI as a way to optimize operations, yet 32% worry their organizations lack the internal AI expertise to use it safely. This reflects a cautious approach: leadership recognizes the need for AI, but also the need for proper controls to manage its risks.

To enforce accountability, organizations are adopting AI governance frameworks that define clear oversight processes, validation protocols, and ethical guidelines for AI initiatives. Experts advise establishing these governance measures, including data quality standards and human review checkpoints, before deploying AI at scale. In practice, this could mean setting up an AI ethics committee, requiring human sign-off on certain AI decisions, and conducting regular audits of AI outcomes. Establishing these guardrails early ensures AI systems remain aligned with the company’s values and risk appetite as they scale.
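A rule such as “human sign-off on certain AI decisions,” combined with an audit trail, can be enforced directly in code. The following is a minimal sketch under assumed names: the `SIGNOFF_REQUIRED` policy set, the decision types, and the `finalize` function are hypothetical, and a production system would persist the log to durable storage rather than keep it in a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical policy: decision types that must never be finalized
# without a named human approver.
SIGNOFF_REQUIRED = {"loan_denial", "account_closure"}


@dataclass
class AuditRecord:
    decision_id: str
    decision_type: str
    ai_recommendation: str
    final_decision: str
    approved_by: Optional[str]  # None means finalized without human sign-off
    timestamp: str              # UTC, ISO 8601


def finalize(decision_id: str, decision_type: str, ai_recommendation: str,
             audit_log: List[AuditRecord], reviewer: Optional[str] = None,
             reviewer_decision: Optional[str] = None) -> AuditRecord:
    """Enforce the sign-off policy, then record who decided what."""
    approver = None
    final = ai_recommendation
    if decision_type in SIGNOFF_REQUIRED:
        if reviewer is None or reviewer_decision is None:
            raise PermissionError(f"{decision_type} requires human sign-off")
        approver = reviewer
        final = reviewer_decision  # the reviewer may accept or override the AI
    record = AuditRecord(decision_id, decision_type, ai_recommendation, final,
                         approver, datetime.now(timezone.utc).isoformat())
    audit_log.append(record)
    return record


log: List[AuditRecord] = []
finalize("d1", "marketing_email", "send", log)
finalize("d2", "loan_denial", "deny", log, reviewer="j.doe", reviewer_decision="approve")
print([(r.decision_id, r.final_decision, r.approved_by) for r in log])
# [('d1', 'send', None), ('d2', 'approve', 'j.doe')]
```

Because every record stores both the AI’s recommendation and the final outcome, a periodic audit can measure how often reviewers override the model.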

Another best practice is to start with human-in-the-loop implementations and gradually increase automation once trust is established. Many successful enterprises begin with AI that assists or augments human workers, rather than automating entire processes on day one. As the AI proves its accuracy and reliability, they then expand its autonomy. This phased approach lets the organization build confidence in the technology and refine the AI with human feedback before scaling it further.
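The phased approach can be expressed as a simple promotion rule: automation expands only after the AI has agreed with human reviewers on enough cases. The sketch below assumes a confidence threshold above which decisions run without review; the function names, the 98% agreement target, the 500-case minimum, and the 0.05 step are illustrative assumptions, not recommendations from this post.

```python
def agreement_rate(pairs):
    """Share of reviewed items where the AI's label matched the human's."""
    if not pairs:
        return 0.0
    return sum(ai == human for ai, human in pairs) / len(pairs)


def next_threshold(current, pairs, target=0.98, min_samples=500, step=0.05, floor=0.5):
    """Adjust the confidence threshold above which decisions skip review.

    Lowering the threshold automates more decisions, so it only happens
    once the AI has matched human reviewers at or above `target` on at
    least `min_samples` reviewed cases. If agreement falls short, the
    threshold moves back up and more work returns to humans.
    """
    if len(pairs) < min_samples:
        return current  # not enough evidence yet; keep the current setting
    if agreement_rate(pairs) >= target:
        return max(floor, round(current - step, 2))
    return min(1.0, round(current + step, 2))


good = [("ok", "ok")] * 990 + [("ok", "fraud")] * 10   # 99% agreement
weak = [("ok", "ok")] * 900 + [("ok", "fraud")] * 100  # 90% agreement
print(next_threshold(0.9, good))        # 0.85
print(next_threshold(0.9, weak))        # 0.95
print(next_threshold(0.9, good[:100]))  # 0.9
```

The `floor` parameter keeps some cases flowing to humans no matter how well the model performs, so reviewers continue to generate the feedback the rule depends on.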

By balancing innovation with control, companies can avoid both extremes: reckless automation and over-reliance on manual processes. The goal is to let AI deliver business value (greater efficiency, sharper insights, and better customer experiences) without exposing the enterprise to undue risk. Achieving that goal means carefully deciding where humans must stay in the loop and where automation can be allowed to run on its own.

Conclusion

For organizations looking to leverage AI without compromising oversight, partnering with the right experts is key. Twendee specializes in implementing AI solutions with a human-in-the-loop philosophy and strong governance measures. In practice, we deliver controlled AI systems, ensuring that any automation is introduced responsibly, transparently, and in alignment with your risk management needs.

By embedding human oversight and explainability into AI projects, Twendee’s approach prioritizes trust and compliance. This reduces operational risks (like costly errors or downtime) and legal risks (such as regulatory non-compliance or liability issues) for our clients. With our support, companies can confidently innovate using AI, knowing that proper checks and balances are in place.

If your organization is seeking the ideal balance between automation and human control in its AI initiatives, Twendee can help. We tailor our AI and software solutions to your business needs – accelerating your digital transformation while safeguarding against AI-related risks. Contact us today to explore how you can harness the power of AI with the governance and peace of mind your enterprise demands.

