AI continues to reshape customer-facing products and redefine what is possible in enterprise software. Yet amid the race to market, many AI teams overlook a foundational test of their tools: internal usage.

Internal AI adoption is more than a quality check. It is the most direct and revealing measure of whether an AI product is usable, trustworthy, and mature. If those building AI systems cannot rely on them in their own workflows, why should external users?

This article explores how internal AI usage provides an essential proving ground. Teams that apply AI internally gain a deeper understanding of its limitations, refine functionality through direct feedback, and build trust from the inside out.

Internal AI Adoption Reveals Real-World Friction Early

The concept of “eating your own dog food” remains essential in AI development. Products built for others but never used internally often suffer from misaligned features, unclear UX, and brittle performance under pressure.

Data from ESI ThoughtLab’s Driving ROI Through AI study, which surveyed 1,200 organizations, shows that firms further along in AI maturity reported roughly 20 times higher ROI (4.3% vs. 0.2%) and achieved payback on their AI investments in 1.2 years, versus 1.6 years for less mature firms. This ROI differential is consistent with a clear pattern: internal deployment is not just a best practice but a performance multiplier.

Real-world cases reinforce this insight.

- Companies like Atlassian and Notion actively trial their AI tools across internal teams before public launch. For example, Notion’s AI assistant was used internally to refine tone detection, task parsing, and versioning logic before shipping externally.

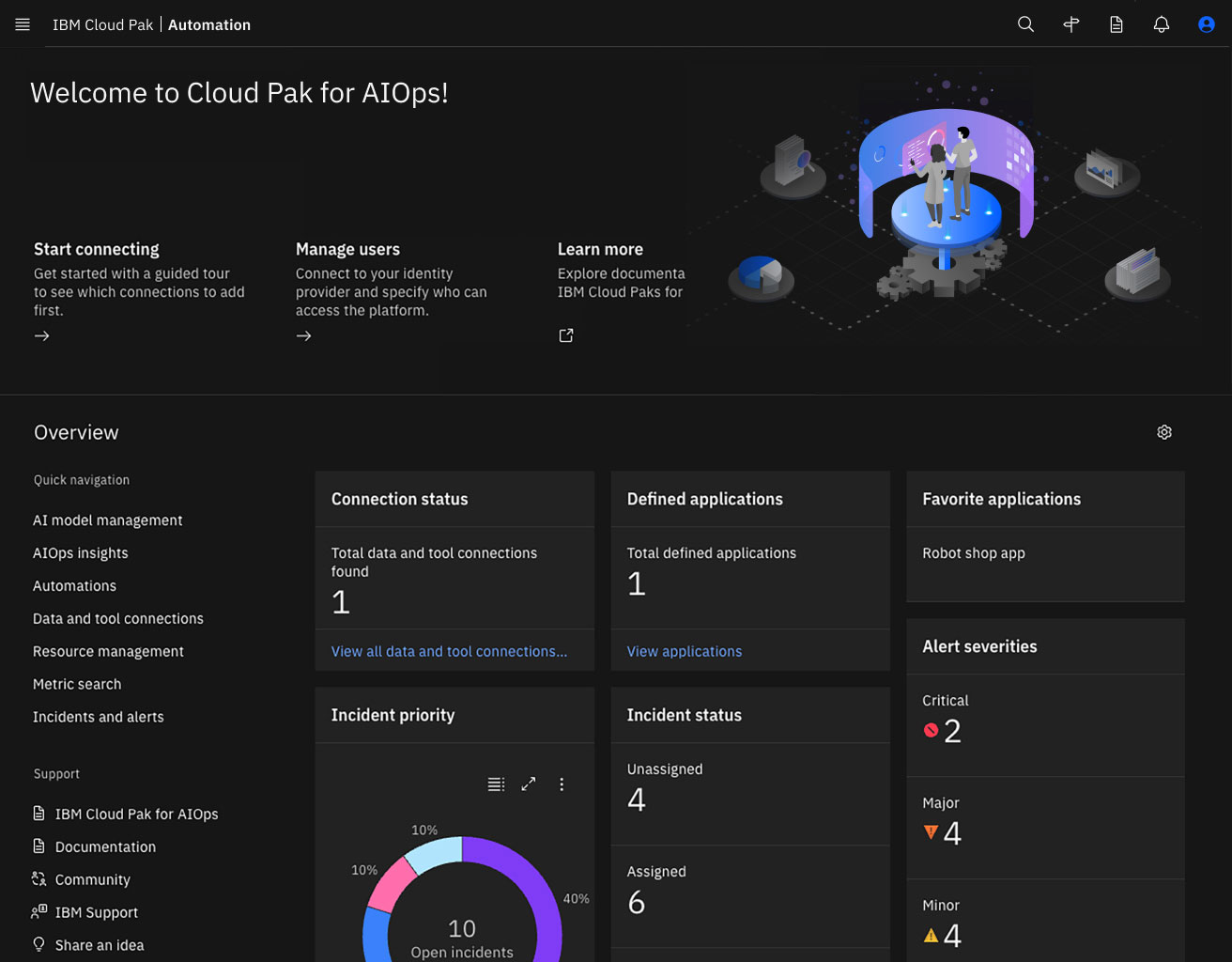

- IBM’s AIOps tools were piloted within IBM’s own DevOps teams. The pilot surfaced alert-fatigue problems and led to a confidence-scoring layer, which became a key feature of the public release.

IBM Cloud Pak for AIOps dashboard showing incident status and alert severities. (Source: IBM)

These internal use cycles allow tighter feedback loops between builders and users, accelerating refinement and reducing the risk of feature-driven launches built on unvalidated assumptions.

Internal AI adoption is not just practical; it is backed by data and strategically essential.


Why Internal Effectiveness Is the Prerequisite for External AI Trust

Before AI tools can deliver value to customers, they must first prove they work inside the organization that built them. This is not just about testing for bugs. It is about showing that the system integrates, adapts, and improves outcomes under real-world conditions, starting with the team closest to the problem.

There are three reasons internal effectiveness must come first:

1. It forces better design under real conditions

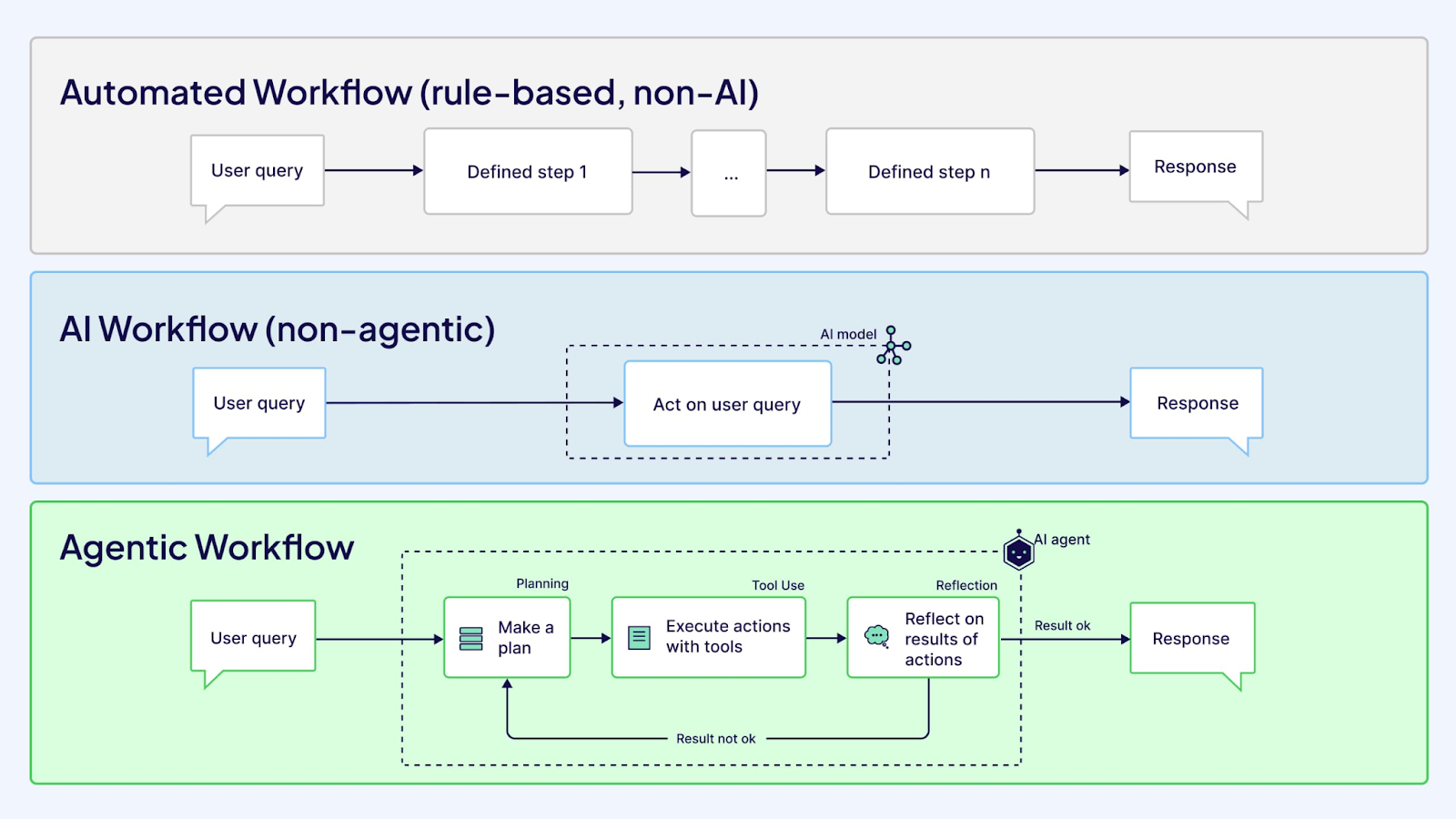

Internal workflows are messy, ambiguous, and constantly evolving, far from the clean, idealized scenarios most external demos are built around. When AI tools are deployed internally, they are pushed into this complexity. This exposes flawed logic, brittle prompts, and the places where automation adds confusion rather than clarity. At Twendee Labs, early internal deployment of workflow agents exposed these pain points during sprint planning and QA cycles, which led to concrete improvements in usability, prioritization logic, and task interpretation.

These refinements would not have surfaced in hypothetical user stories or sandboxed trials. Internal friction forced better defaults, more robust behavior, and a product built around how people actually work.

Agentic workflows evolve beyond rule-based automation and single-turn AI. (Source: Weaviate)

2. It builds confidence and institutional knowledge

Teams that use their own AI systems gain far more than surface-level familiarity. They develop a working understanding of how the system behaves under pressure, what assumptions it relies on, and where it tends to fail. This lived experience becomes institutional knowledge distributed across engineering, product, and operations.

When internal users interact with the system daily, they:

- Learn how to interpret outputs in context, not isolation

- Know when to trust automation and when to intervene

- Discover unexpected edge cases early and adapt design accordingly

- Build the documentation and usage standards that external customers will rely on later

This depth of usage gives teams across the organization the confidence to support, explain, and improve the product without relying entirely on the engineers who built it. It also reduces handoff risks between development, customer success, and sales, because the organization has already aligned around what the tool can and cannot do. Without this internal fluency, external adoption is built on hope rather than proof.

3. It reduces risk before scale

The cost of unproven AI is not just feature failure. It is client churn, broken trust, and lost momentum. By adopting AI internally first, companies catch high-impact issues before they reach customers, while the stakes are lower and iteration is faster. These issues include alert fatigue, logic conflicts, and performance instability under real workloads.

Platforms like IBM’s Cloud Pak for AIOps reached enterprise readiness because IBM’s own DevOps teams used them in production. That exposure led to key features, such as confidence scoring to reduce alert noise. Without internal pressure, these flaws would likely have surfaced later, at much greater cost.

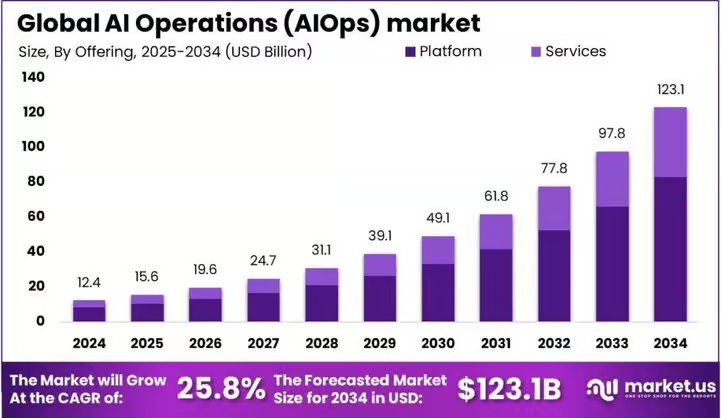

Projected growth of the AIOps market through 2034 highlights the urgency for tools to deliver real developer value before scaling. (Source: Market.us)

Building and deploying AI internally does more than improve functionality. It creates systems that can withstand real operational pressure and earn trust through direct exposure to risk. Developers uncover gaps in logic. Product managers test alignment with real workflows. Ops teams probe resilience in unpredictable conditions. People in these roles do not rely on automation blindly. They challenge it, adjust it, and reshape it based on what actually happens in use. That is where meaningful trust is formed.

In contrast, AI products designed only for external rollout often prioritize performance in ideal scenarios. They are optimized for demos, not for messy deployment realities. Without internal use:

- Edge cases emerge late, often when resolution is costly

- Documentation remains shallow or disconnected from real tasks

- Internal teams lack shared understanding of what the system actually does

- Adoption depends more on marketing than on proven effectiveness

This is why technical performance is not the final measure of readiness. The real test is whether the teams who built the system trust it enough to use it every day.

Conclusion

In AI development, credibility is earned through use, not intention. Teams that adopt AI internally gain visibility into what works, what fails, and what needs refinement. That process builds durable systems and creates a foundation for external trust.

Internal AI adoption is not a delay; it is a strategic accelerator.

The strongest AI companies will not be those with the fastest demos, but those with the most resilient internal tools. Building for the outside world begins at home. Explore how internal automation is driving smarter teams at twendeelabs.com, and follow Twendee on X and LinkedIn.