AI adoption in Web3 is everywhere in conversation, yet strangely absent from many operations. Companies pitch “AI-enhanced ecosystems,” whitepapers boast “autonomous smart contracts” and founders drop “generative AI” into every investor deck. But beneath the surface, how many are actually using AI to solve real business problems? The gap between aspiration and implementation is wider than many admit.

This article explores that disconnect and provides a grounded starting point for actually applying AI to cut costs, improve productivity and build scalable systems in blockchain and beyond.

The Hype Gap: Everyone Talks About AI, Few Actually Use It

In 2025, AI remains the most discussed tech theme in Web3. But are we confusing talking about AI with using it?

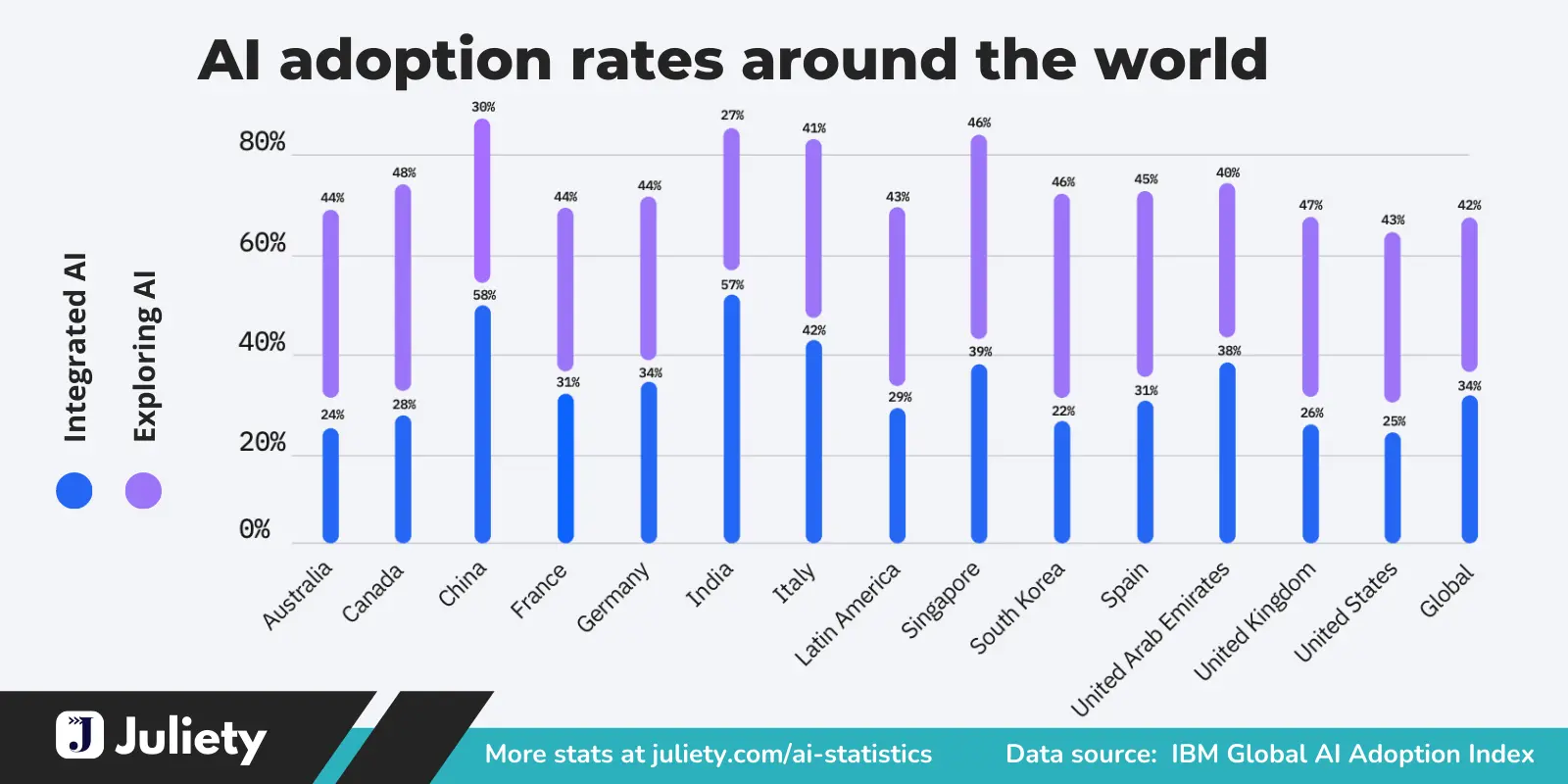

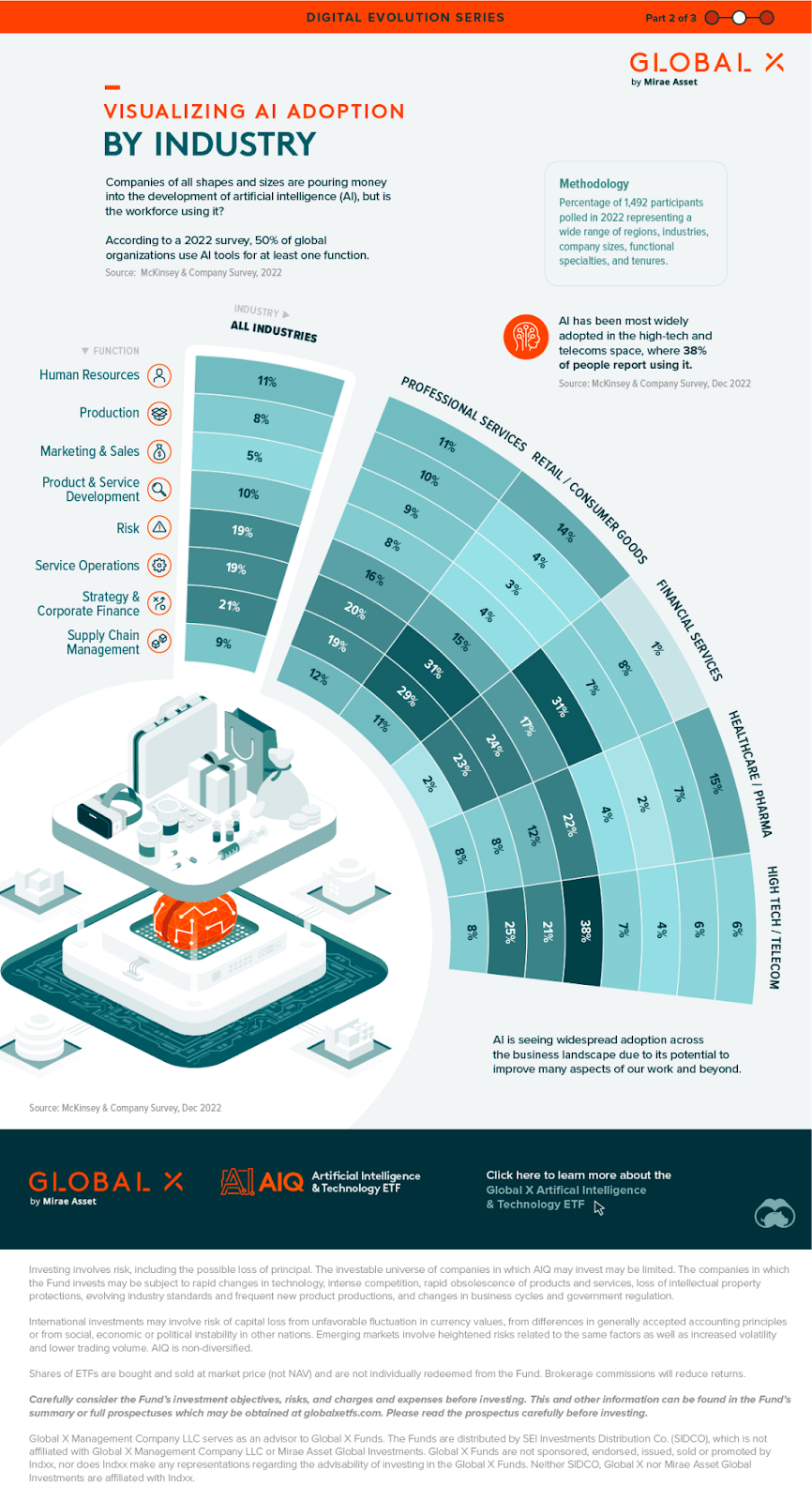

According to the U.S. Census Bureau, only 5.4% of American businesses used any AI tools in production by early 2024, despite AI dominating headlines. The McKinsey Global AI Report (2024) shows that even in tech-heavy sectors, fewer than 30% of firms deploy AI at scale and most use only one or two isolated applications.

Infographic showing AI adoption rates by country and sector, with an emphasis on growth trends (Source: Juliety)

Web3 paints a similar picture. Countless projects claim “AI integration,” yet the implementations often stop at:

- A basic chatbot on a dApp site

- A generic LLM API wrapped into an “AI agent”

- A roadmap slide promising AI-powered governance “soon.”

This is not meaningful AI adoption in Web3. It’s AI-washing: slapping on the label to attract attention rather than to deliver impact. Meanwhile, 73% of companies experimenting with AI report zero measurable ROI within the first year (Source: BCG x MIT AI Business Barometer 2024).

Global AI adoption rates by industry, highlighting that only ~50% of businesses use AI at all (Source: Visual Capitalist, global AI adoption breakdown by sector)

Understanding the Barriers to Real AI Adoption

Several structural and operational factors contribute to the slow pace of adoption across Web3 startups and blockchain enterprises:

1. Misalignment Between AI Use and Business Goals.

Many organizations pursue AI initiatives without clearly identifying how they support measurable business objectives. This results in tools that function in isolation, generating insights or automation outputs, but failing to integrate meaningfully with user experience, governance, or monetization models.

2. Underinvestment in Data Infrastructure.

AI systems require clean, labeled, and connected data to function properly. Blockchain-based firms often deal with fragmented on-chain/off-chain data and lack the unified data architecture necessary for training and inference. Without structured pipelines and data governance, AI becomes unreliable and difficult to scale.
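As a minimal sketch of what a unified view can look like, the Python snippet below merges on-chain transfers and off-chain support records under one normalized wallet key. The field names (`address`, `value`, `wallet`, `tickets`) and the lowercase normalization are illustrative assumptions, not a prescribed schema.

```python
def normalize_address(address: str) -> str:
    """Lowercase hex addresses so checksum-cased and lowercase forms match."""
    return address.strip().lower()


def _empty_profile(address: str) -> dict:
    return {"address": address, "tx_count": 0, "volume": 0.0, "support_tickets": 0}


def unify_records(onchain: list[dict], offchain: list[dict]) -> dict[str, dict]:
    """Merge both sources into one profile per wallet (hypothetical schema)."""
    profiles: dict[str, dict] = {}
    for tx in onchain:
        key = normalize_address(tx["address"])
        profile = profiles.setdefault(key, _empty_profile(key))
        profile["tx_count"] += 1
        profile["volume"] += tx["value"]
    for record in offchain:
        key = normalize_address(record["wallet"])
        profile = profiles.setdefault(key, _empty_profile(key))
        profile["support_tickets"] += record.get("tickets", 0)
    return profiles


# Made-up sample rows; the same wallet appears with different casing.
onchain = [
    {"address": "0xAbC1", "value": 1.5},
    {"address": "0xabc1", "value": 2.0},
    {"address": "0xDEF2", "value": 0.25},
]
offchain = [{"wallet": "0xABC1", "tickets": 3}]
profiles = unify_records(onchain, offchain)
print(profiles["0xabc1"])
```

Keying every source on the same normalized identifier is the smallest step toward a unified architecture. A production pipeline would also need schema validation and lineage tracking, which this sketch leaves out.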

3. Limited Technical and Operational Readiness.

Deploying AI at scale is not just a technical challenge; it also requires cultural and organizational readiness. Many Web3 firms operate with lean teams and agile cycles, and lack the AI-literate staff or MLOps processes needed to manage experiments, deployment, and model updates over time.

4. Cost and Complexity of LLM Infrastructure.

Large language models (LLMs) like GPT-4 or Claude offer immense power but come with significant operational costs. Without LLM cost management, startups quickly face unsustainable API bills or performance bottlenecks.

Even simple use cases, such as AI customer support, can incur unexpected costs if prompt length, request frequency, or caching is not optimized.
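To make that cost sensitivity concrete, here is a back-of-the-envelope sketch in Python. The per-token prices, traffic volume, and cache hit rate are placeholder assumptions chosen for illustration, not figures from any provider's price list.

```python
def monthly_llm_cost(
    requests_per_day: int,
    prompt_tokens: int,
    completion_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    cache_hit_rate: float = 0.0,
    days: int = 30,
) -> float:
    """Estimate monthly API spend in USD.

    Assumes cached requests cost nothing, which is a simplification.
    """
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    billable_requests = requests_per_day * days * (1.0 - cache_hit_rate)
    cost_per_request = (
        (prompt_tokens / 1000) * price_in_per_1k
        + (completion_tokens / 1000) * price_out_per_1k
    )
    return billable_requests * cost_per_request


# Hypothetical prices: $0.01 per 1K input tokens, $0.03 per 1K output tokens.
baseline = monthly_llm_cost(5000, 2000, 500, 0.01, 0.03)
optimized = monthly_llm_cost(5000, 800, 300, 0.01, 0.03, cache_hit_rate=0.4)
print(f"baseline:  ${baseline:,.2f}/month")
print(f"optimized: ${optimized:,.2f}/month")
```

Under these assumed numbers, the baseline comes to roughly $5,250 per month. Trimming prompts and responses and serving 40% of requests from cache brings it to roughly $1,530. The absolute figures are invented, but the calculation shows how prompt length, request volume, and caching multiply together.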

5. Lack of Governance and Risk Frameworks.

AI systems, particularly generative ones, introduce new risks, including hallucinated content, biased recommendations, and unexplainable decisions. The absence of AI prompt governance and oversight mechanisms leads to inconsistent outputs and reputational risks, particularly in high-trust environments like DeFi or crypto investment platforms.

From Buzz to Business: How to Start Applying AI Smartly

Despite these challenges, a growing number of organizations are moving beyond experimentation and implementing AI solutions that enhance operational performance, reduce costs, and increase scalability.

Step 1: Identify High-Leverage, Measurable Use Cases

Begin by auditing workflows that are:

- Repetitive, rules-based, or data-heavy

- Frequently performed manually by engineers or support teams

- Pain points in terms of speed, accuracy, or cost.

Examples in Web3 environments include:

- On-chain anomaly detection using ML to flag irregular wallet behavior

- AI-generated reporting for DAO or treasury performance

- Transaction support chatbots trained on protocol documentation

- Auto-tagging wallet addresses by behavior clusters for targeted airdrops or community segmentation.

Case Insight: One L2 blockchain protocol integrated AI-driven wallet classification and reduced fraudulent faucet claims by 34% within three months.
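The anomaly-detection use case can be prototyped before any model is trained. The sketch below is a statistical baseline (a modified z-score based on the median absolute deviation) standing in for an ML model. The wallet labels, transaction counts, and the 3.5 threshold are illustrative assumptions.

```python
from statistics import median


def flag_anomalous_wallets(tx_counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return wallets whose activity deviates strongly from the median."""
    values = list(tx_counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread in the data, so nothing can be called an outlier
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return sorted(
        wallet
        for wallet, count in tx_counts.items()
        if 0.6745 * abs(count - med) / mad > threshold
    )


# Made-up daily transaction counts per wallet.
daily_tx = {
    "0xaaa": 12,
    "0xbbb": 9,
    "0xccc": 11,
    "0xddd": 10,
    "0xeee": 480,
    "0xfff": 13,
}
print(flag_anomalous_wallets(daily_tx))  # ['0xeee']
```

A baseline like this gives a measurable reference point. If a trained classifier cannot beat it on labeled incidents, the added complexity is hard to justify.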

Step 2: Prioritize Internal Automation Before User-Facing Features

While AI-enhanced user tools (like code assistants or AI DAOs) attract attention, the fastest ROI is often found in internal ops:

- Automating test generation and documentation for dev teams;

- Summarizing DAO proposal discussions;

- Forecasting protocol performance based on on-chain metrics.

McKinsey (2024) reports that AI-enabled internal automation delivers a 20–45% improvement in engineering throughput when deployed within dev pipelines.

Step 3: Control Costs with Model Selection and Prompt Design

Avoid overuse of large models where smaller ones suffice. Apply techniques like:

- Prompt optimization and truncation;

- Response capping (token length);

- Routing simple queries to cached or rule-based flows;

- Training domain-specific lightweight models or embeddings.

This approach ensures AI scalability without incurring unsustainable costs, a key concern in crypto startups managing burn rate.
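A minimal sketch of how these techniques can combine in one request path is shown below. The `QueryRouter` class, the keyword rules, the character budget, and the `fake_model` stub are all hypothetical. A real deployment would swap the stub for an actual model client and count tokens rather than characters.

```python
import hashlib

MAX_PROMPT_CHARS = 2000    # crude stand-in for a token budget
MAX_RESPONSE_TOKENS = 256  # response cap passed to the model call

# Rule-based answers for frequent, simple questions (illustrative content).
RULES = {
    "gas fee": "Gas fees vary with network load; see the fee page in the docs.",
    "bridge": "Bridging steps are listed in the bridge guide.",
}


class QueryRouter:
    """Try rules first, then the cache, and only then call the model."""

    def __init__(self, call_model):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.model_calls = 0

    def answer(self, query: str) -> str:
        normalized = " ".join(query.lower().split())
        for keyword, reply in RULES.items():
            if keyword in normalized:
                return reply  # rule-based flow, no model cost
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in self._cache:
            return self._cache[key]  # cached flow, no model cost
        prompt = normalized[:MAX_PROMPT_CHARS]  # prompt truncation
        self.model_calls += 1
        reply = self._call_model(prompt, MAX_RESPONSE_TOKENS)
        self._cache[key] = reply
        return reply


def fake_model(prompt: str, max_tokens: int) -> str:
    """Stub standing in for a real LLM API call."""
    return f"[model answer to: {prompt[:40]}]"


router = QueryRouter(fake_model)
router.answer("What is the gas fee today?")            # answered by a rule
router.answer("Explain the staking rewards schedule")  # model call
router.answer("explain the  staking rewards schedule") # served from cache
print(router.model_calls)  # 1
```

Only one of the three queries reaches the model. Tracking `model_calls` against total queries gives a simple deflection rate to monitor alongside the API bill.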

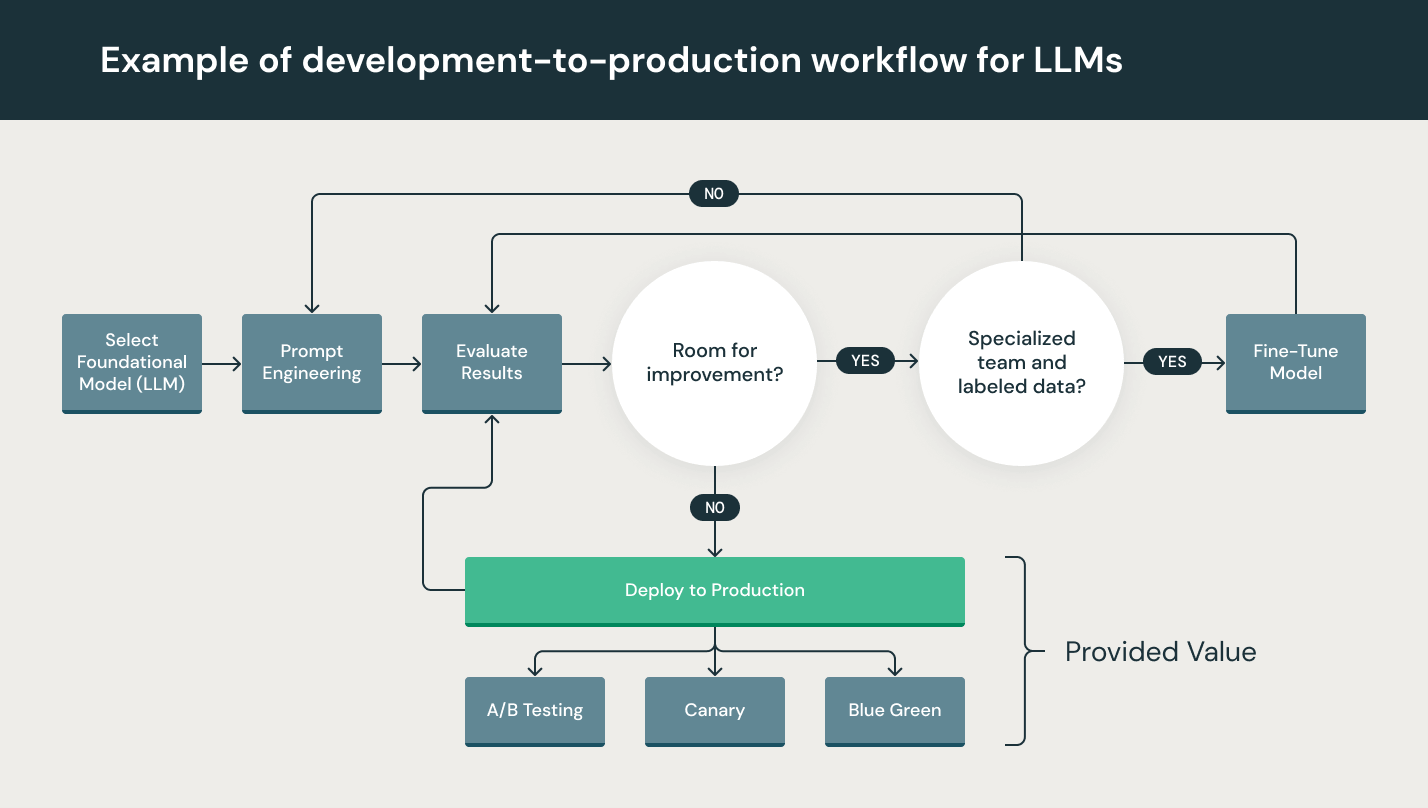

Step 4: Establish Prompt Governance and Human-in-the-Loop (HITL) Practices

Deploy structured prompt libraries and monitor interactions for:

- Consistency across tone and content;

- Performance under varied inputs;

- Compliance with legal and reputational standards.

The Stanford AI Index (2024) notes that 79% of organizations adopting generative AI report quality degradation or hallucination issues when prompt governance is absent. Embedding AI alongside human oversight allows startups to refine outputs continuously while building trust with users and regulators.

Development‑to‑production workflow for LLMs, illustrating stages: prompt engineering, evaluation, fine‑tuning, deployment and monitoring (Source: The Digital Insider)

Step 5: Track Impact and Adjust

For every AI deployment, define clear KPIs:

- Time saved per workflow;

- Cost reduced per interaction;

- Accuracy or precision gains;

- User satisfaction deltas post-deployment.

AI should not remain a proof of concept. Each integration must be assessed like any other product or feature, with ROI measured, compared and iterated.
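The KPIs above reduce to simple before-and-after arithmetic. The sketch below shows one way to compute them. The metric names and all sample numbers are hypothetical, and the ROI formula is the plain (savings minus cost) over cost ratio.

```python
from dataclasses import dataclass


@dataclass
class WorkflowMetrics:
    minutes_per_task: float
    cost_per_interaction: float  # USD
    accuracy: float              # fraction between 0 and 1


def kpi_deltas(before: WorkflowMetrics, after: WorkflowMetrics, tasks_per_month: int) -> dict[str, float]:
    """Compare a workflow before and after an AI deployment."""
    return {
        "hours_saved_per_month": (before.minutes_per_task - after.minutes_per_task)
        * tasks_per_month / 60,
        "cost_reduction_per_interaction": before.cost_per_interaction
        - after.cost_per_interaction,
        "accuracy_gain_points": (after.accuracy - before.accuracy) * 100,
    }


def simple_roi(monthly_savings: float, monthly_ai_cost: float) -> float:
    """Return ROI as a ratio: (savings - cost) / cost."""
    if monthly_ai_cost <= 0:
        raise ValueError("monthly_ai_cost must be positive")
    return (monthly_savings - monthly_ai_cost) / monthly_ai_cost


# Made-up measurements for a support workflow.
before = WorkflowMetrics(minutes_per_task=12.0, cost_per_interaction=1.50, accuracy=0.82)
after = WorkflowMetrics(minutes_per_task=4.0, cost_per_interaction=0.60, accuracy=0.88)
deltas = kpi_deltas(before, after, tasks_per_month=900)
for name, value in deltas.items():
    print(f"{name}: {value:.2f}")
print(f"ROI: {simple_roi(monthly_savings=3000, monthly_ai_cost=1200):.0%}")
```

Capturing the "before" numbers ahead of deployment matters more than the formula itself. Without a baseline, none of these deltas can be computed.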

Conclusion

AI is no longer a future technology; it is today’s infrastructure challenge. For Web3 companies, the path forward lies in moving beyond rhetoric toward targeted, strategic deployments that create tangible value.

Organizations that approach AI adoption in Web3 with clarity, discipline, and measurable goals will build more resilient systems, scale faster, and avoid costly dead-ends. Those who rely on AI for optics, without proper planning or governance, risk wasting resources, eroding trust and falling behind.

At Twendee, we help blockchain-native companies move from AI aspiration to AI execution. Our services include:

- Scalable AI workflow design

- On-chain data intelligence integration

- Model tuning and deployment optimization

- AI risk assessment and governance planning.

▶ Explore our recent advisory:

How to Offer Crypto Services Globally Without Breaking Local Laws

Or follow us for more insights:

- Twendee on X (Twitter)

- Twendee Labs LinkedIn