Web3 is moving from hype to a tangible reality in 2025. Decentralized applications (dApps) are gaining mainstream adoption, and their success hinges on robust, scalable Web3 cloud architecture. In this context, “cloud architecture” spans everything from traditional cloud services to cutting-edge decentralized infrastructure. The goal is to balance decentralization, scalability and security in a cost-effective way.

This article explores the best cloud architecture models for Web3 platforms in 2025: it compares hybrid, decentralized, serverless and modular blockchain architectures and recommends which models fit specific use cases such as DeFi, NFTs, DAOs and Layer 2 networks. We’ll also highlight recent (2024–2025) developments and platforms (e.g., IPFS, Filecoin, Chainstack, Akash, AWS’s Web3 services) that exemplify these architectures.

By the end, you’ll understand how to strategically architect Web3 solutions for success and why a thoughtful cloud strategy is as important as the blockchain protocol itself. Let’s dive in.

Hybrid Cloud Integration for Web3

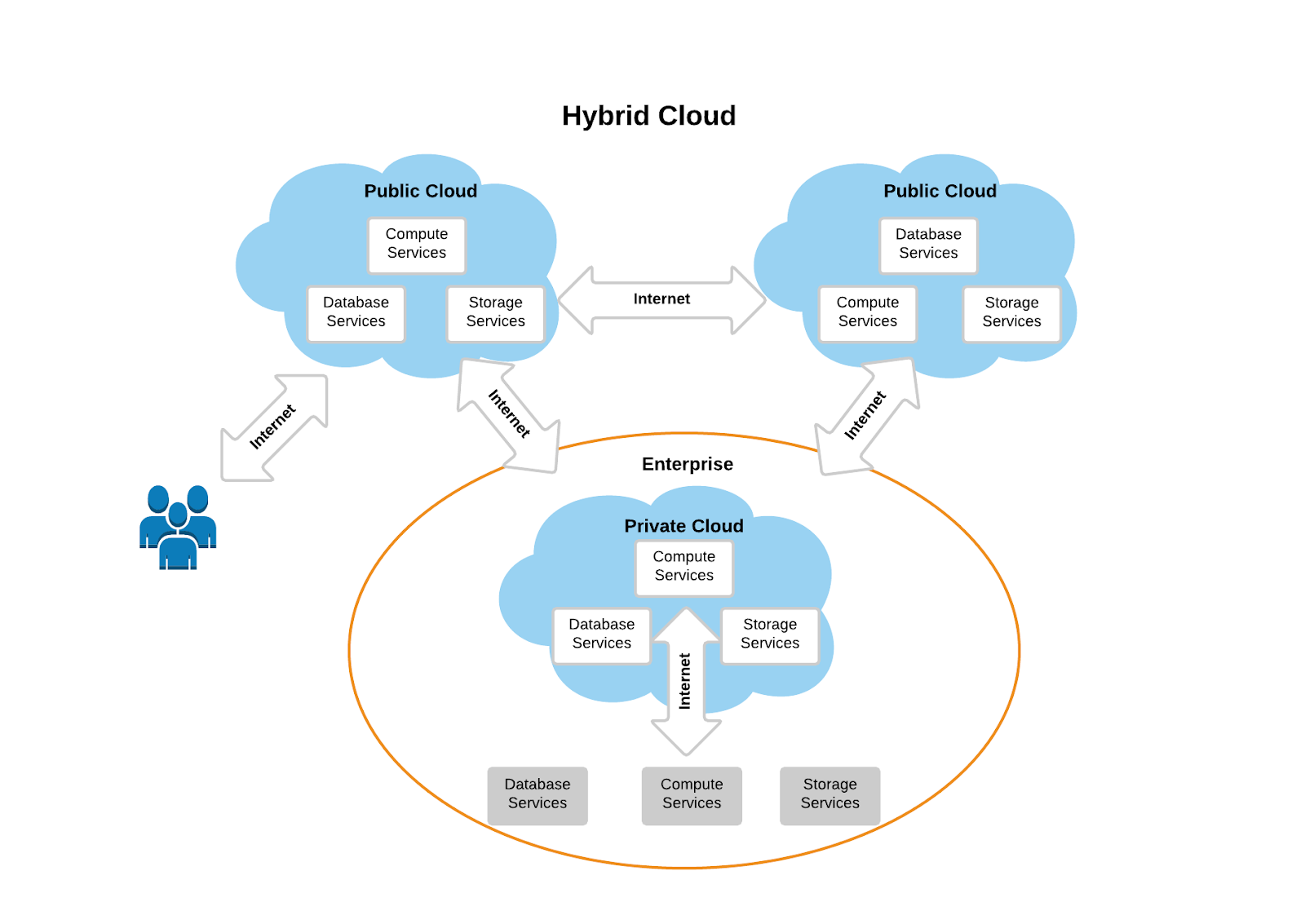

One prevalent model is the hybrid cloud approach, which combines decentralized blockchain components with traditional cloud services. While Web3 emphasizes decentralization, in practice many dApps still rely on centralized infrastructure for certain layers. A hybrid architecture acknowledges this reality, blending the trustlessness of blockchain with the performance and convenience of cloud.

In a hybrid Web3 stack, core transactions and smart contracts execute on decentralized networks, but supporting services run in the cloud for efficiency. For example, a dApp’s smart contracts might live on Ethereum or another blockchain, while its user interface is served via AWS or another cloud CDN for fast global access. Non-critical or large data (images, logs) can be stored off-chain – either on a decentralized storage network or a traditional cloud database, depending on requirements. This hybrid infrastructure lets developers optimize for performance, cost, and compliance without sacrificing blockchain’s integrity:

- Performance: User-facing components (web front-ends, APIs) hosted on high-speed cloud servers ensure low-latency interactions, while the blockchain back-end handles security-critical logic. Many Web3 projects host their front-ends on services like Netlify or AWS, which offer reliability and scaling, whereas on-chain operations handle consensus and settlement.

- Cost and Scalability: Cloud services allow on-demand scaling for parts of the application that see variable load (e.g., a surge in users on a DeFi dashboard) without overburdening the blockchain. This elasticity is crucial for good UX during traffic spikes.

- Compliance and Privacy: Enterprises or regulated applications can keep sensitive data on private clouds or databases while still anchoring key hashes or transactions to a public blockchain. This “hybrid blockchain” design, akin to hybrid cloud, gives the best of both worlds: organizations can use blockchain as a tamper-proof layer without exposing all data publicly.
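The anchoring pattern in the compliance bullet can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the record fields are hypothetical, only the salted SHA-256 commitment would be published, and the actual on-chain write (a contract call or transaction) is omitted because it depends on your chain and tooling. The random salt stops anyone from confirming a guessed low-entropy record by hashing candidates and comparing them with the public value.

```python
import hashlib
import hmac
import json
import secrets


def canonical_bytes(record: dict) -> bytes:
    """Serialize deterministically so equal records always produce equal bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def _digest(record: dict, salt: str) -> str:
    return hashlib.sha256(bytes.fromhex(salt) + canonical_bytes(record)).hexdigest()


def commit(record: dict) -> tuple[str, str]:
    """Return (commitment, salt): the commitment is what would be anchored on-chain,
    while the salt stays private next to the record in the off-chain database."""
    salt = secrets.token_hex(16)
    return _digest(record, salt), salt


def verify(record: dict, salt: str, anchored: str) -> bool:
    """Recompute the commitment and compare it (in constant time) with the anchored value."""
    return hmac.compare_digest(_digest(record, salt), anchored)


# Hypothetical KYC record that must stay in a private database.
record = {"customer_id": "c-1042", "kyc_status": "approved", "checked_at": "2025-01-15"}
commitment, salt = commit(record)
print(verify(record, salt, commitment))                                # True
print(verify({**record, "kyc_status": "rejected"}, salt, commitment))  # False
```

Because only the digest is public, the chain can later prove that a record existed in exactly this form at anchoring time without revealing its contents.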

Many real-world Web3 architectures in 2025 are effectively hybrid. “Web3 applications require infrastructure to run, including blockchain nodes, front-end apps, and storage. While blockchains process transactions, dApp front-ends still need hosting solutions like AWS, IPFS, or decentralized cloud providers like Akash Network and Filecoin”. In other words, Web3 doesn’t replace cloud computing; it augments it. Cloud providers have noticed: leading cloud providers now offer Blockchain-as-a-Service (BaaS) tooling to integrate with this hybrid model. These services let developers spin up nodes or consortium networks easily, bridging cloud and blockchain.

Hybrid cloud architecture combines public cloud compute (e.g., AWS/GCP) with private cloud or decentralized storage, facilitating scalable Web3 infrastructure with built-in redundancy (Source: circuitdiagramkoto)

When to use Hybrid: Most dApps and platforms that need both decentralization and Web2-grade performance will benefit from a hybrid architecture. If you’re building a consumer-facing Web3 app (DeFi exchange, NFT marketplace, gaming dApp) where smooth UX is critical, a hybrid model allows you to use the blockchain where it matters (transaction integrity, digital ownership) and cloud where it shines (speed, big data handling). Hybrid setups are also common for enterprise blockchain deployments.

Best-Fit Architectures for Key Web3 Use Cases

Now that we’ve covered the major architecture models individually, it’s important to recognize that the “best” cloud architecture for Web3 is context-dependent. A solution that works for one project might not be ideal for another. Here we match each model (or combination thereof) to specific Web3 platform scenarios:

DeFi Platforms and Decentralized Exchanges (DEXs)

DeFi (decentralized finance) applications include DEXs, lending platforms and derivatives protocols, which often must handle high transaction volumes and real-time data. For these, scalability and security are paramount (nobody will use a DEX that’s slow or insecure). The recommended architecture for modern DeFi is a mix of modular and hybrid approaches:

- Layer 2 / Modular for Scaling: Almost all serious DeFi platforms in 2025 either live on a high-performance chain or use Layer 2 networks for scalability. A DEX can deploy its smart contracts on an optimistic rollup or a ZK-rollup to get much higher throughput than on Ethereum mainnet while still benefiting from Ethereum’s security. For example, an AMM (automated market maker) might process trades on Arbitrum or zkSync (the execution layer) and rely on Ethereum L1 as the settlement layer for finality. This modular design lets the DeFi app serve many users with low fees. As noted, rollups can boost throughput by 100× or more while inheriting the settlement security of the underlying L1, which is ideal for exchanges handling continuous trades.

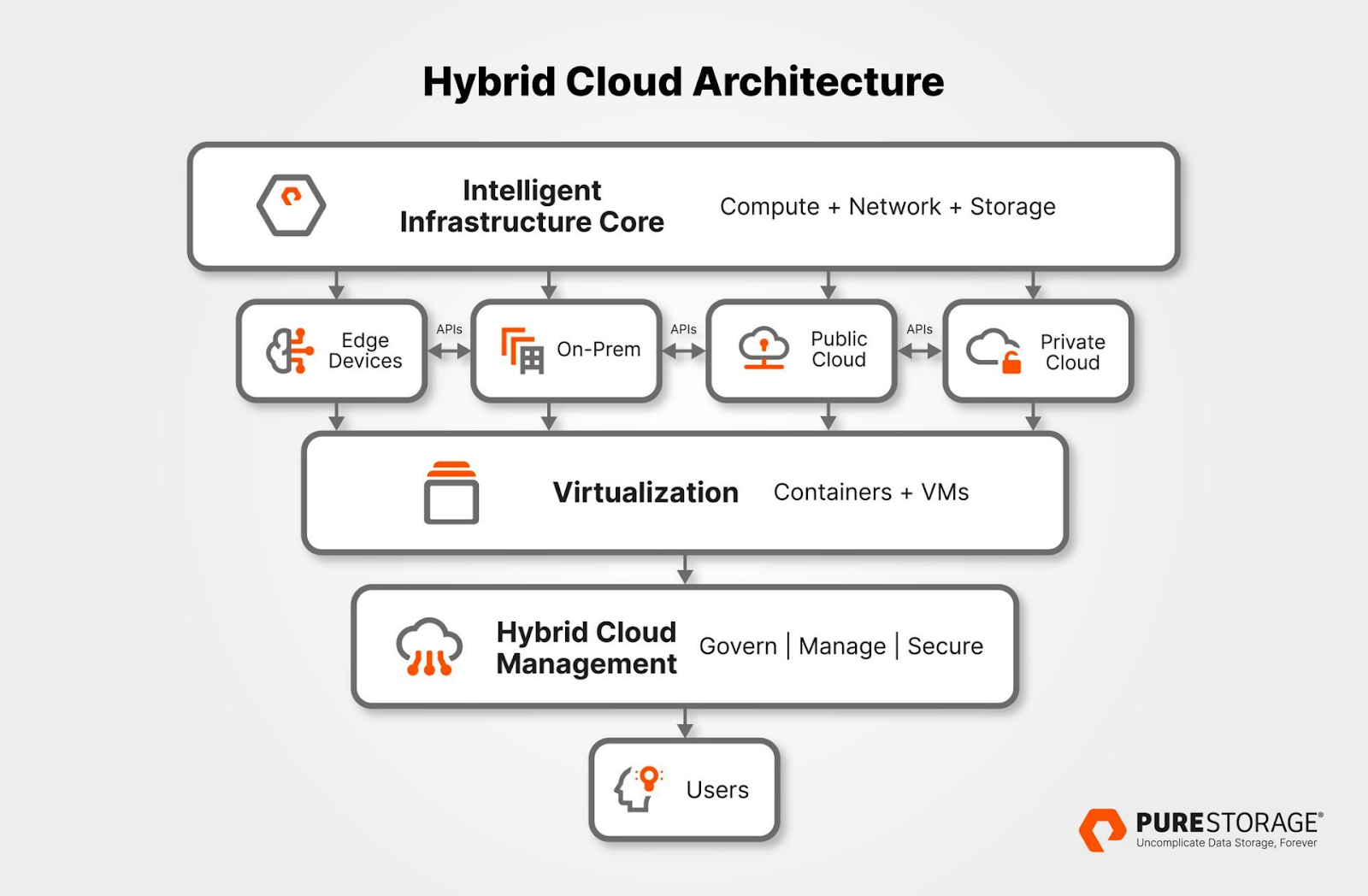

- Hybrid Cloud Elements: While the on-chain transactions might be on L2, supporting infrastructure for a DEX benefits from hybrid cloud. Order book-based exchanges (like dYdX v3) historically ran an off-chain order book server in the cloud to complement on-chain settlement. Even AMMs use cloud services for things like price oracles (Chainlink nodes themselves often run in the cloud), analytics dashboards, and front-end hosting. To ensure reliability, a DeFi platform should spread its off-chain components across multiple cloud regions or providers (multi-cloud), avoiding a single point of failure. (We saw a cautionary tale in 2021, when the dYdX exchange went down during an AWS outage because critical parts of its stack were tied to one region.) Today, a DEX would architect for redundancy: e.g., deploy backend services across AWS and Azure, or use a decentralized cloud for failover. The core trading engine might remain decentralized where possible, but components like web servers, notification services and caching layers can run serverlessly or on traditional cloud for speed.
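The multi-cloud failover idea above can be sketched as a small client that tries providers in priority order and temporarily skips one that has just failed. The provider names and the `aws_api`/`azure_api` functions are hypothetical stand-ins for the same backend deployed in two clouds; a production version would add timeouts, health checks and metrics.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Provider:
    name: str
    call: Callable[[str], str]              # hypothetical client for one region or cloud
    cooldown_until: float = float("-inf")   # monotonic time before which it is skipped


class FailoverClient:
    """Send each request to the first healthy provider in priority order.

    A provider that raises is put in cooldown for `cooldown_s` seconds; if every
    provider is cooling down, all of them are tried anyway rather than failing outright.
    """

    def __init__(self, providers: list[Provider], cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.providers = providers
        self.cooldown_s = cooldown_s
        self.clock = clock

    def request(self, path: str) -> tuple[str, str]:
        now = self.clock()
        healthy = [p for p in self.providers if p.cooldown_until <= now]
        errors = []
        for p in healthy or self.providers:
            try:
                return p.name, p.call(path)
            except Exception as exc:  # narrow this to network/timeout errors in real code
                p.cooldown_until = now + self.cooldown_s
                errors.append(f"{p.name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def aws_api(path: str) -> str:
    raise ConnectionError("us-east-1 unavailable")  # simulated regional outage


def azure_api(path: str) -> str:
    return f"azure served {path}"


client = FailoverClient([Provider("aws", aws_api), Provider("azure", azure_api)])
print(client.request("/markets"))  # ('azure', 'azure served /markets')
```

Putting a failed provider into cooldown avoids hammering a region that is mid-outage, while falling back to every provider keeps the client from giving up when all of them are briefly marked unhealthy.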

A hybrid setup combining cloud compute (e.g., AWS) with decentralized storage and gateways (Source: purestorage)

Summary for DeFi: Use modular blockchain scaling (L2s, subnets, or app-chains) to handle transaction load and keep fees low. Complement this with a hybrid cloud setup for ancillary services, so that the platform stays online even if one cloud goes down (consider decentralized infrastructure for critical pieces like data feeds or fallbacks). Given the value at stake, security is non-negotiable: any cloud component should be carefully security-hardened, and the blockchain layer should ideally inherit security from a battle-tested Layer 1 rather than an unproven new chain.
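For critical inputs such as price data, one way to avoid depending on a single feed is to read several independent sources, take the median of the fresh ones, and refuse to return a price without a quorum. This is a minimal off-chain sketch; the feed names, the 60-second staleness window and the three-source quorum are illustrative assumptions, not recommended values.

```python
import statistics
from dataclasses import dataclass


@dataclass
class PriceReport:
    source: str       # hypothetical feed identifier (an oracle network, an exchange API, ...)
    price: float
    timestamp: float  # seconds since the epoch when the price was observed


def aggregate_price(reports: list[PriceReport], now: float,
                    max_age_s: float = 60.0, min_sources: int = 3) -> float:
    """Median of fresh reports from distinct sources; refuse to answer without a quorum."""
    latest: dict[str, PriceReport] = {}
    for r in reports:
        if r.price <= 0 or now - r.timestamp > max_age_s:
            continue  # drop nonsensical or stale data
        # Keep only the newest report per source so one feed cannot count twice.
        if r.source not in latest or r.timestamp > latest[r.source].timestamp:
            latest[r.source] = r
    if len(latest) < min_sources:
        raise ValueError(f"only {len(latest)} fresh sources, need {min_sources}")
    return statistics.median(r.price for r in latest.values())


now = 1_700_000_000.0
reports = [
    PriceReport("feed-a", 2001.0, now - 5),
    PriceReport("feed-b", 1999.0, now - 10),
    PriceReport("feed-c", 2500.0, now - 3),    # outlier: the median is unaffected
    PriceReport("feed-d", 2000.0, now - 600),  # stale: dropped
]
print(aggregate_price(reports, now))  # 2001.0
```

The median tolerates a minority of wrong or manipulated feeds, and the quorum check makes the service fail closed instead of quoting a price from one surviving source.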

NFT Marketplaces and Digital Collectibles

NFT marketplaces (for art, collectibles, gaming items, etc.) have exploded in popularity, and their needs are somewhat distinct. They deal with media files (images, videos) associated with tokens and often see traffic spikes during popular drops or sales. For NFTs, decentralized storage and content delivery are critical architecture considerations, alongside scalable web services for the marketplace functionality.

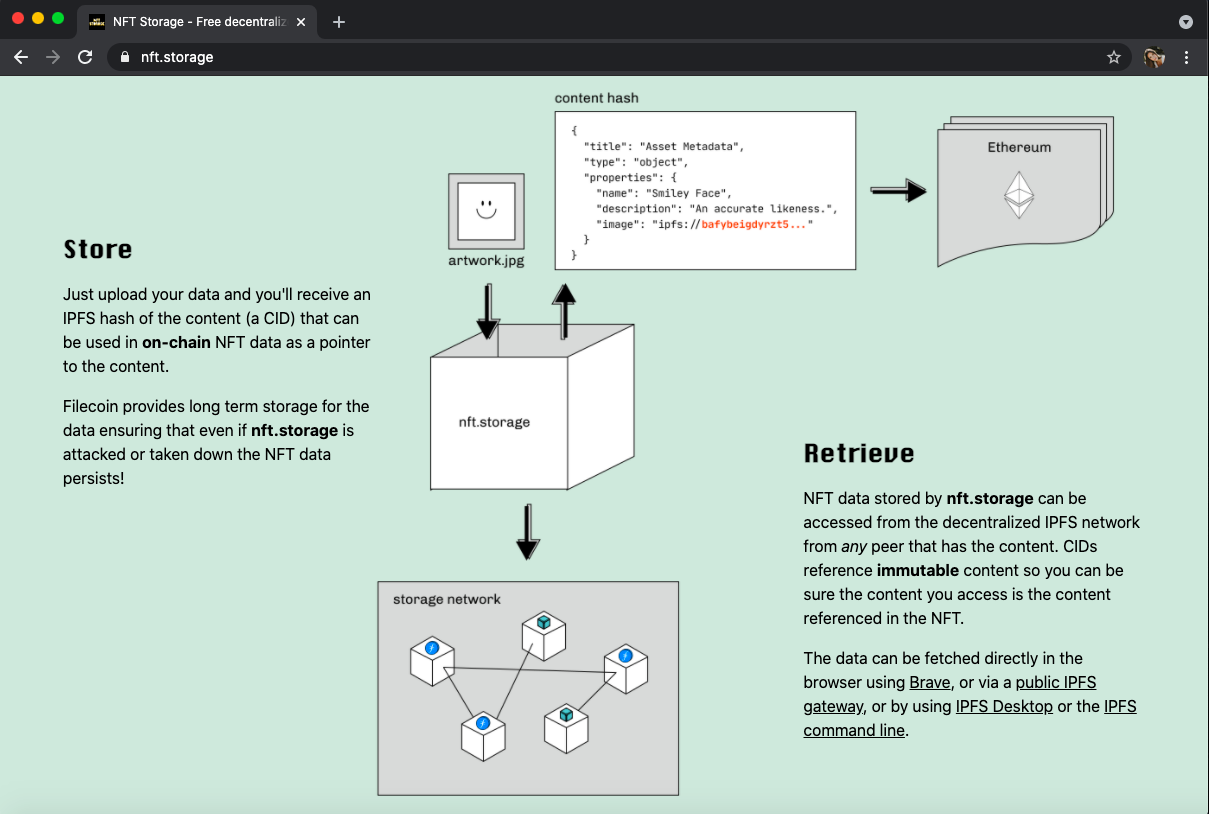

- Decentralized Storage for Assets: It is now widely considered best practice to store NFT content (the actual image or metadata JSON) on a decentralized storage network like IPFS/Filecoin or Arweave rather than on a centralized server. This ensures the NFT you bought doesn’t vanish or get altered if a company’s server goes offline. Marketplaces should integrate with these storage networks natively: e.g., when a user uploads an artwork to mint, the file is pinned to IPFS and a Filecoin deal is made to persist it. This way, each NFT token’s metadata URI can be a resilient, content-addressed link (ipfs://CID or ar://TX_ID). The CloneX incident (noted earlier) is a perfect example of why: relying on a single CDN can make an NFT “disappear” overnight due to a service issue. By 2025, users are savvy and often demand that NFTs be hosted on decentralized storage; marketplaces that do this build trust.

- Scalable Backend & Search: An NFT marketplace’s backend involves listing items, searching/filtering, user profiles, bidding logic, etc. These functions resemble Web2 e-commerce in many ways and benefit from a serverless or cloud microservices approach. For instance, search functionality might use a cloud database or an indexed cache of blockchain data to allow fast queries (since querying the blockchain directly for “all NFTs by X creator” is slow). Here a hybrid model applies: the core truth (who owns what NFT) is on-chain (maybe on an Ethereum L1 or L2), but the marketplace will maintain an off-chain index for snappy UX. This index can be maintained by listening to blockchain events (using a serverless function or a background worker) and updating a database. The use of cloud databases and APIs can drastically improve performance for end-users (e.g., loading a collection page quickly), while the blockchain remains the source of truth for transactions.

- Content Delivery and Caching: Images and media for NFTs can be large, and decentralized networks, while reliable, can be slower to fetch from than a traditional CDN. Many NFT platforms use hybrid approaches like caching popular content on a CDN or using IPFS gateways. Another innovative approach is using peer-to-peer content delivery in browsers (some NFT sites use IPFS via the browser, or leverage protocols like Livepeer for decentralized video streaming). Still, to ensure smooth loading worldwide, a combination of decentralized storage with a smart caching layer (possibly a cloud-based CDN or distributed edge network) is useful.

- Serverless for Event Triggers: NFT drops often have specific timing logic (start auction at X time, close bidding at Y, distribute rewards at Z). These can be orchestrated with serverless functions or cloud cron jobs that call smart contracts at the right times. It saves the team from running dedicated cron servers and scales if many events run concurrently (think of a marketplace scheduling thousands of auctions).
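Content addressing is what makes the `ipfs://` links in the storage bullet tamper-evident: the identifier is derived from the bytes themselves, so anything served by a gateway or CDN cache can be checked by re-hashing it. The sketch below computes a CIDv1 with the raw codec and SHA-256, assuming the content fits in a single raw block (as small metadata files typically do when added with CIDv1 and raw leaves); larger files are chunked by IPFS tooling and get a different root CID, so treat this as an illustration rather than a replacement for the IPFS libraries.

```python
import base64
import hashlib

# Multiformats codes: CID version 1, "raw" content codec, sha2-256 multihash (32-byte digest).
CID_V1, RAW_CODEC, SHA2_256, DIGEST_LEN = 0x01, 0x55, 0x12, 0x20


def raw_cidv1(data: bytes) -> str:
    """CIDv1 (raw codec, sha2-256, base32 multibase) of a single block of bytes.

    Only valid for content stored as one raw block; larger files are chunked into a
    DAG by IPFS tooling and their root CID is computed differently.
    """
    digest = hashlib.sha256(data).digest()
    # All four prefix values are < 0x80, so each is a single-byte varint.
    cid = bytes([CID_V1, RAW_CODEC, SHA2_256, DIGEST_LEN]) + digest
    # Multibase prefix "b" means lowercase RFC 4648 base32 without padding.
    return "b" + base64.b32encode(cid).decode("ascii").lower().rstrip("=")


def verify_content(data: bytes, expected_cid: str) -> bool:
    """Re-hash bytes served by any gateway or CDN cache and check them against the CID."""
    return raw_cidv1(data) == expected_cid


metadata = b'{"name": "Artwork #1", "image": "ipfs://<image-CID>"}'  # placeholder metadata
cid = raw_cidv1(metadata)
token_uri = f"ipfs://{cid}"
print(token_uri.startswith("ipfs://bafkrei"))  # True
print(verify_content(metadata, cid), verify_content(metadata + b" ", cid))  # True False
```

Since a given CID always refers to the same bytes, a CDN can cache content-addressed files indefinitely, which fits the caching approach described above.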

Summary for NFT Marketplaces: Use a decentralized cloud storage model for the NFT assets themselves – this is non-negotiable for long-term trust. Pair that with a scalable cloud backend (could be serverless or a robust cloud cluster) for the marketplace functionality – things like search, filtering, user accounts, which Web3 tech alone might not handle efficiently. A hybrid front-end (e.g., a decentralized front-end that’s also accelerated by traditional CDNs) can ensure both censorship-resistance and performance. By marrying decentralized storage with cloud computing for logic, NFT platforms can achieve both the integrity that creators and collectors expect and the usability that broader audiences need.
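The off-chain index from the backend bullet can be as simple as a table kept in sync with on-chain `Transfer` events. This sketch uses SQLite from Python's standard library as a stand-in for a cloud database and assumes events have already been fetched and decoded (for example from a node's `eth_getLogs` results); the event fields, the addresses and the rule that the first recipient of a mint counts as the creator are assumptions for illustration. Chain reorganizations are not handled here.

```python
import sqlite3

ZERO_ADDRESS = "0x" + "0" * 40  # ERC-721 mints emit Transfer events from the zero address


def open_index(path: str = ":memory:") -> sqlite3.Connection:
    db = sqlite3.connect(path)  # stand-in for a managed cloud database
    db.executescript("""
        CREATE TABLE tokens (
            contract TEXT NOT NULL,
            token_id TEXT NOT NULL,  -- uint256 IDs can overflow SQLite's 64-bit INTEGER
            owner    TEXT NOT NULL,
            creator  TEXT NOT NULL,
            PRIMARY KEY (contract, token_id)
        );
        CREATE INDEX tokens_by_owner ON tokens(owner);
        CREATE INDEX tokens_by_creator ON tokens(creator);
        CREATE TABLE processed (
            block_number INTEGER, log_index INTEGER, PRIMARY KEY (block_number, log_index)
        );
    """)
    return db


def apply_transfer(db: sqlite3.Connection, ev: dict) -> None:
    """Apply one decoded Transfer event; re-delivered events are ignored, so workers can retry."""
    with db:  # one transaction per event: either fully applied or rolled back
        cur = db.execute("INSERT OR IGNORE INTO processed VALUES (?, ?)",
                         (ev["block"], ev["log_index"]))
        if cur.rowcount == 0:
            return  # this (block, log_index) was already applied
        if ev["from"] == ZERO_ADDRESS:
            # Assumption: the first owner of a freshly minted token is recorded as its creator.
            db.execute("INSERT INTO tokens VALUES (?, ?, ?, ?)",
                       (ev["contract"], ev["token_id"], ev["to"], ev["to"]))
        else:
            db.execute("UPDATE tokens SET owner = ? WHERE contract = ? AND token_id = ?",
                       (ev["to"], ev["contract"], ev["token_id"]))


def tokens_owned_by(db: sqlite3.Connection, owner: str) -> list:
    return db.execute("SELECT contract, token_id FROM tokens WHERE owner = ? "
                      "ORDER BY contract, token_id", (owner,)).fetchall()


def tokens_created_by(db: sqlite3.Connection, creator: str) -> list:
    return db.execute("SELECT contract, token_id FROM tokens WHERE creator = ? "
                      "ORDER BY contract, token_id", (creator,)).fetchall()


db = open_index()
COLLECTION = "0xCollection"  # hypothetical contract address
events = [
    {"block": 100, "log_index": 0, "contract": COLLECTION, "token_id": "1",
     "from": ZERO_ADDRESS, "to": "0xAlice"},
    {"block": 101, "log_index": 3, "contract": COLLECTION, "token_id": "1",
     "from": "0xAlice", "to": "0xBob"},
]
for ev in events + events[1:]:  # the second transfer is delivered twice
    apply_transfer(db, ev)
print(tokens_owned_by(db, "0xBob"))      # [('0xCollection', '1')]
print(tokens_created_by(db, "0xAlice"))  # [('0xCollection', '1')]
```

Recording each `(block, log_index)` pair makes the update idempotent, so a serverless worker can safely retry or receive the same event twice, while the chain remains the source of truth.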

NFT content stored on IPFS/Filecoin via decentralized nodes (Source: filecoin.io)

Decentralized Autonomous Organizations (DAOs) and Community Platforms

DAOs are member-run organizations that operate via smart contracts and voting, often coordinating people and funds around a shared goal. A DAO’s needs revolve around governance, transparency, and community coordination tools. While governance itself (voting, treasury) typically runs on-chain through governance tokens and proposals, the DAO’s day-to-day operations might use a mix of Web3 and Web2 tools. The ideal architecture for a serious DAO upholds the DAO’s decentralized ethos while still providing reliable communication and coordination infrastructure:

- Decentralized Collaboration Tools: Many DAOs use platforms like Discord or Discourse for off-chain discussions, which are centralized. However, in 2025, there’s a push towards more decentralized or self-hosted community platforms. A DAO might choose to host its proposal discussions on a decentralized web app that stores data on IPFS and uses Ethereum for identity (Sign-In with Ethereum). This ensures the community’s discourse isn’t subject to deletion by a central server admin. From an architecture view, this may involve using decentralized identity (DID) systems for login, storing discussion threads on decentralized storage, and perhaps using a peer-to-peer network for real-time chat. Some of these components are nascent, so a hybrid approach is practical: e.g., run a self-hosted forum on a cloud VM for now but take regular snapshots to Arweave for permanence, or use a serverless function to archive key DAO decisions to the blockchain for posterity.

- Governance and Voting dApps: The core of DAO governance is typically either a voting smart contract (like Compound’s Governor) or an off-chain voting service like Snapshot, where votes are signed messages typically weighted by on-chain token balances at a chosen block. These need front-end interfaces. A common architecture is a client-side dApp (often just a static site) that connects directly to the blockchain or to a service like Snapshot’s API. This front-end could be hosted in a decentralized way (on IPFS) so that even if the core team disappears, token holders can still access the interface to vote. In parallel, many DAOs maintain a cloud-hosted backend for convenience features: for example, an API to query all past proposals with rich data (because reconstructing that from on-chain data can be slow). That API might run on a cloud server or be an indexer service. Using something like The Graph (decentralized indexing) is popular; although The Graph’s indexer nodes often run in cloud environments, they are operated by many independent parties rather than a single company.

- Treasury Management & Security: DAOs often interact with multi-sig wallets (e.g., Safe, formerly Gnosis Safe) and need to track treasury balances, investments, etc. A backend service that monitors the treasury wallet(s) and provides alerts or analysis can be very helpful. This can be implemented as a serverless function that wakes up on certain events (incoming funds, low balance, etc.) and posts notifications to the DAO’s communication channels. Security-wise, sensitive operations (like executing a proposal to spend funds) are on-chain and require multiple sign-offs by design; but any off-chain infrastructure that holds keys (like a bot posting on behalf of the DAO) should be minimal and carefully secured.

- Content and Transparency: DAOs often publish documents, roadmaps, even real-time financial reports. Publishing these on decentralized storage (IPFS for documents, perhaps Arweave for permanent archives of each version) lets members and outsiders verify that records haven’t been tampered with. Imagine a DAO that publishes its meeting notes to IPFS: the architecture could involve a cloud function that, when triggered (maybe by a new commit on GitHub or a form submission), takes a document, pins it to IPFS, and returns the content hash, which is then posted on-chain or on the DAO website. This way, all important information has an immutable reference.

- Example: A large-scale investment DAO might use governance token voting on Ethereum L1 (for maximum security). It deploys a front-end interface for proposals on IPFS so anyone can access it censorship-free. The discussion forum is initially on Discourse (cloud), but all proposal texts are also automatically saved to an Arweave permaweb application via an API. The DAO’s treasury movements are monitored by a script (running on a cloud VM or as a scheduled Lambda function) that logs every transaction to a Google Sheet for easy reading, and that sheet is regularly backed up to IPFS. The DAO mixes on-chain and off-chain components, but everything off-chain is either non-critical or backed up to a decentralized network to ensure transparency. For day-to-day convenience, the community might still rely on some Web2 tools (it’s hard to avoid them entirely in 2025), but the architecture ensures that the core records and decision-making process live on decentralized rails.
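The treasury monitoring described above could be a small function that a scheduler (a cron-style serverless trigger, for instance) runs against newly observed transactions. In this sketch, the event shape, the thresholds and the alert wording are hypothetical; fetching balances from the chain and posting to a chat channel are left to the caller. Amounts are floats for brevity, whereas real code should use integer base units or `Decimal`.

```python
from dataclasses import dataclass


@dataclass
class TreasuryEvent:
    kind: str      # "incoming" or "outgoing" (hypothetical, already decoded from chain data)
    amount: float  # in the treasury's accounting token; use base units or Decimal in real code
    tx_hash: str


def check_treasury(balance: float, events: list[TreasuryEvent],
                   low_balance: float = 100_000.0,
                   large_outflow: float = 50_000.0) -> list[str]:
    """Turn newly observed treasury activity into alert messages (thresholds are placeholders)."""
    alerts = []
    for ev in events:
        if ev.kind == "incoming":
            alerts.append(f"Received {ev.amount:,.2f} (tx {ev.tx_hash})")
        elif ev.kind == "outgoing" and ev.amount >= large_outflow:
            alerts.append(f"Large outflow of {ev.amount:,.2f} (tx {ev.tx_hash})")
    if balance < low_balance:
        alerts.append(f"Treasury balance {balance:,.2f} is below {low_balance:,.2f}")
    return alerts


events = [TreasuryEvent("incoming", 25_000.0, "0xabc"),
          TreasuryEvent("outgoing", 60_000.0, "0xdef")]
for line in check_treasury(80_000.0, events):
    print(line)  # a real handler would post these to the DAO's chat channel instead
# Received 25,000.00 (tx 0xabc)
# Large outflow of 60,000.00 (tx 0xdef)
# Treasury balance 80,000.00 is below 100,000.00
```

Keeping the function free of keys and signing logic fits the security advice above: the bot only reads and reports, so compromising it cannot move funds.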

Summary for DAOs: A DAO should lean towards decentralization where it matters: governance mechanisms on-chain, data and records stored or at least archived on decentralized networks, and community platforms that don’t undermine the censorship-resistance of the group. However, practical use means leveraging cloud tools: a hybrid architecture is often the reality (with maybe a long-term goal to phase into more decentralized solutions as they become user-friendly). Key recommendations are to avoid single points of failure – e.g., don’t have your only voting interface running on one AWS server; either host redundantly or use IPFS. Use cloud for scalability (like indexing and notifications), but open-source and document everything so others could set up mirrors. The cloud architecture for a DAO might involve multiple microservices (some serverless functions for bots and alerts, a small database for off-chain member profiles, etc.), all of which can be replaced or forked by the community if needed – this ensures the DAO is not reliant on any one person’s AWS account for survival.
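Because votes and snapshot balances are public, anyone can recompute a governance result, which is one way a community can check the output of any single interface or mirror. The tally below is a minimal sketch: addresses and balances are hypothetical, and the rules (for and abstain votes count toward quorum; a proposal passes when for exceeds against) resemble OpenZeppelin's `GovernorCountingSimple` but should be treated as an assumption, since each DAO configures its own.

```python
def tally(votes: dict[str, str], balances: dict[str, int], total_supply: int,
          quorum_bps: int = 400) -> dict:
    """Token-weighted tally of one proposal.

    votes: address -> "for" | "against" | "abstain" (one entry per address)
    balances: voting power per address at the proposal's snapshot block
    quorum_bps: quorum as basis points of total supply (400 = 4%, an illustrative value)
    """
    totals = {"for": 0, "against": 0, "abstain": 0}
    for voter, choice in votes.items():
        if choice not in totals:
            raise ValueError(f"invalid choice {choice!r} from {voter}")
        totals[choice] += balances.get(voter, 0)  # no balance at the snapshot -> no weight
    quorum = total_supply * quorum_bps // 10_000
    # Assumed rules: "for" + "abstain" count toward quorum; passing needs more "for" than "against".
    quorum_reached = totals["for"] + totals["abstain"] >= quorum
    passed = quorum_reached and totals["for"] > totals["against"]
    return {**totals, "quorum": quorum, "quorum_reached": quorum_reached, "passed": passed}


balances = {"0xA": 38_000, "0xB": 15_000, "0xC": 5_000}  # hypothetical snapshot balances
votes = {"0xA": "for", "0xB": "against", "0xC": "abstain", "0xD": "for"}  # 0xD held no tokens
result = tally(votes, balances, total_supply=1_000_000)
print(result["passed"], result["quorum"])  # True 40000
```

Expressing the quorum in integer basis points keeps the calculation exact, which matters when independent parties need to reproduce the same result.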

Layer 2 and Blockchain Infrastructure Projects

Finally, for teams building Layer 2 networks, sidechains, or other blockchain infrastructure (like interoperability protocols, node networks, etc.), the considerations are a bit different because your product is the network. Here, modular blockchain architecture is usually the go-to choice, and cloud deployment often comes into play in bootstrapping and operating the network in its early stages.

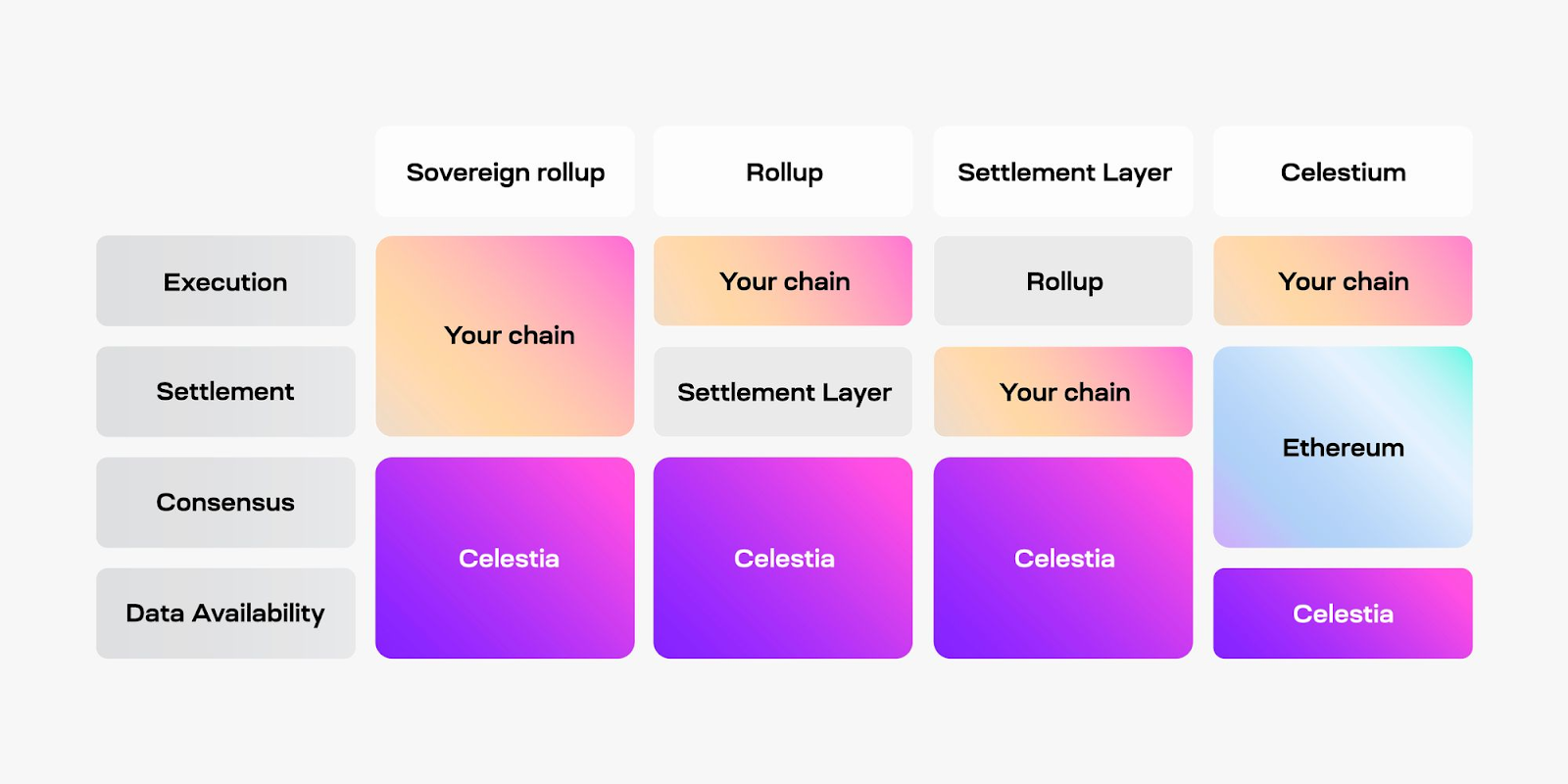

- Modular Design: As discussed, any new L1 or L2 in 2025 is likely to choose a modular path to remain competitive. For a Layer 2 specifically (like an Optimistic or ZK rollup), the architecture by definition splits execution (on the rollup nodes) from consensus and settlement (on Ethereum or another L1). If you’re building such a platform, you will design it so that the chain’s security comes from an existing robust network, allowing you to focus on execution performance. Similarly, new L1s might choose to launch as part of ecosystems like Cosmos or Polkadot to leverage shared security or at least easy interoperability.

- Cloud for Nodes and Orchestration: In early phases, many blockchain networks rely on cloud hosting for some of their critical nodes or infrastructure – even if ultimately they aim for decentralization. For example, the team behind a rollup might run the sequencer (the node that orders transactions) on a secure cloud instance or cluster to ensure high availability and low latency. Many Ethereum L2s started with a centralized sequencer run by the core team on cloud infrastructure. While not decentralized, this helped get the network off the ground. Over time, plans are usually to decentralize the sequencer or prover by allowing multiple parties or using consensus. But from an architecture perspective, the initial setup often looks like a hybrid: the blockchain nodes themselves might be geographically distributed but possibly in cloud data centers (for consistency and performance), with monitoring and devops handled via cloud tools.

- Supporting Services: A Layer 2 network needs a block explorer, monitoring, and integration with wallets/dApps. These are typically cloud-based services. For instance, block explorers (Etherscan-like services) use indexed databases to present chain data nicely – usually a centralized service (though some decentralized alternatives like Aleph or The Graph can be employed for data querying). Monitoring systems (to alert if nodes go down or if there’s a problem with block production) are often hosted in the cloud. The RPC endpoints for developers might be provided by companies (Infura, Alchemy, etc.) which run nodes on cloud hardware to serve requests at scale. All these mean even a “decentralized” new network has a lot of cloud in the mix for practicality. The key is designing the network to not depend on any single one of these services for consensus or security, so that if one fails, the network as a whole continues (it might be less convenient, but not dead).

- Interoperability and Bridges: If you’re building, say, a new Layer 2 or sidechain, you’ll also likely provide a bridge to the Layer 1 or other chains. Bridges often have off-chain components (like a server watching Chain A and then submitting transactions to Chain B). Those off-chain relays often run on cloud servers by design (though in some cases, multiple independent relayers run, which is somewhat decentralized but often still on their respective cloud setups). The trend is towards making bridging trust-minimized with smart contracts on both sides, but the message passing is usually facilitated by some nodes that need reliable connectivity – again a role for cloud or robust data center servers.

- Embracing Decentralized Infra: Projects at this layer also often showcase use of decentralized networks as part of their stack, e.g., decentralized bootstrapping: some projects distribute their genesis state or node bootstrap info via BitTorrent or IPFS, so that bringing up a new node doesn’t depend on a central server. Likewise, they might use a network like Pocket Network for RPC distribution, so that not all users hit a single company’s endpoint. These choices further reduce central points of failure.
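The off-chain relay role from the bridges bullet can be sketched as a loop that forwards source-chain messages only once they are buried under enough blocks, and never forwards the same message twice. The `Message` fields, the 12-confirmation default and the `submit` callable are assumptions; a real relayer would also persist its state and rely on the destination contract to reject replays.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    nonce: int         # assumed unique per source-chain bridge contract
    block_number: int  # source-chain block in which the message was emitted
    payload: bytes


@dataclass
class Relayer:
    """Forwards source-chain messages to a destination chain once they look final."""
    submit: Callable[[Message], None]  # hypothetical: sends the destination-chain transaction
    confirmations: int = 12            # illustrative; depends on the source chain's finality
    delivered: set[int] = field(default_factory=set)

    def poll(self, pending: list[Message], head_block: int) -> list[int]:
        relayed = []
        for msg in sorted(pending, key=lambda m: m.nonce):
            if msg.nonce in self.delivered:
                continue  # already relayed, e.g. seen again after a restart
            if head_block - msg.block_number + 1 < self.confirmations:
                continue  # too recent: a reorg could still drop or change it
            self.submit(msg)
            self.delivered.add(msg.nonce)
            relayed.append(msg.nonce)
        return relayed


sent: list[Message] = []
relayer = Relayer(submit=sent.append)
msgs = [Message(1, 100, b"lock 5"), Message(2, 110, b"lock 7")]
print(relayer.poll(msgs, head_block=115))  # [1]  (message 2 has only 6 confirmations)
print(relayer.poll(msgs, head_block=125))  # [2]  (message 1 is not sent again)
```

The local `delivered` set only saves wasted transactions; protection against double delivery should come from the destination-chain contract, which is what makes it reasonable to run several independent relayers.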

Layered modular architecture: execution rollups anchored to a DA layer like Celestia (Source: blog.celestia)

Summary for L2/Infrastructure Projects: Favor a modular blockchain architecture to leverage existing security and focus on your USP (whether it’s speed, specific functionality, privacy, etc.). Use cloud infrastructure tactically to achieve reliability and performance in the network’s early life, but design your node software to eventually be easy to run anywhere (so community validators can decentralize the network). In other words, you might start with a centralized-but-cloud-scalable operations model, but have a clear roadmap to remove trust assumptions (decentralize sequencers, open source all components, allow permissionless node running) as the platform matures. Investors and stakeholders in 2025 will ask about this plan, as the market has seen the drawbacks of “decentralization theater” and will push for truly robust architectures.
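To make the sequencer role concrete, here is a sketch of a single-operator sequencer that orders transactions first come, first served and seals them into batches, each committed to by a Merkle root that could later support inclusion proofs. The batch size, the use of SHA-256 and the tree layout (leaf and node prefixes in the style of RFC 6962) are illustrative assumptions rather than any specific rollup's format, and posting the batch to the L1 or DA layer is omitted.

```python
import hashlib
from typing import Optional


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Binary Merkle root: leaves hashed with a 0x00 prefix, inner nodes with 0x01;
    an unpaired node at the end of a level is carried up unchanged."""
    if not leaves:
        return _h(b"")
    level = [_h(b"\x00" + leaf) for leaf in leaves]
    while len(level) > 1:
        nxt = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
    return level[0]


class Sequencer:
    """Single-operator sequencer: first-come-first-served ordering, fixed-size batches."""

    def __init__(self, max_batch: int = 100):
        self.max_batch = max_batch
        self.mempool: list[bytes] = []
        self.batches: list[tuple[int, bytes, list[bytes]]] = []  # (batch number, root, txs)

    def submit(self, tx: bytes) -> None:
        self.mempool.append(tx)
        if len(self.mempool) >= self.max_batch:
            self.seal()

    def seal(self) -> Optional[bytes]:
        """Close the current batch; a real rollup would now post data and root to L1/DA."""
        if not self.mempool:
            return None
        txs, self.mempool = self.mempool[: self.max_batch], self.mempool[self.max_batch:]
        root = merkle_root(txs)
        self.batches.append((len(self.batches), root, txs))
        return root


seq = Sequencer(max_batch=2)
for tx in [b"tx-a", b"tx-b", b"tx-c"]:
    seq.submit(tx)
seq.seal()  # flush the final, partial batch
print([(n, len(txs)) for n, _, txs in seq.batches])  # [(0, 2), (1, 1)]
```

In this sketch the batch commitment does not depend on who produced the ordering, so the format could stay the same if sequencing were later handed to multiple parties.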

Conclusion

Web3 isn’t just about decentralization; it’s about resilience by design. Choosing the right cloud model can make the difference between an MVP that struggles to scale and a production-ready protocol that earns long-term trust.

By understanding how Web3 cloud architecture models compare and mapping them clearly to your project’s goals, teams can build systems that are not only faster and cheaper but also more transparent, auditable and trustworthy.

At Twendee Labs, we help Web3 companies turn technical architecture into operational excellence. From modular blockchain infrastructure to hybrid cloud deployment, our team has built systems that support millions of users, reduce latency, and stay future-ready.

Whether you’re launching your first smart contract or scaling a full ecosystem, we deliver:

- Custom DA + execution architecture for rollups

- Hybrid NFT platform backend design

- DevOps pipelines tailored for decentralized environments

▶ Learn more here: From Vision to Victory: End-to-End Web3 Development
