AI is advancing faster than ever, but as capabilities grow, control is slipping further from developers’ hands. With GPT-4o, Claude, and Gemini dominating the market, the future of AI feels increasingly gated: tied to rising API costs, cloud vendor lock-in, and opaque systems beyond user control.

Yet in the shadows of this centralization, a different current is gaining momentum. Open-source AI models like Mistral and LLaMA are redefining what’s possible without billion-dollar infrastructure. They offer a radically different promise: the ability to build smarter, cheaper, and on your own terms. This blog examines three critical questions: How open is open-source AI in practice? What are the hidden costs of relying on proprietary giants? And, most importantly, can developers still compete, not by matching scale, but by owning the stack?

I. The Rise of AI Centralization

The current AI landscape is dominated by three major players, each representing a different approach to AI supremacy. OpenAI’s GPT-4o leads in general reasoning and creativity, Anthropic’s Claude excels in safety and nuanced conversation, while Google’s Gemini leverages the company’s vast data ecosystem and integration capabilities.

This centralization didn’t happen overnight. It’s the result of massive capital investments: OpenAI’s partnership with Microsoft reportedly represents a $10 billion commitment, while Google has invested heavily in TPU infrastructure and talent acquisition. The computational requirements for training these models are staggering: GPT-4 reportedly cost over $100 million to train, requiring thousands of high-end GPUs running for months.

For developers, this centralization creates a paradox. On one hand, these models offer unprecedented capabilities through relatively simple API calls. On the other, they represent a fundamental shift in how AI capabilities are distributed. Instead of owning the technology stack, developers become dependent on external services, subject to pricing changes, usage limits, and policy modifications they can’t control.

The implications extend beyond individual projects. When a significant portion of the AI ecosystem depends on a handful of providers, we risk creating systemic vulnerabilities. Service outages, policy changes, or pricing adjustments can cascade through thousands of applications and businesses. This concentration of power raises fundamental questions about the future of AI development and whether there’s still space for independent innovation.

II. Is Open-Source AI Truly Open for Developers?

The open-source AI movement represents a compelling alternative to the centralized model, with Mistral and LLaMA leading the charge. But the question of accessibility goes deeper than licensing: it’s about whether these models can realistically compete with their proprietary counterparts in real-world applications.

1. Local Deployment and GPU Accessibility

One of the most significant advantages of open-source AI is the ability to run models locally. Mistral 7B, for instance, can run efficiently on consumer GPUs with 16GB of VRAM—hardware that’s accessible to many developers. LLaMA 2’s 7B and 13B variants offer similar accessibility, making it possible for individual developers to experiment with and deploy advanced AI capabilities without relying on cloud infrastructure.

This local deployment capability offers several advantages beyond cost savings. Developers gain complete control over their inference pipeline, can customize the model’s behavior through prompt engineering and fine-tuning, and eliminate the latency associated with API calls. For applications requiring real-time responses or operating in environments with limited internet connectivity, this local capability is invaluable.
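To make the hardware claim concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at different precisions. The parameter counts are nominal (7 and 13 billion), the bytes-per-parameter figures are the usual ones for each precision, and the estimate deliberately ignores the KV cache, activations, and framework overhead, so treat the results as a lower bound rather than a sizing guide.

```python
# Rough memory needed just to hold model weights. This ignores the KV cache,
# activations, and framework overhead, all of which add to the real total.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Nominal parameter counts; real checkpoints differ slightly.
    for name, params in [("7B", 7e9), ("13B", 13e9)]:
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{gb:.1f} GB of weights")
```

Under these assumptions a 7B model needs about 14 GB for fp16 weights, which is why a 16GB card is a plausible floor, and quantized variants leave considerably more headroom.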

2. Fine-Tuning and Customization

The open-source model ecosystem has democratized fine-tuning through techniques like LoRA (Low-Rank Adaptation) and QLoRA (Quantized LoRA). These methods allow developers to adapt pre-trained models to specific domains or tasks using relatively modest computational resources. A developer can fine-tune a Mistral model for specific industry terminology or LLaMA for particular reasoning patterns using a single high-end GPU in a matter of hours or days.

This customization capability represents a fundamental advantage over proprietary models. While GPT-4o shows superior general performance in head-to-head comparisons, a well-fine-tuned open-source model can often outperform larger proprietary models in specific domains. This specialization allows smaller teams to compete by focusing on narrow, high-value use cases rather than trying to match the broad capabilities of general-purpose models.
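Why does LoRA fit on a single GPU? A minimal sketch of the arithmetic: instead of updating a full weight matrix, LoRA trains two small low-rank matrices alongside it. The hidden size (4096) and rank (8) below are illustrative assumptions, not values taken from any particular model configuration.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds for one matrix.

    LoRA learns an update B @ A, where A has shape (rank, d_in)
    and B has shape (d_out, rank); the original weights stay frozen.
    """
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d = 4096   # hypothetical hidden size
    rank = 8   # hypothetical LoRA rank
    full = full_params(d, d)
    lora = lora_params(d, d, rank)
    print(f"full matrix: {full:,} params")
    print(f"LoRA update: {lora:,} params ({lora / full:.2%} of full)")
```

With these example numbers, the trainable update is well under one percent of the matrix it adapts, which is where the memory and time savings come from. QLoRA goes further by keeping the frozen base weights in a quantized format.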

Real-World Performance: Small Models, Big Results

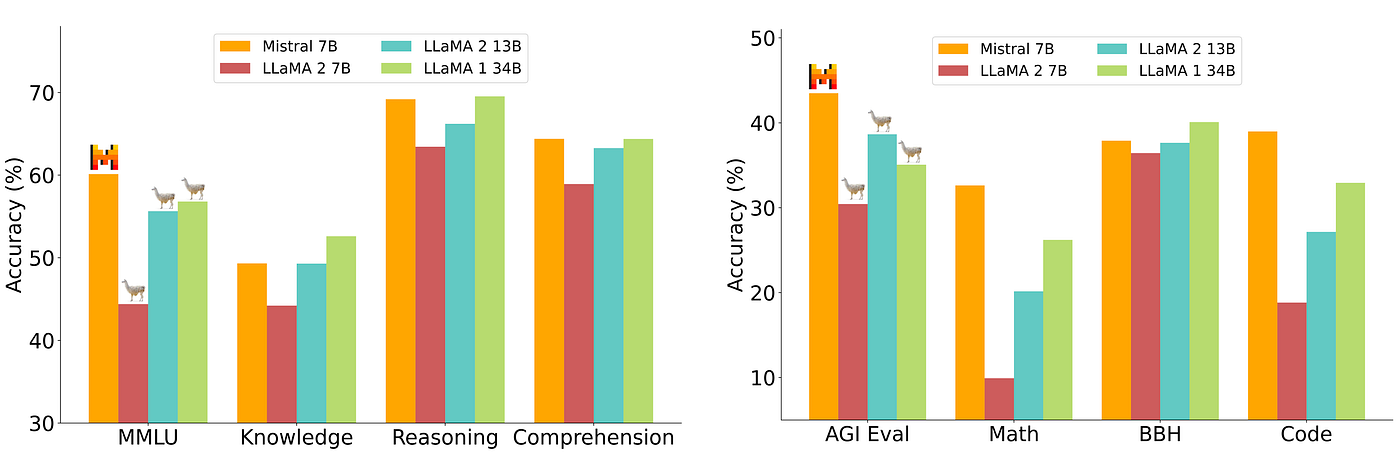

While open-source models may not match proprietary giants like GPT-4o in general-purpose reasoning, they often excel in focused tasks, and not just hypothetically. Benchmark comparisons published by Mistral show Mistral 7B consistently outperforming LLaMA 2 7B on MMLU, reasoning, comprehension, and even AGI Eval.

Despite being smaller than the larger LLaMA variants it is compared against, Mistral 7B demonstrates stronger capabilities across multiple reasoning-heavy benchmarks.

This suggests that developers don’t need scale to stay competitive; they need smart model selection, local optimization, and deep domain tuning.

Accuracy benchmark comparison of Mistral 7B and LLaMA variants across MMLU, AGI Eval, and other tasks (Source: Mistral.ai).

3. Transparency and Community Innovation

Open-source AI models offer unprecedented transparency into their architecture, training process, and capabilities. Developers can examine the code, understand the model’s limitations, and contribute to improvements. This transparency extends to benchmarks and evaluation metrics, allowing for more informed decision-making about model selection and deployment.

The community-driven innovation around open-source models has been remarkable. Projects like Ollama have simplified local deployment, while tools like Axolotl and Unsloth have streamlined the fine-tuning process. This ecosystem of supporting tools and libraries often moves faster than proprietary alternatives, driven by the collective needs of the developer community rather than corporate roadmaps.

More importantly, these innovations aren’t limited to R&D teams. They’re already being applied in real-world enterprise settings, powering localized AI tools that boost productivity and increase revenue. One such example is highlighted in Twendee’s analysis of AI-driven sales optimization for enterprises, where open-source AI plays a central role in enabling internal automation and customer personalization at scale.

III. Big Models = Big Lock-In?

The appeal of proprietary AI models comes with significant hidden costs that extend far beyond the advertised API pricing. As developers and organizations integrate these models into their core systems, they often discover that the initial simplicity masks deeper dependencies and escalating costs.

1. Scaling API Costs and Unpredictable Pricing

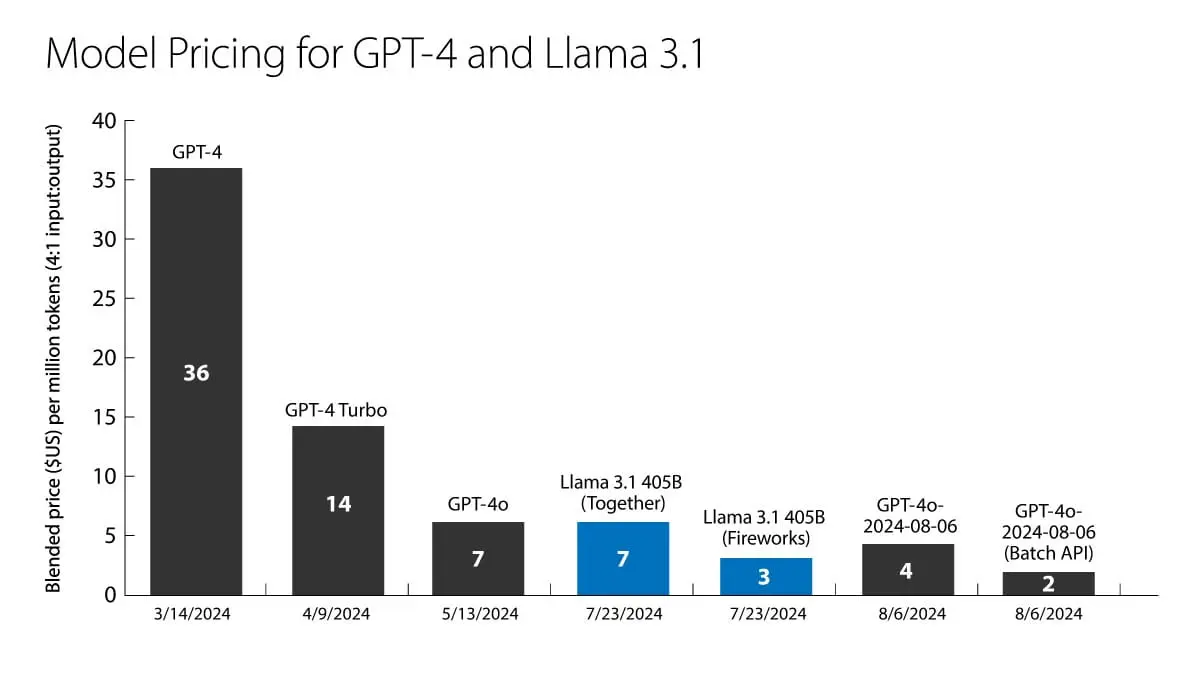

AI infrastructure costs can escalate dramatically as usage grows. A startup that begins with a few hundred dollars in monthly API costs might find itself facing thousands or tens of thousands as its user base expands. GPT-4o, Claude, and Gemini all operate on token-based pricing models that can become prohibitively expensive for high-volume applications. While models like GPT-4o have made strides in reducing costs, they remain notably pricier than some open alternatives.

Pricing comparison between GPT-4, GPT-4 Turbo, GPT-4o, and LLaMA 3.1 models as of 2024 (Source: OpenAI Dev Day, 2024)

GPT-4o reduces the blended cost to $7 per million tokens, but LLaMA 3.1 (via providers like Fireworks or Together) brings it down to between $3 and $7, and to as low as $2 in batch settings. These savings compound significantly at scale, making open models increasingly appealing for production use.
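To see how those per-million-token prices compound, here is a small sketch that turns them into a monthly bill. The prices are the blended figures quoted above; the 500 million tokens per month is a purely hypothetical workload chosen for illustration.

```python
def monthly_cost_usd(tokens_per_month: int, price_per_million_usd: float) -> float:
    """Monthly spend for a given token volume at a blended per-million price."""
    return tokens_per_month / 1_000_000 * price_per_million_usd


# Blended prices per million tokens, as quoted in the comparison above.
PRICES_USD = {
    "GPT-4o": 7.0,
    "LLaMA 3.1 (hosted, low end)": 3.0,
    "LLaMA 3.1 (batch)": 2.0,
}

if __name__ == "__main__":
    volume = 500_000_000  # hypothetical monthly token volume
    for label, price in PRICES_USD.items():
        print(f"{label}: ${monthly_cost_usd(volume, price):,.0f} per month")
```

At that assumed volume, the gap between $7 and $2 per million tokens is the difference between $3,500 and $1,000 a month, and it widens linearly as traffic grows.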

The unpredictability of these costs creates planning challenges for businesses. Unlike traditional software licensing, where costs are predictable and scale linearly with usage, AI API costs can spike unexpectedly based on user behavior, prompt complexity, or changes in the underlying model architecture. This variability makes it difficult to accurately forecast expenses and can lead to unexpected budget overruns.

2. Loss of Control and Vendor Dependencies

When developers build on proprietary AI models, they surrender control over critical aspects of their technology stack. Model updates can change behavior unexpectedly, potentially breaking existing applications or altering the user experience. Content filtering policies can evolve, potentially blocking legitimate use cases. Rate limits and usage restrictions can throttle applications during peak demand periods.

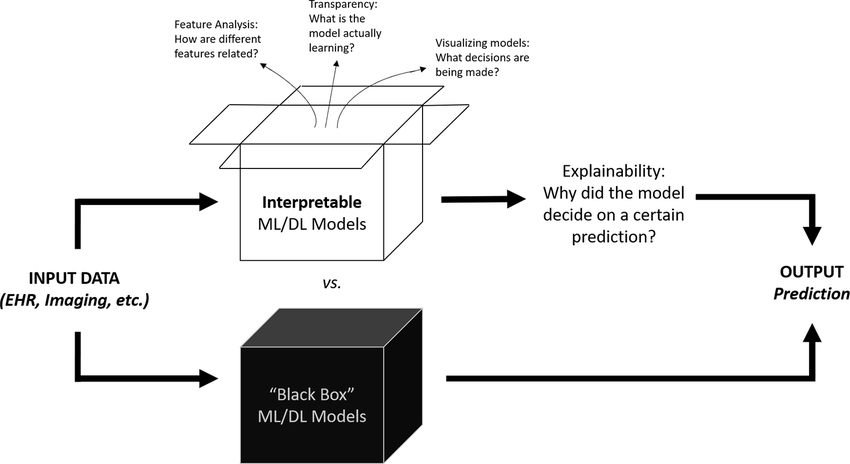

This lack of control extends to the fundamental capabilities of the models themselves. Developers cannot modify the underlying behavior, adjust the training data, or optimize the model for their specific use case. They’re essentially building on a black box that can change without notice, creating significant technical debt and business risk.

Visualizing the lack of transparency in proprietary AI systems (Source: [referenced study])

3. Infrastructure Dependencies and Cloud Vendor Lock-In

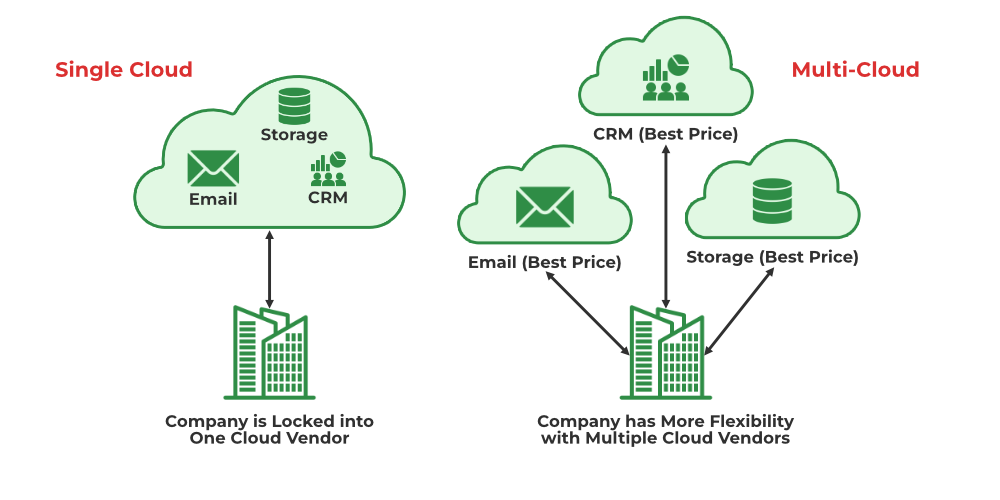

The major AI models are deeply integrated with specific cloud platforms. GPT-4o is tightly coupled with Microsoft Azure, while Gemini is integrated with Google Cloud Platform. This creates additional layers of dependency that extend beyond the AI model itself to encompass the entire infrastructure stack.

These dependencies can limit deployment options, increase costs, and create additional compliance challenges. Organizations that have standardized on a particular cloud provider might find themselves forced to maintain multi-cloud environments to access the best AI capabilities, increasing complexity and operational overhead.

Cloud vendor lock-in limits flexibility and raises long-term costs (Source: [referenced diagram])

IV. Who Can Still Compete and How?

Despite the dominance of proprietary models, there are numerous opportunities for developers to compete effectively using open-source AI. Success requires understanding where open-source models excel and how to leverage their unique advantages.

1. Solo Developers: The Ollama Advantage

Individual developers can compete by leveraging tools like Ollama to run Mistral models locally. This approach offers several advantages: zero ongoing API costs, complete control over the model behavior, and the ability to customize and fine-tune for specific use cases. A solo developer building a specialized code analysis tool, for instance, can fine-tune a Mistral model on relevant codebases and achieve performance that rivals or exceeds general-purpose models for their specific domain.

The key to success at this level is specialization. Rather than trying to build a general-purpose AI assistant, successful solo developers focus on narrow, high-value problems where domain expertise and customization can overcome the raw capability advantages of larger models. This might involve building AI tools for specific industries, creating specialized content generation systems, or developing AI-powered automation for particular workflows.
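For the local Ollama setup described in this section, a minimal sketch of calling a locally served model from Python might look like the following. It assumes Ollama is running on its default local port and that a model tagged `mistral` has already been pulled; the helper names `build_request` and `generate` are made up for this example, and only the standard library is used.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With streaming disabled, the full completion is in the "response" field.
    return body["response"]
```

With the server running, `generate("Explain what this function does: def add(a, b): return a + b")` returns the model’s answer as a string. Nothing leaves the machine, and there is no per-token bill, which is the whole point of the approach.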

2. AI Startups: Fine-Tuning for Competitive Advantage

Startups can compete by leveraging the fine-tuning capabilities of open-source models to create specialized AI solutions. LLaMA’s architecture makes it particularly well-suited for domain-specific fine-tuning, allowing startups to create AI models that excel in particular areas such as legal document analysis, medical diagnosis assistance, or financial modeling.

This approach allows startups to compete on quality rather than scale. A startup focused on legal AI can fine-tune LLaMA on legal documents and case law, potentially achieving better performance than GPT-4o for legal tasks despite the model’s smaller size. This specialization, combined with the cost advantages of open-source models, can create sustainable competitive advantages.

3. SMBs: Compliance and Privacy as Differentiators

Small and medium businesses can compete by addressing compliance and privacy requirements that are difficult to meet with proprietary models. For organizations dealing with sensitive data, whether due to GDPR requirements, HIPAA compliance, or industry-specific regulations, using AI models often requires on-premises deployment and complete data control.

Open-source models excel in these scenarios because they can be deployed entirely within an organization’s infrastructure, ensuring that sensitive data never leaves the corporate environment. This capability is particularly valuable in industries like healthcare, finance, and legal services, where data privacy and compliance requirements often outweigh the performance advantages of cloud-based models.

Conclusion

The AI landscape is becoming increasingly centralized, with GPT-4o, Claude, and Gemini claiming dominance in the general-purpose market. But this concentration of power leaves open a critical opportunity for developers, startups, and businesses that are willing to rethink the game entirely.

Open-source AI isn’t about matching scale. It’s about reclaiming control over infrastructure, cost, customization, and long-term direction. Models like Mistral and LLaMA aren’t just technical alternatives; they represent a shift toward autonomy and specialization. The developers who thrive in this new era will be those who build narrow, high-impact systems, optimized for their domain, deeply embedded in customer workflows, and free from external constraints.

The monopolization of AI is real, but it’s not inevitable. The future belongs to those who own their stack, control their architecture, and move faster than centralized incumbents can react. That’s not just possible; it’s already happening.

At Twendee Labs, we help forward-thinking teams apply open-source AI where it matters most, from low-latency deployment to domain-specific fine-tuning and internal automation. If you’re ready to compete differently, not by chasing the biggest model but by building the smartest system, we’re here to help you lead the way.

The next AI advantage won’t be about who has more power, but who has more control. And that starts with the decision to build on your terms. Follow our insights on X and LinkedIn.