“AI regulation” has become one of the most pressing debates of our time. As artificial intelligence permeates everything from healthcare and finance to education and transportation, experts and policymakers worldwide are grappling with a critical question: Should we regulate AI with the same rigor as medicine (with strict pre-approval trials and safety checks), or treat it more like software (with agile updates and industry-led standards)?

This global debate is far from academic – it will shape how AI innovation unfolds and how society harnesses (and controls) AI’s immense potential. In this article, we’ll explore both sides of the argument, examine sector-specific challenges (especially in healthcare, finance, and education), and outline emerging ethical AI frameworks and AI safety standards that aim to strike a balance.

This image shows the latest NIST standards for AI risk management, ensuring the safety and reliability of AI systems (Source: VLC Solutions)

The Global Debate on AI Regulation

As artificial intelligence becomes more powerful and integrated into critical areas of life, the global conversation around AI regulation is intensifying. In countries like the UK, some leaders believe AI should be governed with the same rigor as medicine or nuclear energy, especially when it’s used in high-stakes domains like healthcare, finance, or autonomous vehicles. They argue that without proper oversight, the risks of harm, from algorithmic bias to life-threatening errors, are too great to ignore.

However, many experts in the tech industry push back, warning that overly strict regulations could hinder progress. AI, like much of modern software, thrives on rapid iteration and real-world testing. Requiring lengthy approvals, as in the pharmaceutical industry, might slow innovation to a crawl and prevent society from reaping the enormous benefits AI can offer.

To reconcile these views, some propose a risk-based regulatory approach: not all AI systems need the same level of control. Instead, oversight should depend on how and where AI is used. This middle-ground thinking has shaped policies like the EU AI Act, which imposes lighter rules for low-risk applications and stricter obligations for high-impact use cases like credit scoring or medical diagnosis. It’s a sign that while the debate continues, a more nuanced path forward is beginning to emerge.

AI in Healthcare (Medical-Grade Regulation)

When it comes to healthcare, the argument for treating AI “like medicine” is compelling. In this domain, AI systems from diagnostic algorithms to surgical robots directly impact patient lives. A faulty medical AI can cause injury or death, just as an unsafe drug or device can. Not surprisingly, regulators view many healthcare AIs as “Software as a Medical Device” (SaMD), meaning they fall under the purview of health authorities like the U.S. FDA and must meet similar safety and efficacy standards.

In fact, the EU’s landmark Artificial Intelligence Act explicitly classifies AI used for medical purposes as “high-risk,” requiring developers to implement risk mitigation, use high-quality training data, ensure human oversight, and undergo conformity assessments before such AI systems reach patients. This mirrors how a new medical device or therapy would be vetted, with rigorous checks to protect patient safety.

Healthcare regulators are adapting to AI’s evolving nature. Unlike static medical devices, AI models can update over time, prompting the FDA to propose “predetermined change control plans” that allow safe, ongoing improvements without restarting the entire approval process. The aim: balance medical-grade rigor with software-like agility.

Patients and doctors must be able to trust AI just as they trust a drug or diagnostic device. A misdiagnosis by AI could be as dangerous as a faulty implant. That trust rests on three questions:

- Data privacy: Are sensitive health records protected?

- Bias mitigation: Does the system work equally well across populations?

- Accountability: Who is liable if something goes wrong?

The World Health Organization emphasized in 2023 that strong regulatory oversight is essential before any AI tool enters mainstream clinical use, including mechanisms for liability and patient redress if AI causes harm.

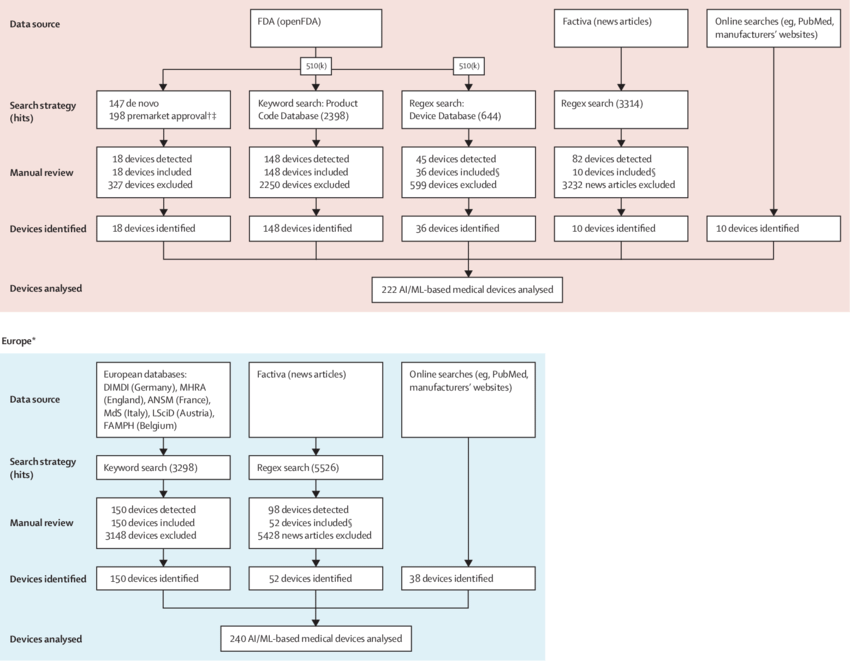

Regulatory lifecycle of AI in healthcare (SaMD), showing key steps like risk classification, data assurance, and oversight by bodies like the FDA and EU. (Source: ResearchGate)

In healthcare, lives are at stake, so AI solutions rightly face high bars for approval and monitoring. The process is becoming nearly as stringent as that for new medical treatments. But what about other fields like finance?

AI in Finance: Compliance, Risk and Trust

While finance doesn’t deal with life-and-death scenarios, it involves people’s livelihoods, making it a critical area for AI regulation. Banks and fintech firms are rapidly adopting AI for credit scoring, trading, fraud detection, and customer service, but each of these uses carries significant risks, such as bias, misinformation, or market instability.

Like healthcare, finance already has strong compliance frameworks, and regulators are extending these to AI:

- Anti-discrimination laws like ECOA and FCRA apply to AI in lending and credit, so biased algorithms could be illegal.

- Financial regulators require transparency, fairness, and auditability of AI systems.

- Data privacy rules (e.g., GDPR) govern how financial AI handles customer information.

- The EU AI Act classifies some finance-related AI (like credit risk tools) as “high-risk,” demanding documentation and human oversight.
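To make the fairness and auditability points above concrete, here is a minimal Python sketch of one check a lender might run on its own decisions: compare approval rates across groups and flag large gaps for human review. The function names, the toy data, and the 0.8 review threshold are all assumptions for illustration. The threshold is a hypothetical internal trigger, not a test taken from ECOA, FCRA, GDPR, or the EU AI Act.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest group approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any approvals, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, was the loan approved?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

REVIEW_THRESHOLD = 0.8  # assumed internal trigger, not a legal standard

rates = approval_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
```

A low ratio does not prove unlawful discrimination, and a high one does not rule it out. A check like this is best read as a prompt for a documented human review, which is also what makes the system auditable.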

A unique twist: some firms use AI to monitor compliance with regulations. In effect, AI is policing AI.

Key regulatory considerations for AI in healthcare: balancing innovation with safety (Source: Gartner)

Unlike the healthcare model, most financial AI systems don’t need pre-approval before deployment. The industry follows a “deploy first, regulate later” model, but with strict consequences if the AI causes harm or breaks the rules. In 2023, global financial firms faced $6.6B in fines for compliance failures, reinforcing the high stakes.

Looking ahead, expect tighter oversight with a focus on:

- Bias mitigation and explainability

- Clear documentation and accountability

- Aligning AI innovation with consumer trust and financial stability

For leaders in finance, regulation isn’t just red tape; it’s a foundation for long-term trust.

AI in Education: Low Stakes or Future Regulation Frontier?

AI is gaining traction in classrooms, from automated grading and tutoring systems to admissions and proctoring tools. While it doesn’t yet face strict regulation, its impact can still be life-changing:

- A biased admissions algorithm could alter a student’s future.

- Faulty grading tools may unfairly affect academic progress.

- AI proctoring raises serious privacy and ethics questions.

Currently, most education AI tools operate under general laws like GDPR or FERPA, with no dedicated regulatory body or pre-approval requirements. The approach is still largely “treating AI like software.”

But change is coming:

- The EU AI Act classifies grading and assessment tools as “high-risk”, triggering stronger compliance requirements.

- Local U.S. school districts are already experimenting with AI policies.

- Global conversations are focusing on transparency, bias, and the rights of parents to understand how AI systems affect students.

In the absence of hard laws, many schools and ed-tech firms are turning to ethical frameworks, emphasizing:

- Explainability: AI decisions should be understandable and challengeable by teachers and students.

- Human-in-the-loop: AI should support, not replace, educators.

- Student protection: Tools must respect age, privacy, and fairness.
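As a rough illustration of the explainability and human-in-the-loop principles above, the Python sketch below routes every AI-suggested grade to a teacher and never releases one automatically. The `GradeSuggestion` fields, the `route` function, and the 0.9 confidence cutoff are hypothetical choices for this example, not features of any particular ed-tech product.

```python
from dataclasses import dataclass


@dataclass
class GradeSuggestion:
    student_id: str
    score: float       # score proposed by the AI tool, 0 to 100
    confidence: float  # tool's self-reported confidence, 0 to 1
    rationale: str     # short explanation shown to teacher and student


def route(suggestion, min_confidence=0.9):
    """Decide how a teacher handles the suggestion; nothing is auto-released."""
    if not suggestion.rationale.strip():
        # No explanation means the result cannot be challenged, so discard it.
        return "teacher_regrade"
    if suggestion.confidence < min_confidence:
        return "teacher_regrade"
    # The teacher still signs off, starting from the pre-filled score.
    return "teacher_confirm"


confident = GradeSuggestion("s1", 82.0, 0.95, "Meets rubric items 1 to 3")
uncertain = GradeSuggestion("s2", 55.0, 0.60, "Partial match to rubric")
unexplained = GradeSuggestion("s3", 90.0, 0.99, "")

outcomes = [route(s) for s in (confident, uncertain, unexplained)]
```

The design choice worth noting is that both outcomes end with a teacher: the AI output only changes how much work the teacher starts with, not who makes the decision.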

Core principles for ethical AI in education: fairness, transparency, data protection, and human oversight (Source: EDDS Institute)

Education may not be the first frontier of AI regulation, but as AI becomes more embedded in shaping young minds, it won’t remain lightly regulated for long. Proactive ethical design today may prevent heavy regulation tomorrow.

Software-Style Agile Innovation vs. Hard Regulatory Compliance

The debate over AI regulation centers on whether to approach it like medicine or software. Regulating AI like medicine means strict pre-market evaluation, certifications, and ongoing oversight to ensure safety and efficacy, similar to the process for drugs or medical devices. This model emphasizes caution, professional accountability, and harm prevention, particularly in high-risk sectors like healthcare, finance, and autonomous driving. In contrast, regulating AI like software allows for quicker innovation, with issues addressed post-deployment via updates. This model prioritizes flexibility, with industry standards guiding development, while governments step in only when major problems arise, such as data breaches or fraud.

Proponents of the software model argue that AI evolves too quickly for rigid regulations, pointing to generative AI models that have advanced faster than regulators can respond. They advocate for frameworks like voluntary ethical AI principles and self-regulation through industry sandboxes, allowing rapid development while ensuring safety. However, advocates of medicine-style regulation counter that AI’s potential for catastrophic harm (e.g., in healthcare or finance) warrants proactive controls, including pre-market validation, similar to drug approval processes. A hybrid approach is emerging, with high-risk AI (in healthcare, finance) subject to stricter controls, while low-risk AI applications enjoy lighter regulation, as seen in the EU AI Act.

For businesses, understanding the risk level of AI products is crucial. High-risk AI, such as medical diagnostics or financial algorithms, requires thorough compliance planning from day one, including audits and bias mitigation. On the other hand, low-risk AI applications like chatbots may be developed with more freedom, but still need to adhere to basic safety standards and privacy laws.
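One way a product team might put this into practice is a simple triage step at project kickoff. The Python sketch below is a minimal illustration under stated assumptions: the use-case labels, tiers, and checklist items are drawn from the examples in this article and are hypothetical, not the legal categories or obligations of the EU AI Act or any other law.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LOW = "low"
    NEEDS_REVIEW = "needs_review"


# Assumed labels, based on the examples named in this article.
HIGH_RISK_USES = {"medical_diagnosis", "credit_scoring", "student_assessment"}
LOW_RISK_USES = {"customer_chatbot"}

BASELINE = ["privacy_law_review", "basic_safety_testing"]
HIGH_RISK_EXTRAS = [
    "risk_mitigation_plan",
    "training_data_quality_review",
    "bias_audit",
    "human_oversight_design",
    "documentation_for_assessment",
]


def triage(use_case):
    """Return (tier, checklist) for a use-case label."""
    checklist = list(BASELINE)
    if use_case in HIGH_RISK_USES:
        return RiskTier.HIGH, checklist + HIGH_RISK_EXTRAS
    if use_case in LOW_RISK_USES:
        return RiskTier.LOW, checklist
    # Unknown uses are not assumed to be low risk; they go to a human first.
    return RiskTier.NEEDS_REVIEW, checklist + ["legal_classification_review"]


credit_tier, credit_checklist = triage("credit_scoring")
chatbot_tier, chatbot_checklist = triage("customer_chatbot")
unknown_tier, unknown_checklist = triage("resume_screening")
```

Anything the lookup does not recognize lands in a review tier rather than defaulting to low risk, which reflects the article's point that compliance planning should start on day one.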

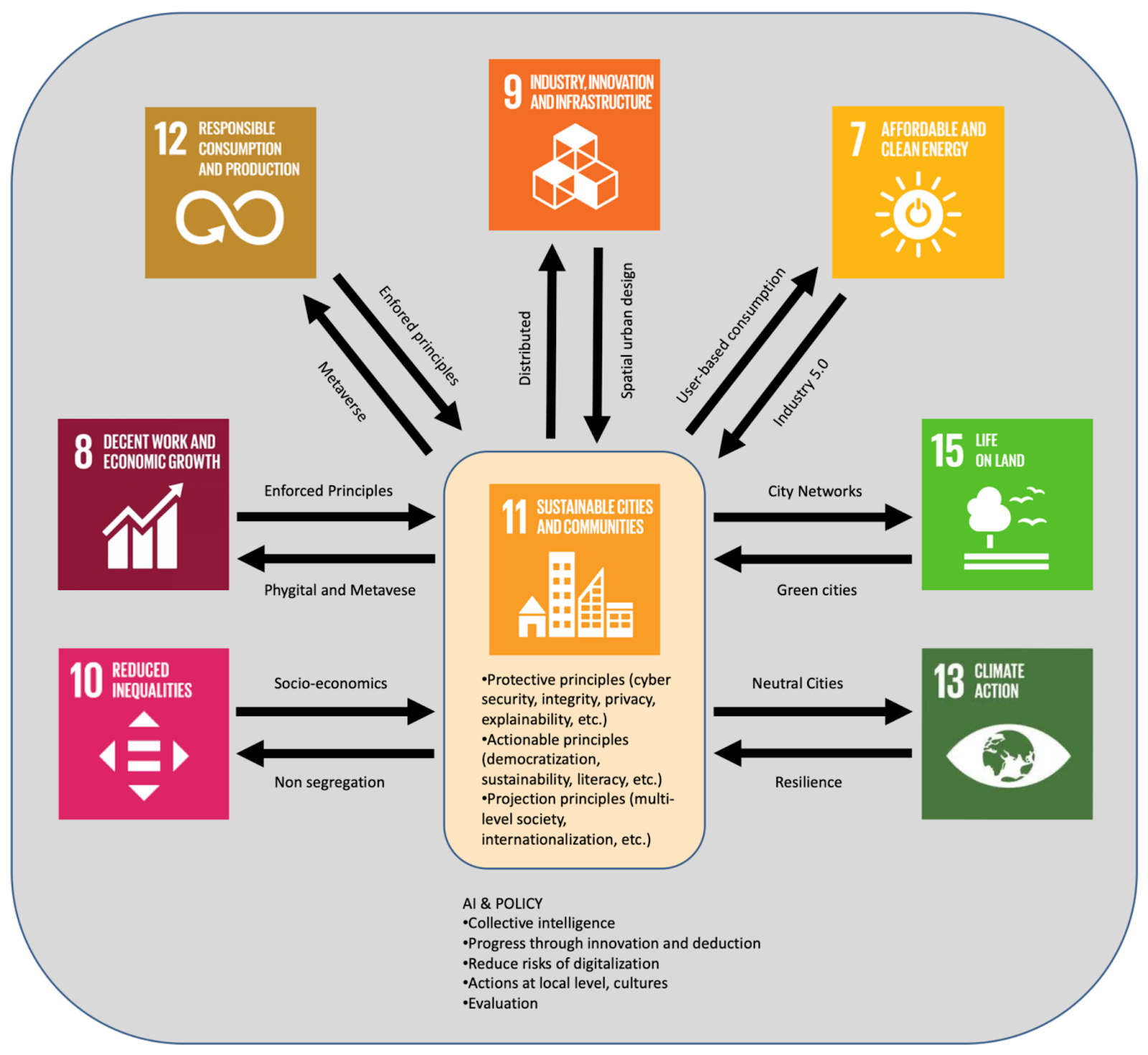

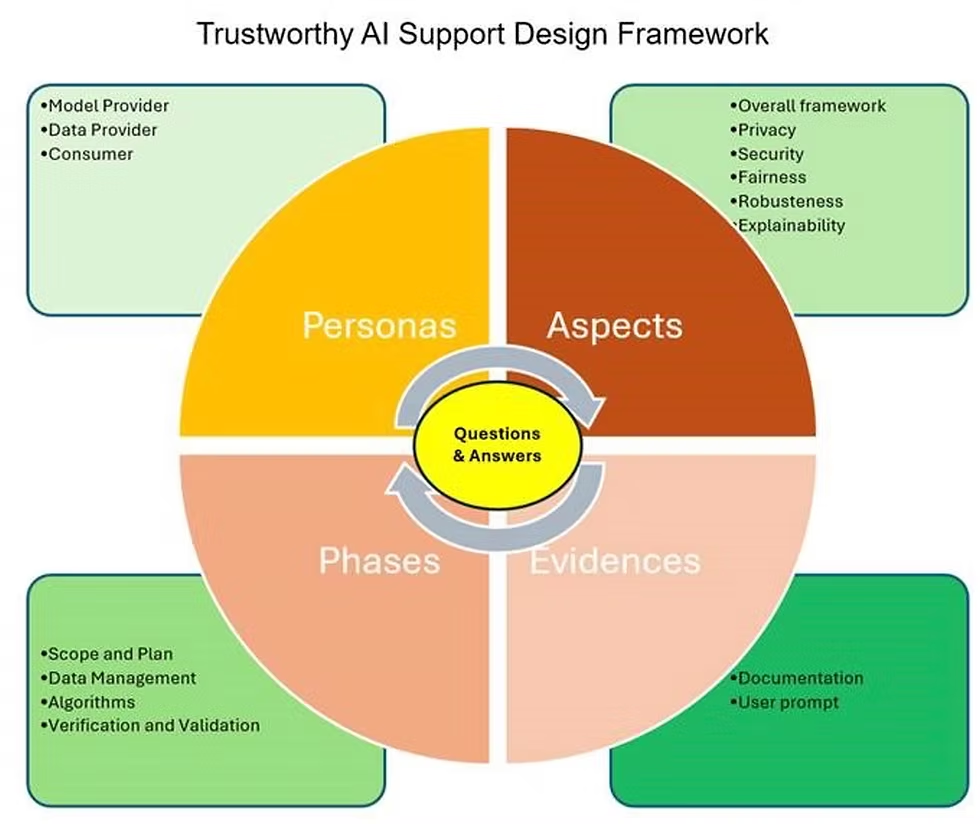

Ethical AI Frameworks & Safety Standards

In addition to regulatory frameworks, there’s a growing focus on ethical AI standards to guide responsible AI development. Corporate principles (from companies like Google and Microsoft) emphasize fairness, transparency, and accountability, setting internal standards for AI projects. Meanwhile, standards bodies like IEEE and ISO propose technical guidelines, while organizations like NIST provide risk management frameworks to help companies identify and mitigate AI risks. These frameworks help businesses maintain trust, preempt stricter regulations, and ensure responsible AI deployment.

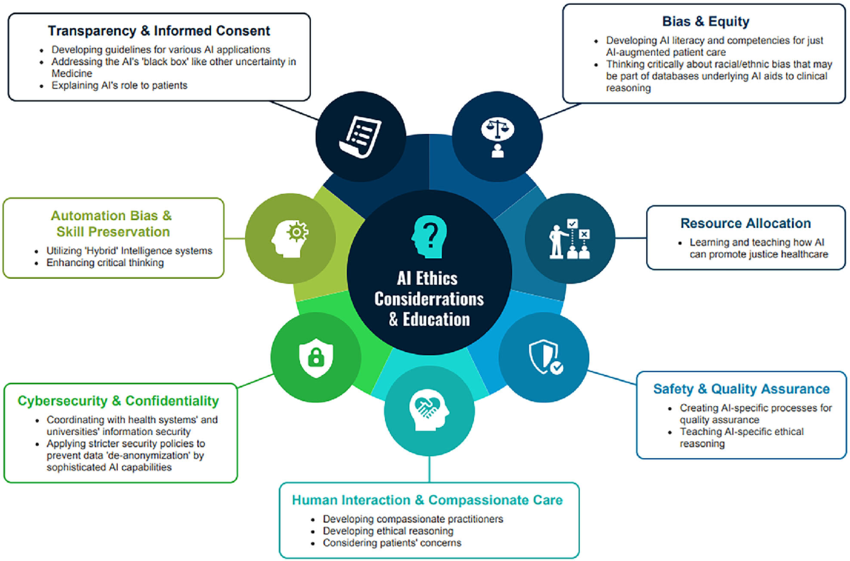

This diagram outlines the core principles of AI ethics frameworks, including fairness, transparency, and accountability. (Source: MNP Digital)

Adopting these frameworks is increasingly seen as a competitive advantage for tech firms, helping to build consumer and regulator confidence. For businesses, aligning with these ethical AI principles is not just about compliance; it’s a strategic step toward becoming trusted leaders in the AI space.

Conclusion

AI is entering a new era of regulation. Global laws and ethical standards are emerging rapidly, pushing organizations to integrate compliance and responsibility into their AI strategies from the start. The core challenge is not choosing between “medicine-style” and “software-style” regulation, but combining caution with innovation.

High-impact AI (like in healthcare and finance) will face stricter oversight, while lower-risk tools can still evolve with agility. Smart regulation doesn’t hinder innovation; it builds public trust and paves the way for safer, broader adoption.

At Twendee, we specialize in building AI and blockchain systems that balance cutting-edge innovation with regulatory compliance. From automated workflows to secure analytics, we help businesses deploy AI that’s scalable, auditable, and future-ready.
