Despite record AI investments and soaring expectations, many enterprises are already finding their strategies falling short. Models are deployed, dashboards are built, but outcomes remain underwhelming. Why? Because the core problem isn’t the technology. It’s how AI implementation is being approached.

While the hype promises transformation, the reality is a growing list of stalled initiatives, siloed pilots, and tools that never make it past the proof-of-concept stage. This post examines why most AI strategies break down and what to do differently.

When AI Implementation Ignores Workflow, Results Fall Flat

Many organizations treat AI implementation as a technology overlay: plug it into an existing system, automate a few processes, and expect transformation. But workflows aren't neutral plumbing; they're loaded with context, decisions, exceptions, and human nuance. When AI is introduced without redesigning these layers, the results are often cosmetic at best.

Let’s break it down:

- Legacy workflows aren’t ready for intelligent systems. AI thrives on structured input, clear feedback loops, and consistent application logic. But many enterprise workflows involve fragmented tools, redundant approvals, and human patchwork, all of which disrupt automation.

- AI integration without operational context creates friction. For instance, an AI that flags risks in procurement might be technically accurate, but if procurement officers don't understand the model's reasoning, or if the review loop still requires manual overrides, the system slows everyone down instead of helping.

- Team misalignment breeds distrust. When models are deployed without frontline involvement, employees often see them as “black box” mandates. As noted in Dexian’s 2024 report, “AI adoption stalls when people don’t see how the system makes their work easier or safer.”

Case in point: IBM Watson in healthcare

At MD Anderson, Watson failed to deliver usable treatment recommendations due to poor alignment with clinical workflows and limited real-world training data. Doctors could not integrate the system into daily routines, and trust deteriorated. Despite years of investment, the $60M project was shelved.

Watson for Oncology interface analyzing medical records (Source: Screen capture via IBM)

This example underscores a key lesson: AI is not a productivity layer unless it connects to real decisions, roles, and outcomes. Workflow readiness, not model complexity, is the more common barrier to success.

What Works: AI Implementation That Solves Real Pain Points

To succeed, AI must do more than exist; it must solve the right problem at the right touchpoint. The difference between a failing AI pilot and a high-impact deployment often comes down to one question: Was this built to remove friction or just to demonstrate capability?

Here’s what defines a high-functioning AI implementation:

1. Focusing on Specific Operational Friction Points

Instead of asking what AI can do, successful teams begin with what is slowing them down. These friction points are often hidden in everyday tasks such as duplicated work, long approval cycles, or data re-entry between systems.

For example, a global logistics company identified invoice reconciliation as a major operational bottleneck. The process required manual cross-checking between purchase orders, supplier formats, and local tax codes. Rather than deploying a broad AI system, the company implemented a focused model to extract and validate invoice data, sending only exceptions for human review. This led to a 60% drop in resolution time and improved vendor relationships without changing the entire stack.
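To make the "exceptions only" pattern concrete, here is a minimal sketch of the validation and routing step, assuming the invoice fields have already been pulled out by an upstream extraction model. The record types, field names, tax table, and tolerance are hypothetical illustrations, not the logistics company's actual system: invoices that match their purchase order and the local tax rate pass straight through, and everything else lands in a human review queue with the reasons attached.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical record types; field names are illustrative assumptions.
@dataclass(frozen=True)
class PurchaseOrder:
    po_number: str
    supplier_id: str
    net_amount: Decimal

@dataclass(frozen=True)
class ExtractedInvoice:
    # Assumed to be produced by an upstream extraction model.
    invoice_id: str
    po_number: str
    supplier_id: str
    net_amount: Decimal
    tax_amount: Decimal
    country: str

# Assumed standard tax rates per country code (illustrative only).
TAX_RATES = {"DE": Decimal("0.19"), "FR": Decimal("0.20"), "NL": Decimal("0.21")}
AMOUNT_TOLERANCE = Decimal("0.01")  # tolerate one-cent rounding differences

def validate(invoice: ExtractedInvoice, purchase_orders: dict) -> list:
    """Return a list of issues; an empty list means the invoice passes."""
    po = purchase_orders.get(invoice.po_number)
    if po is None:
        return [f"unknown PO {invoice.po_number}"]
    issues = []
    if po.supplier_id != invoice.supplier_id:
        issues.append("supplier mismatch")
    if abs(po.net_amount - invoice.net_amount) > AMOUNT_TOLERANCE:
        issues.append("net amount differs from PO")
    rate = TAX_RATES.get(invoice.country)
    if rate is None:
        issues.append(f"no tax rule for {invoice.country}")
    elif abs(invoice.net_amount * rate - invoice.tax_amount) > AMOUNT_TOLERANCE:
        issues.append("tax amount does not match local rate")
    return issues

def route(invoices: list, purchase_orders: dict) -> tuple:
    """Split invoices into auto-approved IDs and exceptions for human review."""
    approved, exceptions = [], []
    for inv in invoices:
        issues = validate(inv, purchase_orders)
        if issues:
            exceptions.append((inv.invoice_id, issues))
        else:
            approved.append(inv.invoice_id)
    return approved, exceptions

pos = {"PO-1": PurchaseOrder("PO-1", "S-9", Decimal("100.00"))}
invoices = [
    ExtractedInvoice("INV-1", "PO-1", "S-9", Decimal("100.00"), Decimal("19.00"), "DE"),
    ExtractedInvoice("INV-2", "PO-1", "S-9", Decimal("100.00"), Decimal("21.00"), "DE"),
]
approved, exceptions = route(invoices, pos)
# approved == ["INV-1"]
# exceptions == [("INV-2", ["tax amount does not match local rate"])]
```

The design choice worth noting is that the model never approves anything on its own judgment: its output is checked against records the business already trusts, and people only see the cases where those checks fail.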

This type of targeted AI implementation often drives more value than broad, complex initiatives because it solves real problems inside systems that already matter. It also reflects a growing shift in how success is evaluated: venture firms, for example, increasingly assess a company's ability to define and automate its internal workflows.

2. Embedding AI into Business Logic and Decision Triggers

An accurate model that is disconnected from operational flow often leads to confusion rather than efficiency. For AI to deliver impact, it must operate within the logic of how work already happens, including compliance steps, handoff points, and internal policies.

High-value use cases tend to place AI inside existing decision points, not in separate dashboards. A churn prediction model, for instance, creates more impact if it initiates a customer success sequence or adjusts a contract offer in real time. Similarly, internal tools such as code assistants are more trusted when integrated into pull request workflows instead of acting after changes are merged.

AI performs best when it augments clear moments of choice and is accountable to existing rules, not when it adds parallel logic to already complex systems.
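As an illustration of placing a model inside an existing decision point, the sketch below turns a churn score into one of a few concrete actions, but only after existing business rules have had their say. Everything here is a hypothetical assumption: the `Account` fields, the thresholds, and the action names stand in for whatever a real customer success team already uses, and the churn score is assumed to come from a separately trained model.

```python
from dataclasses import dataclass
from enum import Enum

class Action(Enum):
    NONE = "none"
    START_SUCCESS_SEQUENCE = "start_customer_success_sequence"
    OFFER_RENEWAL_DISCOUNT = "offer_renewal_discount"

@dataclass
class Account:
    account_id: str
    days_to_renewal: int
    in_billing_dispute: bool
    churn_risk: float  # assumed output of an upstream churn model, in [0, 1]

# Illustrative thresholds; a real team would take these from its own policies.
RISK_THRESHOLD = 0.6
DISCOUNT_WINDOW_DAYS = 30

def decide(account: Account) -> Action:
    """Map a churn score to an action, routed through existing business rules."""
    if account.churn_risk < RISK_THRESHOLD:
        return Action.NONE
    # Existing policy overrides the model: disputed accounts go to people, not offers.
    if account.in_billing_dispute:
        return Action.START_SUCCESS_SEQUENCE
    # Contract offers only make sense close to the renewal date.
    if account.days_to_renewal <= DISCOUNT_WINDOW_DAYS:
        return Action.OFFER_RENEWAL_DISCOUNT
    return Action.START_SUCCESS_SEQUENCE
```

Because `decide` returns an action rather than a score, it can be called from the same place a renewal or support workflow already branches, instead of living on a separate dashboard that someone has to remember to check.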

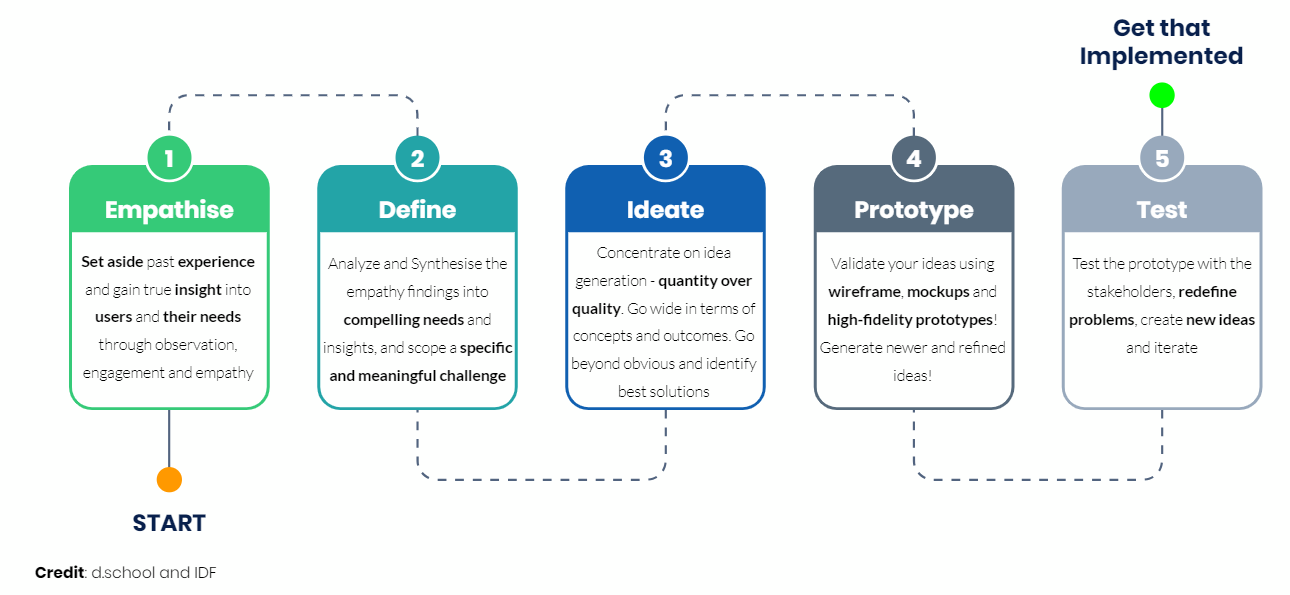

3. Prioritizing End-User Involvement in AI Design

AI that feels imposed is often ignored. Adoption improves significantly when teams help shape how and when AI shows up in their workflow.

User-centered design process for iterative solution testing (Source: d.school and IDF)

This is not just about interface design. It requires alignment with how users make decisions. High-trust implementations often begin with assistive roles, allowing users to compare model suggestions with their own actions before enabling automation. This staged approach builds familiarity, highlights edge cases early, and reduces resistance.

Pushing AI too quickly into critical workflows often backfires. Effective strategies introduce AI through low-friction tasks and let it prove its value over time.
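One way to run the assistive stage is in "shadow mode": the model suggests, the user decides, and both are logged side by side. The minimal sketch below assumes suggestions and decisions can be compared as simple labels; the class name, the gate function, and the default thresholds (200 cases, 95% agreement) are hypothetical choices for illustration, not a recommended standard.

```python
from dataclasses import dataclass, field

@dataclass
class ShadowLog:
    """Assistive stage: the model suggests, the user decides, both are recorded."""
    records: list[tuple[str, str, str]] = field(default_factory=list)

    def record(self, case_id: str, suggestion: str, user_decision: str) -> None:
        self.records.append((case_id, suggestion, user_decision))

    def agreement_rate(self) -> float:
        if not self.records:
            return 0.0
        matches = sum(1 for _, s, d in self.records if s == d)
        return matches / len(self.records)

    def edge_cases(self) -> list[str]:
        """Cases where user and model disagreed: material to review with the team."""
        return [cid for cid, s, d in self.records if s != d]

def ready_to_automate(log: ShadowLog, min_cases: int = 200,
                      min_agreement: float = 0.95) -> bool:
    # Illustrative gate: enough observed cases AND high agreement with users.
    return len(log.records) >= min_cases and log.agreement_rate() >= min_agreement

log = ShadowLog()
log.record("T-1", "approve", "approve")
log.record("T-2", "reject", "approve")
log.record("T-3", "approve", "approve")
```

The disagreement list matters as much as the agreement rate: it is where edge cases surface early, and reviewing it with the people doing the work is part of how trust is built before automation is switched on.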

The Fix: Internal Use Cases, Adoption Culture, and the Right Tools

Fixing a broken AI strategy does not start with new models. It starts with designing smarter implementations around internal workflows, trust-building, and sustainable tooling. The most resilient organizations adopt AI in three interconnected ways:

1. Start with Internal Use Cases That Are Low-Risk but High-Friction

Internal workflows are the safest and most telling environments to test AI. These are areas where teams lose time due to manual handling, duplicated inputs, or information retrieval, not because the work is hard but because the system is inefficient.

A strong example comes from UiPath, which implemented AI to automate internal document processing tasks such as invoice sorting and employee onboarding. The AI system reduced manual document handling time by 30% and freed up thousands of hours annually. These early internal wins gave the company the insight and confidence to extend automation across its customer-facing tools.

UiPath invoice automation interface with validation overlay (Source: UiPath)

Internal use cases are easier to monitor, adjust, and scale. They also allow technical teams to improve data governance and experiment without immediate customer risk.

2. Treat Adoption as an Organizational Change Process

Training alone does not drive team adoption. AI changes how decisions are made, how people collaborate, and how accountability is shared. That means teams must see not just how to use a tool but why it improves their work.

Effective companies integrate AI into performance metrics, documentation processes, and team rituals. For example, a team might include AI-generated task summaries in daily standups or use AI recommendations as a checkpoint during quarterly reviews. These touchpoints reinforce trust and build a culture of AI-assisted work, not AI replacement.

Without this cultural layer, even the most capable tools will sit unused or, worse, provoke active pushback.

3. Choose Tools That Fit the Environment, Not Just the Model

The most advanced model is useless if it cannot be observed, controlled, or trusted. AI tools should be chosen not for accuracy alone but for their ability to operate within the actual constraints of a team’s workflow.

This includes:

- Role-based permissions to control AI actions

- Human-in-the-loop checkpoints to enable oversight

- Integration hooks with project management, documentation, or cloud systems

- Clear fallback mechanisms when the model fails
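Three of the requirements above (role-based permissions, human-in-the-loop checkpoints, and fallbacks) can be combined in a thin wrapper around any model call. The sketch below is a hypothetical illustration: the roles, actions, confidence threshold, and the `approve` callback are all assumptions, and the callback marks roughly where an integration hook into a ticketing or project management tool would sit in a real deployment.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Suggestion:
    action: str
    confidence: float

# Hypothetical role -> allowed actions mapping (role-based permissions).
PERMISSIONS = {
    "analyst": {"draft_summary"},
    "manager": {"draft_summary", "update_ticket"},
}
# Actions that always require human sign-off (human-in-the-loop checkpoint).
NEEDS_APPROVAL = {"update_ticket"}
MIN_CONFIDENCE = 0.7  # illustrative threshold

def run_guarded(role: str,
                model: Callable[[], Suggestion],
                approve: Callable[[Suggestion], bool]) -> str:
    """Return a description of what happened so every outcome can be logged."""
    try:
        suggestion = model()
    except Exception:
        # Fallback: a failing model must never block the underlying work.
        return "fallback: model error, routed to manual process"
    if suggestion.confidence < MIN_CONFIDENCE:
        return "fallback: low confidence, routed to manual process"
    if suggestion.action not in PERMISSIONS.get(role, set()):
        return f"blocked: role '{role}' may not trigger {suggestion.action}"
    if suggestion.action in NEEDS_APPROVAL and not approve(suggestion):
        return "held: human reviewer rejected the action"
    return f"executed: {suggestion.action}"
```

Returning an explicit outcome string for every path, rather than silently doing nothing, is what keeps the tool observable: each fallback, block, or rejection can be counted and reviewed.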

A report from MIT Sloan Management Review found that 57 percent of AI deployment failures stemmed from tooling complexity and poor integration with existing platforms, not model performance itself.

Conclusion: Start with Clarity, Not Just Models

AI does not fail because of weak algorithms. It fails when implementation skips over workflows, overlooks user behavior, and disconnects from the business logic it’s meant to support. Sustainable AI success begins with clarity about where the pain is, who it affects, and how AI can reduce friction rather than add complexity.

At Twendee Labs, we specialize in building workflow-aligned AI systems that solve real internal inefficiencies, from automation-ready prototypes to tools that teams actually trust and use.

To explore how Twendee helps companies make AI work from the inside out, visit our site, connect on LinkedIn, or follow us on Twitter/X.